Google Announces an AI Gesture Recognizer to Interact with the Web in Real Time

In Brief

Google has announced Airfinger, an AI-powered gesture recognition system that will allow users to interact with the web in real time using hand gestures.

It combines computer vision and machine learning to recognize hand gestures through a device's existing front-facing camera, which makes it more affordable and accessible than systems that require dedicated hardware.

Airfinger is currently capable of recognizing seven different gestures, but Google is already working on expanding its repertoire.

Google has announced a new AI-powered gesture recognition system that will allow users to interact with the web in real time using only hand gestures. The system, called Airfinger, is still in early development but has the potential to revolutionize the way we interact with our devices.

Airfinger uses a combination of computer vision and machine learning to accurately recognize hand gestures, making it possible to navigate websites, play games, and control smart home devices without ever touching a screen or keyboard. This technology could also have significant implications for accessibility, allowing people with disabilities to use mobile devices in new ways.

Airfinger works on the same basic principle as other gesture recognition systems, such as Microsoft Kinect or the Leap Motion Controller. However, instead of relying on a dedicated piece of hardware, it uses the front-facing camera of a smartphone or tablet. This makes it much more affordable and accessible to a wider range of users. It also means Airfinger can be used on the go, for example in presentations or meetings where a traditional gesture recognition setup is unavailable or impractical. In addition, the software is being updated regularly to improve its accuracy and expand the range of compatible devices.
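A camera delivers a continuous stream of frames. Software built on a recognizer like this therefore has to turn per-frame predictions into discrete commands. Otherwise a single held thumbs-up would trigger an action many times per second. The Python sketch below shows one common approach: it requires the same label on several consecutive frames, then fires once. It assumes the recognizer emits one gesture label (or `None`) per frame. The `GestureDebouncer` class and its `hold_frames` parameter are hypothetical and are not part of Airfinger or any Google API.

```python
from collections import deque
from typing import Deque, Optional


class GestureDebouncer:
    """Hypothetical helper (not a Google API) that turns per-frame gesture
    labels into discrete events.

    An event fires once when the same label has been seen on `hold_frames`
    consecutive frames; it can fire again only after the label changes.
    """

    def __init__(self, hold_frames: int = 5) -> None:
        self.hold_frames = hold_frames
        self._recent: Deque[Optional[str]] = deque(maxlen=hold_frames)
        self._last_fired: Optional[str] = None

    def update(self, label: Optional[str]) -> Optional[str]:
        """Feed one frame's label (None = no gesture); return a label when an event fires."""
        self._recent.append(label)
        if label != self._last_fired:
            # The held gesture changed or the hand left the frame: re-arm.
            self._last_fired = None
        stable = (
            len(self._recent) == self.hold_frames
            and len(set(self._recent)) == 1
        )
        if stable and label is not None and self._last_fired is None:
            self._last_fired = label
            return label
        return None


# Simulated stream: a held thumbs-up, one frame with no gesture, then thumbs-up again.
frames = ["Thumb_Up"] * 7 + [None] + ["Thumb_Up"] * 5
debouncer = GestureDebouncer(hold_frames=5)
events = [e for e in (debouncer.update(f) for f in frames) if e is not None]
print(events)  # ['Thumb_Up', 'Thumb_Up']
```

Raising `hold_frames` makes accidental triggers less likely but slows the response slightly. At 30 frames per second, a value of 5 corresponds to roughly a sixth of a second.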

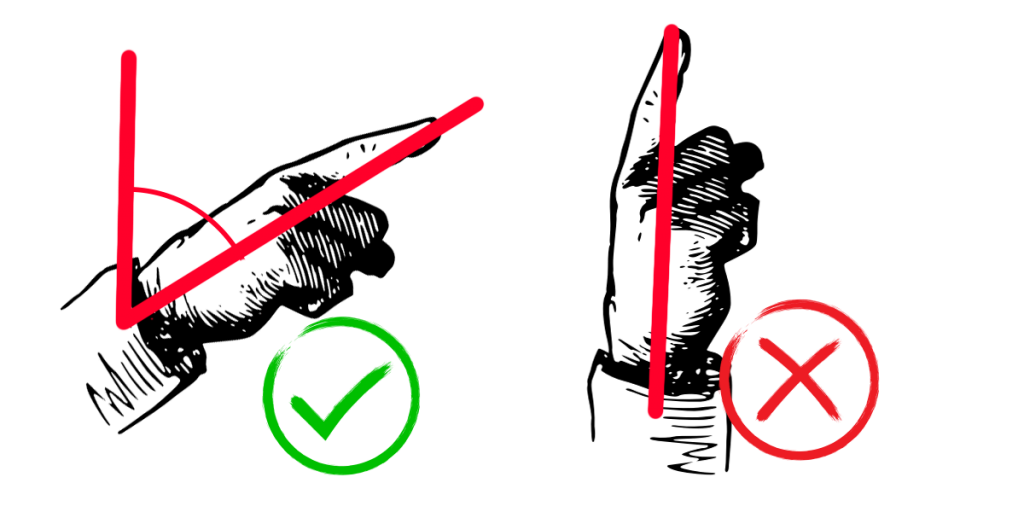

At the moment, Airfinger is only capable of recognizing seven different gestures: 👍, 👎, ✌️, ☝️, ✊, 👋, and 🤟. However, Google is already working on expanding its repertoire. The company is also working on improving the accuracy of the system, as well as its ability to work in low-light conditions. Google’s goal is to make Airfinger capable of recognizing more complex gestures and, eventually, sign language. This would greatly benefit people with disabilities who rely on sign language as their primary means of communication.

Google's MediaPipe framework can recognize the following gestures with its default pretrained model:

- Closed fist (`Closed_Fist`)
- Open palm (`Open_Palm`)
- Pointing up (`Pointing_Up`)
- Thumbs down (`Thumb_Down`)
- Thumbs up (`Thumb_Up`)
- Victory (`Victory`)
- Love (`ILoveYou`)
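The seven default labels appear to correspond one-to-one to the seven emoji listed earlier. The minimal Python sketch below shows how an application might consume them. It maps each label to its emoji and picks the best-scoring gesture for one hand. It does not call MediaPipe. `Category` is a local stand-in that only mimics the `category_name` and `score` fields of MediaPipe's scored results. The names `top_gesture` and `GESTURE_EMOJI`, the 0.5 threshold, and the label-to-emoji pairing (for example, `Open_Palm` to 👋) are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

# The seven labels of MediaPipe's default gesture model, paired with the emoji
# used earlier in this article (the pairing itself is an assumption).
GESTURE_EMOJI = {
    "Closed_Fist": "✊",
    "Open_Palm": "👋",
    "Pointing_Up": "☝️",
    "Thumb_Down": "👎",
    "Thumb_Up": "👍",
    "Victory": "✌️",
    "ILoveYou": "🤟",
}


@dataclass
class Category:
    """Local stand-in for one scored label; mimics the category_name and
    score fields of MediaPipe's results so this sketch runs without MediaPipe."""
    category_name: str
    score: float


def top_gesture(categories: List[Category], min_score: float = 0.5) -> Optional[str]:
    """Return the best-scoring label among the seven known gestures, or None.

    Unknown labels are ignored; min_score is an illustrative threshold,
    not a MediaPipe default.
    """
    known = [c for c in categories if c.category_name in GESTURE_EMOJI]
    if not known:
        return None
    best = max(known, key=lambda c: c.score)
    return best.category_name if best.score >= min_score else None


label = top_gesture([Category("Victory", 0.91), Category("Open_Palm", 0.05)])
print(label)  # Victory
print(top_gesture([Category("Thumb_Up", 0.30)]))  # None
```

As we understand MediaPipe's Python Tasks API, a recognition result holds one list of scored categories per detected hand. A function like `top_gesture` would therefore be applied to each hand's list separately, and `GESTURE_EMOJI[label]` used for display.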

Google has made it available to the public on GitHub, and the fact that the company's R&D team is already working on it suggests that it is a priority for Google. With any luck, we will see Airfinger roll out to devices in the near future.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He is an expert in SEO and digital marketing with 10 years of experience. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
