AI can extract sensitive images and expose our private lives

In Brief

AI models may learn and remember certain data and specific images, which is a concern.

It might lead to new legal disputes.

There are numerous cutting-edge technologies that inspire wonder, and someone is suing them. In this case, the StableDiffusion model’s developer is being sued. StableDiffusion is an entirely open-source and free-to-use AI that can produce graphics based on a text request. You can use it to create photorealistic images or those that are inspired by other artists’ styles.

However, it is obvious that artists do not appreciate this position; ArtStation, a sort of Instagram for artists, even staged a “No AI” strike. The key argument they bring up is that the model trains on the images are not StabilityAI’s property. Imagine someone took a snapshot of you at a meetup with friends and uploaded it to Instagram. Then, an AI would get its hands on it, and the photo would be analyzed by the model and used in training.

Models may learn and remember certain data and specific images, which is a concern. In other words, a blackmailer can take a query about a gathering with friends and synthesize your uploaded image from that. This is already a little worrisome, especially given that StableDiffusion’s training set contains more than 5 billion pics from the Internet, including private images that were once public. The misuse of private images does happen, unfortunately. In 2013, a doctor snapped a picture of a patient, and the picture appeared on the clinic’s website.

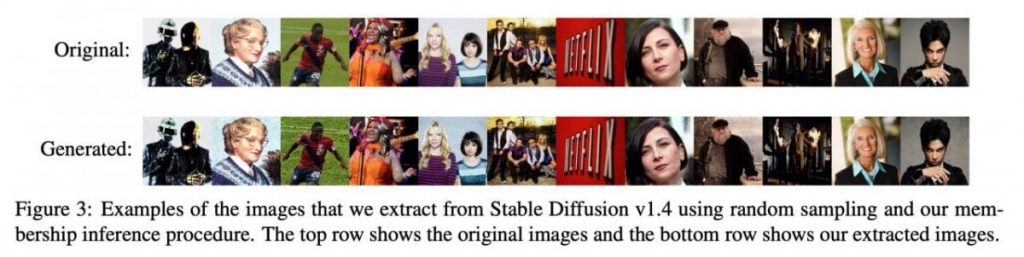

Here is an article that demonstrated how images were acquired using various AI models and how closely they matched training images (spoiler: there is some noise, but it is generally very similar). These defenses are applicable to the aforementioned action, and the jury will view these models differently in this instance since we can claim that they remember and replicate stuff (without the rights to do so).

However, it’s too early to claim that “models learn and don’t come up with anything” because, as I mentioned earlier, only 100 images were produced from the 5 billion training sessions (the authors marked with their hands the top 1,000 most similar generations for the most common prompts, but there were mostly no duplicates).

Read more about AI:

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.

More articles

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.