AI Laws Unveiled: How the EU, US, China, and Others Are Shaping the Future of Artificial Intelligence

In Brief

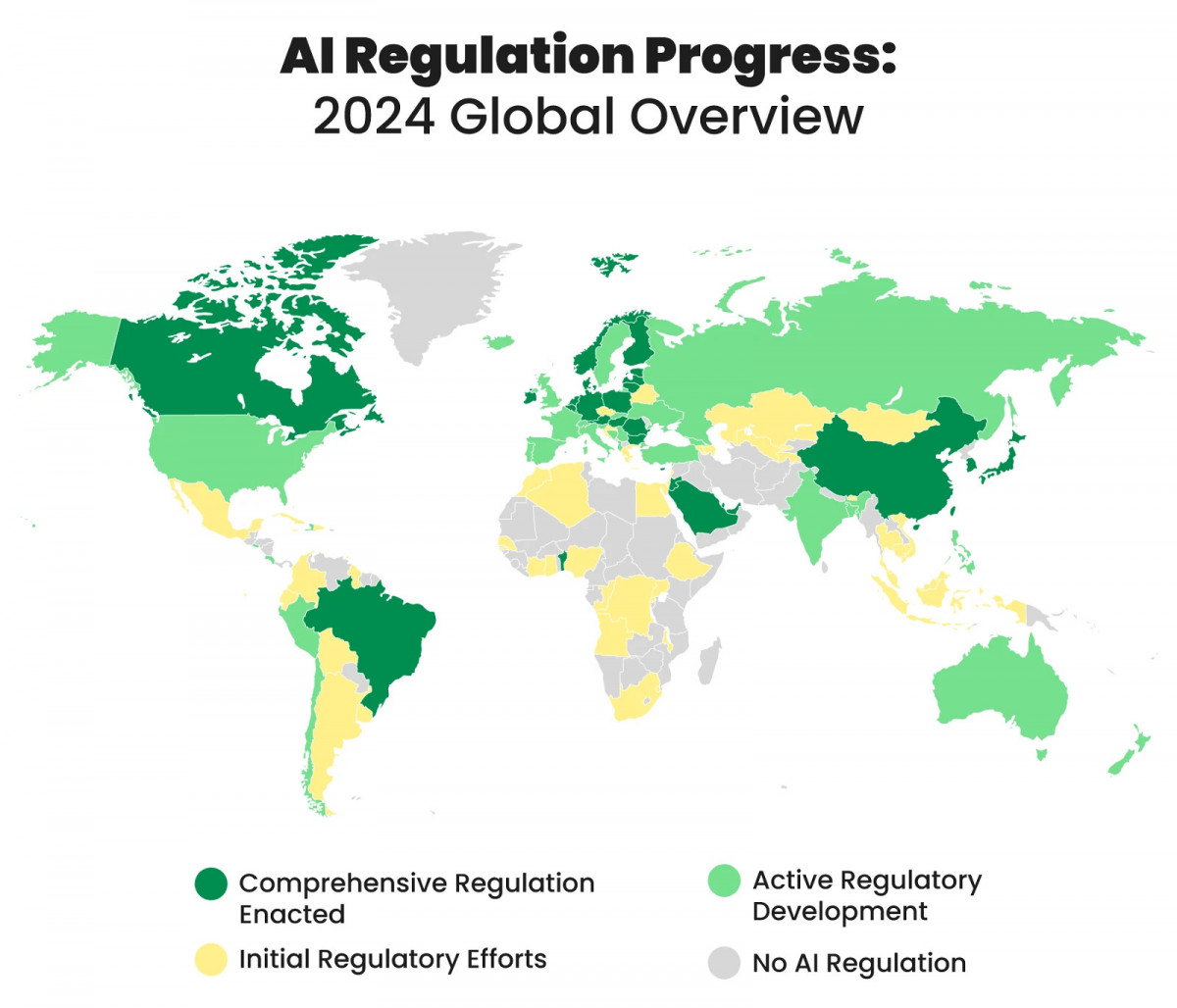

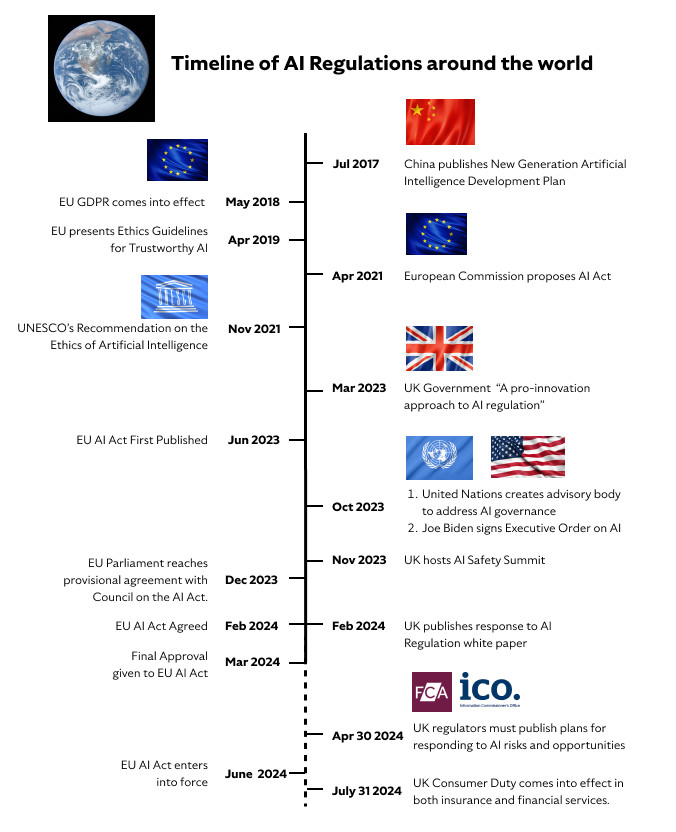

The global impact of artificial intelligence has created challenges for governments and regulators trying to manage its development and use across countries and regions.

The upheaval that artificial intelligence has brought to business and society has left governments and regulators struggling to manage its development and use. Here, we give an overview of the current state of AI legislation in various countries and regions.

Photo: AuthorityHacker

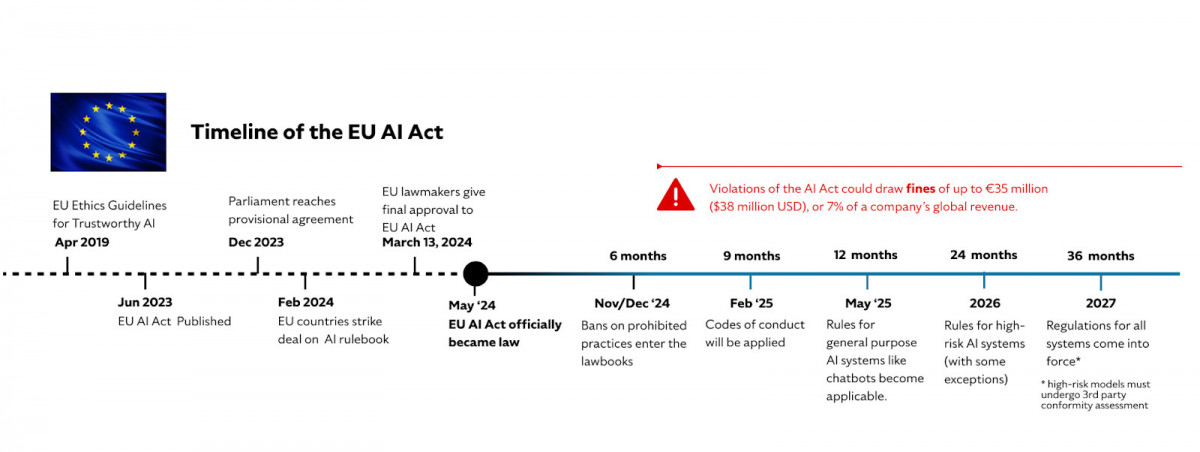

European Union

With the passage of its landmark AI Act, the European Union has established itself as a leader in comprehensive AI legislation. The Act, approved by the European Parliament in March 2024, sorts AI systems into four risk categories: unacceptable, high, limited, and minimal or no risk.

Photo: mind foundry

AI systems that the Act deems to pose an unacceptable risk, including those that use subliminal manipulation techniques, are prohibited. High-risk applications are subject to strict requirements on testing, documentation, and human oversight. Limited-risk systems, such as chatbots, must meet transparency obligations.

The AI Act is being phased in gradually: the bans on prohibited practices apply first, and most of its obligations take effect in 2026. The most serious breaches can draw fines of up to €35 million or 7% of global annual turnover, whichever is higher, signaling the EU's determination to enforce the rules strictly.

United States

On AI governance, the US has favored a more decentralized approach. Executive orders and recommendations provide general direction at the federal level, while specific regulations have largely been left to individual states and sector-specific agencies.

The executive order on "Safe, Secure, and Trustworthy" AI, signed by President Biden in October 2023, set new requirements for the testing of AI systems and for reporting on them to the government. At the state level, hundreds of AI-related bills have been introduced, many of them focused on specific uses such as deepfakes or autonomous vehicles.

California, New York, and Florida are leading the way in state-level AI legislation, tackling everything from algorithmic discrimination to generative AI. Meanwhile, federal agencies such as the Securities and Exchange Commission are addressing AI use within their own areas of jurisdiction.

China

China published its "New Generation Artificial Intelligence Development Plan" in 2017, making it one of the first countries to adopt a national AI strategy. The plan set out China's AI development goals through 2030, addressing both technological progress and AI's impact on society.

Since then, China has introduced rules targeting specific AI applications, such as algorithmic recommendation systems and generative AI services. The government has tried to balance innovation and control, giving companies and research institutions room to push the boundaries while strictly regulating AI services offered to the general public.

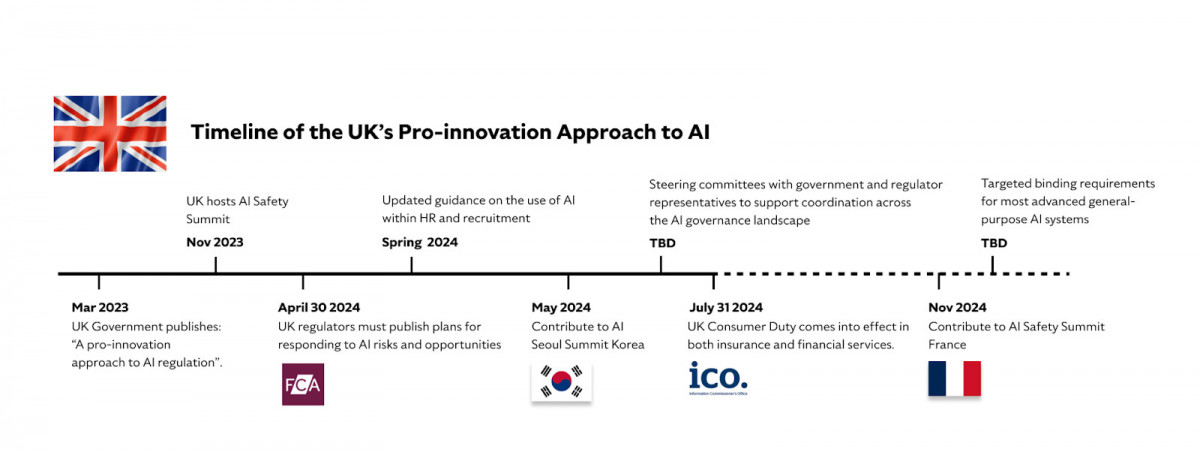

United Kingdom

The UK has opted for a "pro-innovation" approach to regulating AI, forgoing broad legislation in favor of sector-specific oversight. Under the government's framework, published in March 2023, existing regulators are responsible for AI in their own domains, supported by "central AI regulatory functions."

Photo: mind foundry

This approach aims to balance the needs of investment and innovation with effective governance. By organizing and hosting the AI Safety Summit in November 2023, the UK has also taken a prominent role in global discussions on AI governance.

Canada

Canada is developing the Artificial Intelligence and Data Act (AIDA) to protect its citizens from high-risk AI and to encourage responsible AI use. AIDA places a strong emphasis on human rights and safety, and it would restrict reckless uses of AI.

Canada has also put into effect a Directive on Automated Decision-Making, which sets rules for the federal government's use of automated decision-making systems. The country's approach aims to address domestic concerns while aligning with international standards.

Japan

Japan favors "agile governance" in its regulatory approach to AI. Under this framework, the government issues non-binding guidelines while respecting the private sector's voluntary self-regulation efforts.

Although the Japanese government has published AI principles and guidelines, it has not yet enacted strict, legally binding AI laws. This flexible approach seeks to address ethical and safety concerns while promoting innovation.

India

India is currently developing a legal framework for AI. The government has formed a task force to help set up an AI regulatory body and to advise on the ethical, legal, and social issues raised by AI.

Although AI-specific legislation is still being drafted, India's existing data protection and technology laws already provide some oversight of AI applications. Its eventual approach is likely to reflect the country's particular socioeconomic concerns as well as its ambition to become a global technology hub.

Australia

Australia has approached AI legislation cautiously, focusing on fitting AI into existing legal frameworks. Although the government has stressed the importance of responsible AI development, it has not yet enacted a comprehensive, AI-specific law.

Critics warn that this approach may leave Australia "behind the pack" on AI governance. The government counters that it allows a more flexible response to rapidly changing technology.

Brazil

Brazil is working on a comprehensive AI bill that would ban certain high-risk AI systems, create a dedicated regulatory agency, and make AI developers and operators subject to civil liability.

The proposed law would require prompt reporting of serious security incidents and would give people the right to understand AI-driven decisions and to have biases corrected. The bill reflects Brazil's ambition to become a leader in AI governance in Latin America.

South Africa

South Africa is moving ahead with AI rules, with the Department of Communications and Digital Technology publishing a discussion document on AI in April 2024. For now, AI in South Africa is governed by existing laws such as the Protection of Personal Information Act (POPIA).

The country has also established the Centre for Artificial Intelligence Research (CAIR), a further sign of its commitment to expanding AI capabilities and building suitable governance frameworks.

Switzerland

Switzerland has decided not to create a separate AI law, choosing instead to adapt existing legislation where needed. This involves amending domestic competition, product liability, and general civil law to account for AI systems, and adding AI transparency requirements to existing data protection law.

The Swiss approach emphasizes flexibility and adaptability, in keeping with the country's reputation for innovation and its sizeable AI research community.

Global Trends and Challenges

Despite their differing approaches, several common themes are emerging in global AI governance. Many jurisdictions are adopting risk-based frameworks, emphasizing transparency, and debating ethical questions. Sector-specific regulation is also becoming more common as more organizations recognize both the potential benefits and the risks of artificial intelligence.

Photo: mind foundry

Challenges remain in balancing innovation with safety, keeping pace with rapidly advancing technologies, and harmonizing laws across national borders. Enforcing compliance and addressing bias and fairness in AI systems remain priorities for regulators worldwide.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Victoria is a writer on a variety of technology topics including Web3.0, AI and cryptocurrencies. Her extensive experience allows her to write insightful articles for the wider audience.
