StyleDrop: Google’s Neural Network That Replicates Any Visual Style

In Brief

StyleDrop is a neural network that can mimic and transfer any visual style, capturing its nuances and intricacies.

Google has unveiled StyleDrop, a new neural network that can learn a visual style from a reference image and apply it to newly generated images. Built on Muse, Google's fast text-to-image model, it lets users generate images that faithfully follow a specific style, capturing its nuances and intricacies.

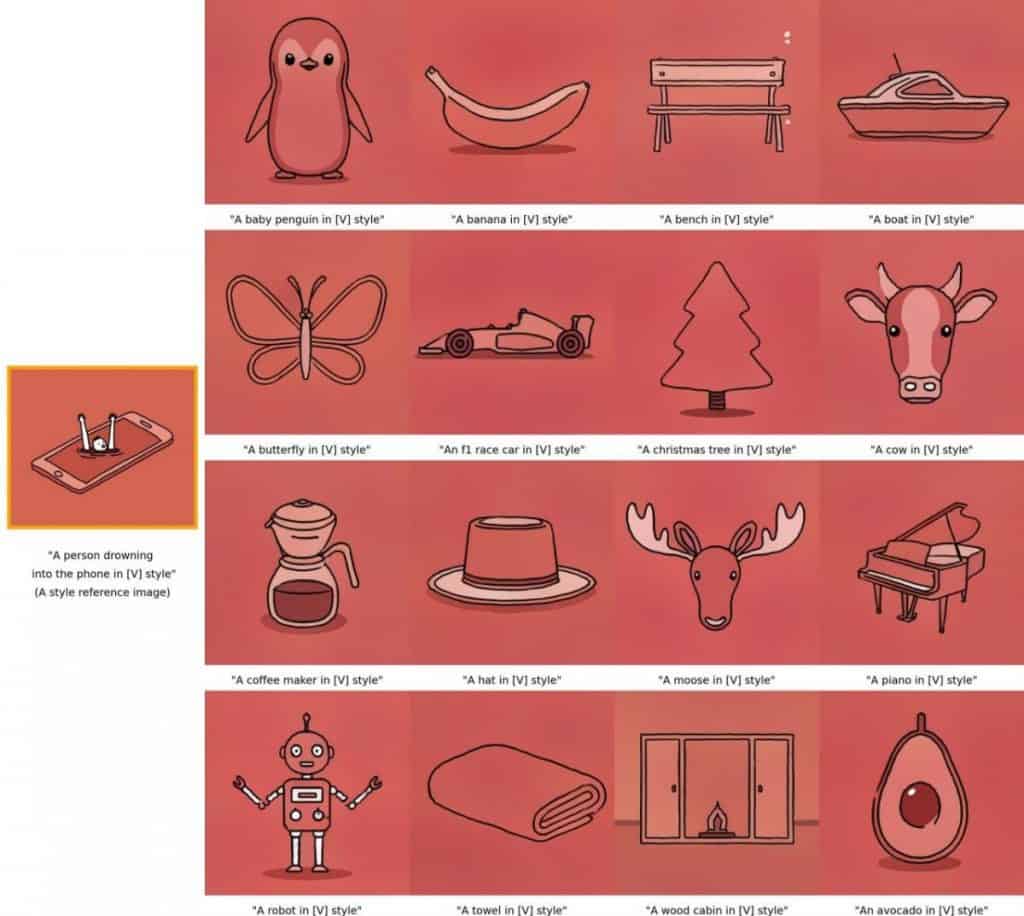

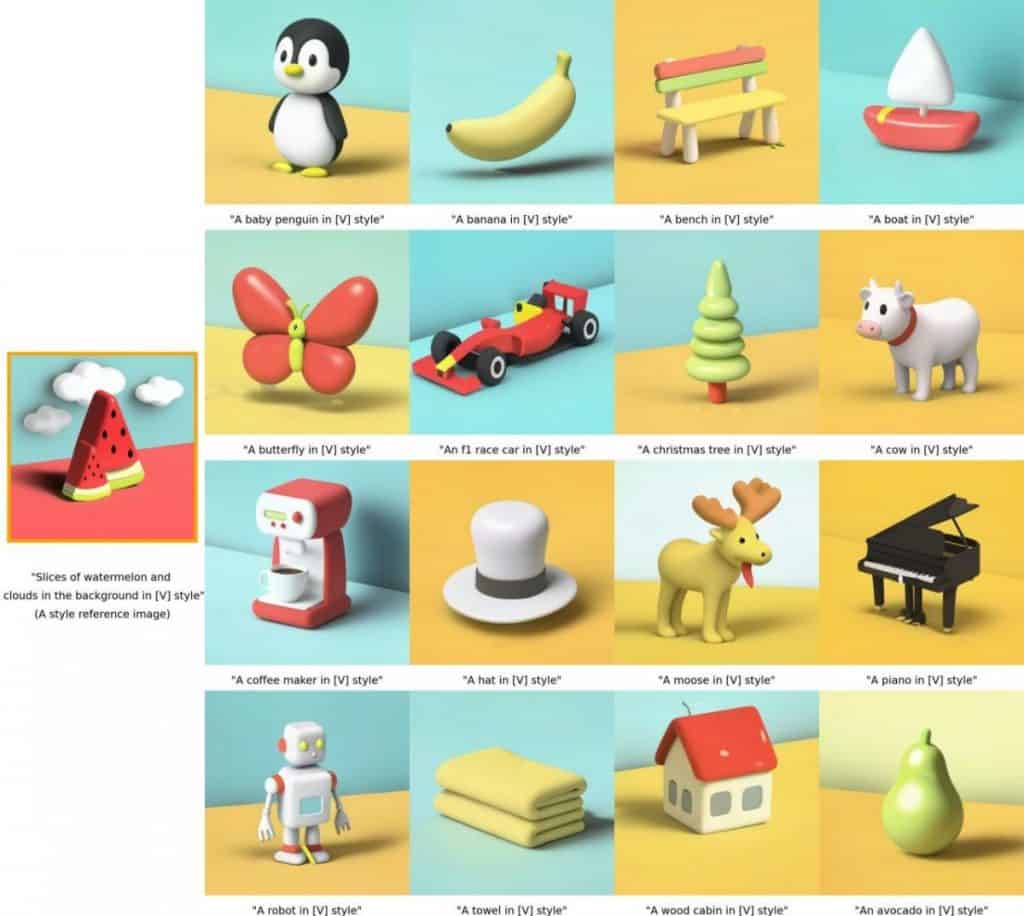

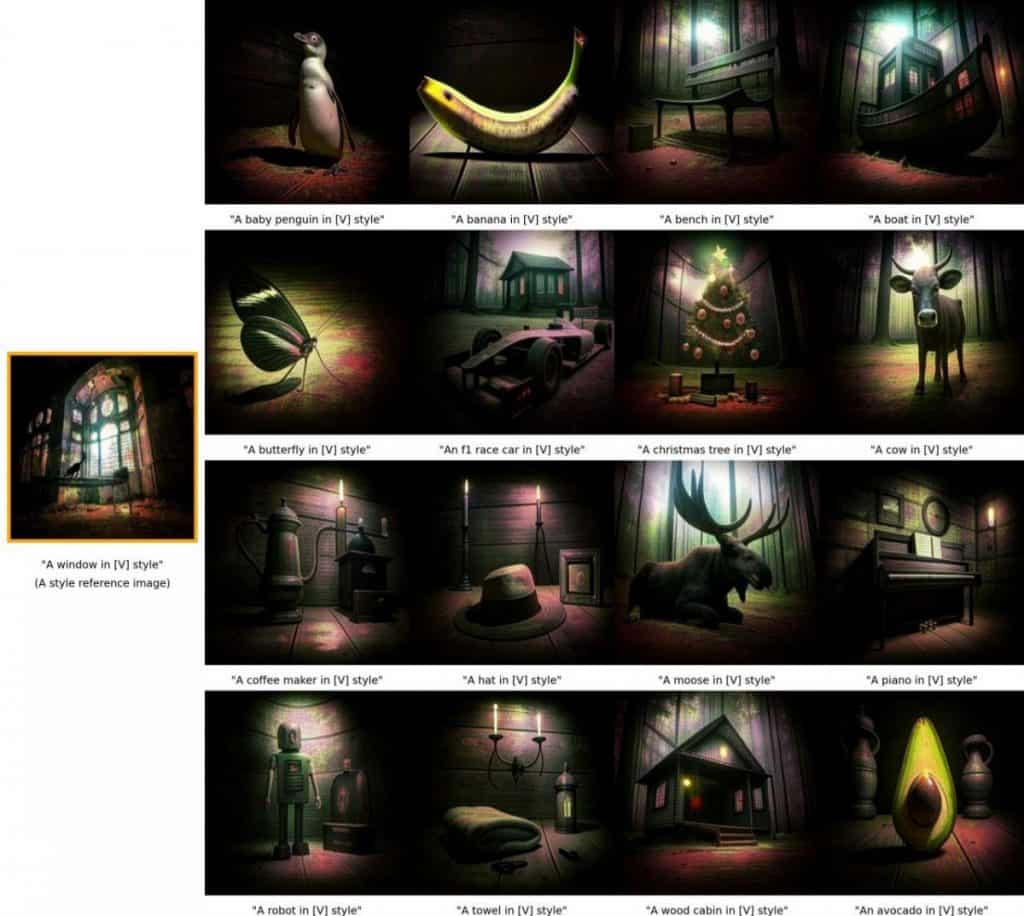

StyleDrop allows users to select an original image with the desired visual style and seamlessly transfer it to new images while preserving all the unique characteristics of the chosen style. The application can work with images that are completely distinct from one another. For example, users can use a children’s drawing as a base and generate a stylized logo or character.

StyleDrop is built on Muse, a generative vision transformer, and is trained using feedback on generated images, which can come from users or from an automated measure such as CLIP score. Fine-tuning touches only a small number of trainable parameters, less than 1% of the total model parameters. Through iterative training, StyleDrop improves the quality of its generated images, producing impressive results in a matter of minutes.
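To give a sense of how a fine-tune can stay under 1% of a model's parameters, here is a rough back-of-the-envelope sketch. It assumes small bottleneck adapter layers are added to each transformer block while the base weights stay frozen, which is one common parameter-efficient approach. The layer count, hidden size, and bottleneck width below are hypothetical values chosen for illustration, not Muse's actual configuration.

```python
def base_params_per_layer(hidden: int) -> int:
    # Rough weight count for one transformer block, biases ignored:
    # attention projections (4 * hidden^2) plus a 4x-wide MLP (8 * hidden^2).
    return 12 * hidden * hidden


def adapter_params_per_layer(hidden: int, bottleneck: int) -> int:
    # Down-projection and up-projection weights plus their biases.
    return 2 * hidden * bottleneck + bottleneck + hidden


def trainable_fraction(layers: int, hidden: int, bottleneck: int) -> float:
    # Share of all parameters that are trained when only adapters are updated.
    base = layers * base_params_per_layer(hidden)
    adapters = layers * adapter_params_per_layer(hidden, bottleneck)
    return adapters / (base + adapters)


# Hypothetical model size, for illustration only.
LAYERS, HIDDEN, BOTTLENECK = 24, 1024, 16

fraction = trainable_fraction(LAYERS, HIDDEN, BOTTLENECK)
print(f"Trainable share of parameters: {fraction:.4%}")
```

With these assumed sizes the adapters account for well under 1% of the total, which is consistent with the figure quoted above.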

The versatility of StyleDrop makes it an indispensable tool for brands seeking to develop their unique visual style. With StyleDrop, brands can efficiently prototype ideas in their preferred style, making it an invaluable asset for creative teams and designers.

An extensive study of style tuning for text-to-image models found that StyleDrop outperformed other methods, including DreamBooth and Textual Inversion applied to Imagen or Stable Diffusion. StyleDrop consistently delivered high-quality images that closely adhere to the user-specified style.

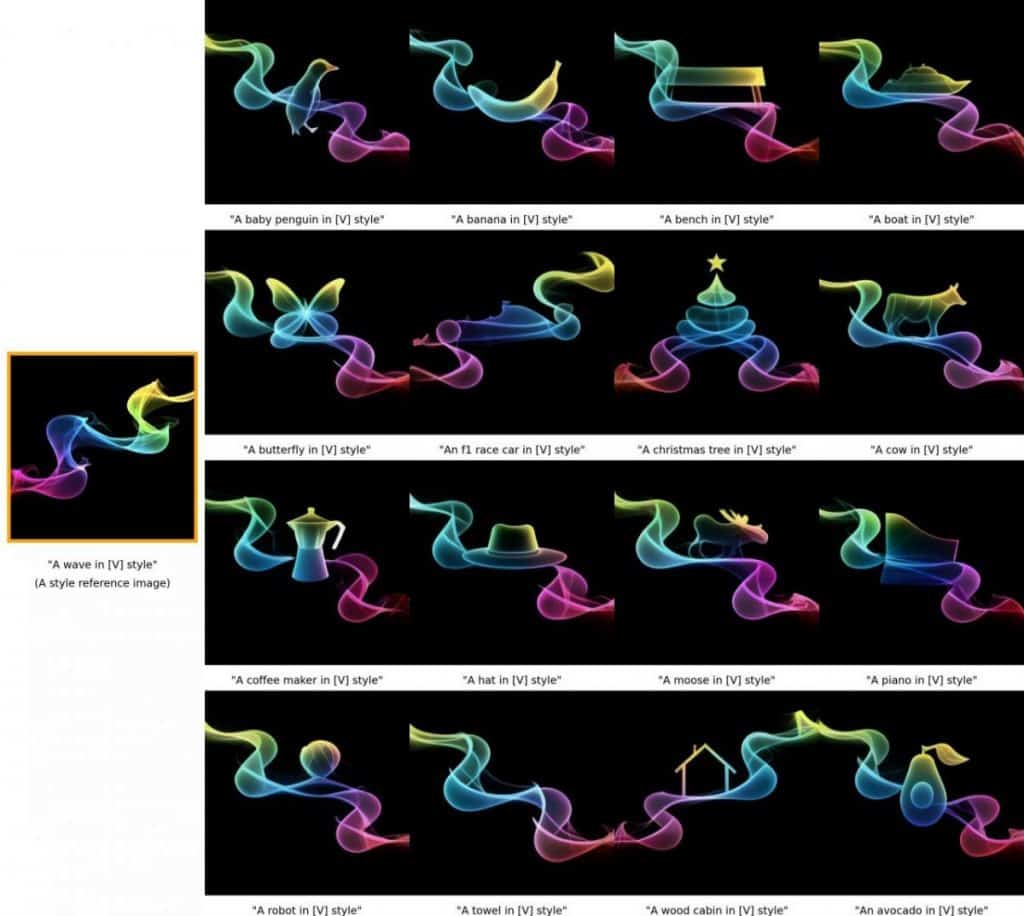

The text-based prompts provided by users play a crucial role in StyleDrop’s image generation process. By appending a natural language style descriptor (e.g., “in melting golden 3D rendering style” or “in abstract rainbow-colored flowing smoke wave design”) to the content descriptors during both training and generation, StyleDrop precisely captures the desired style.
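The prompt construction described above can be sketched in a few lines. This is only an illustration of appending a style descriptor to a content descriptor. The function name `build_prompt` and the content descriptors are hypothetical, and nothing here calls StyleDrop itself, which has not been released.

```python
def build_prompt(content: str, style_descriptor: str) -> str:
    """Append a natural-language style descriptor to a content descriptor."""
    content = content.strip().rstrip(".,")
    style = style_descriptor.strip()
    if not content or not style:
        raise ValueError("both content and style descriptor are required")
    return f"{content} {style}"


# Style descriptor taken from the example in the article.
STYLE = "in melting golden 3D rendering style"

# The same descriptor is reused for the training prompt and for new prompts.
training_prompt = build_prompt("A teapot", STYLE)
generation_prompts = [build_prompt(c, STYLE) for c in ("A banana", "The letter G")]

print(training_prompt)
for prompt in generation_prompts:
    print(prompt)
```

Keeping the descriptor identical across training and generation is the point of the sketch: the style phrase acts as a consistent handle for the learned style.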

StyleDrop can also be trained on a brand's own assets, so that generated images carry that brand's visual identity. Using the same style descriptor during training and generation, brands can rapidly prototype ideas in their own distinctive style.

The generation process with StyleDrop is remarkably efficient, taking no more than three minutes. This quick turnaround time enables users to explore numerous creative possibilities and experiment with different styles swiftly.

While StyleDrop demonstrates immense potential for brand development, it is important to note that the application has not yet been released to the public. The Google team is actively addressing copyright concerns and working towards ensuring legal compliance, enabling a smooth and secure launch.

By recreating a chosen visual style, StyleDrop helps brands and individuals create appealing visual identities in an increasingly competitive digital landscape. It gives brands a tool for building their own visual storylines with ease and precision.



About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract an audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
