ControlNet Helps You Make Perfect Hands With Stable Diffusion 1.5

In Brief

ControlNet is an easy way to fine-tune Stable Diffusion with extra input conditions such as depth maps or sketches.

It can be used to train models that give users finer control over Stable Diffusion (SD).

ControlNet is open source and can be used with Stable Diffusion web UIs.

The one thing text-to-image AI generators have consistently struggled with is hands. While the images are generally impressive, the hands are less so: extra fingers, oddly bent joints, and a clear lack of understanding on the AI’s part of what hands are supposed to look like. This no longer has to be the case, as ControlNet can help Stable Diffusion create perfect, realistic-looking hands.

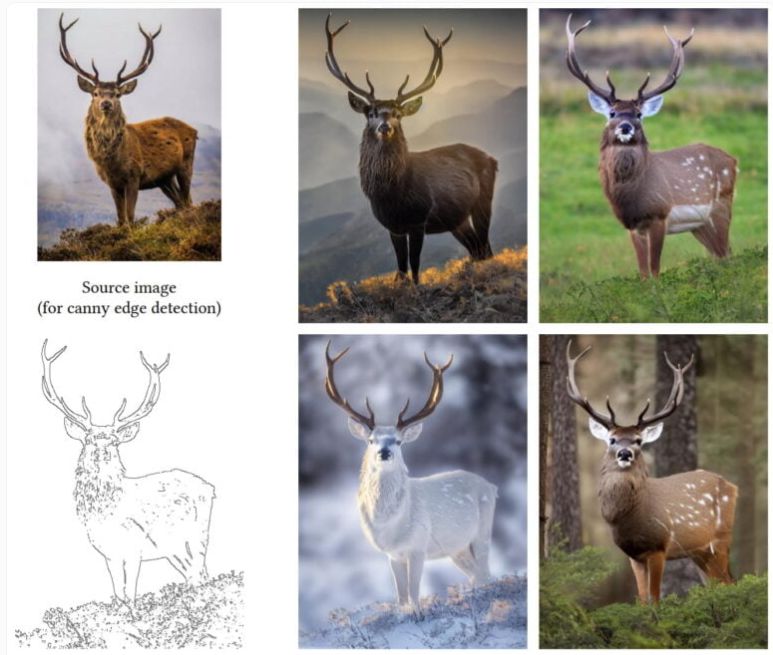

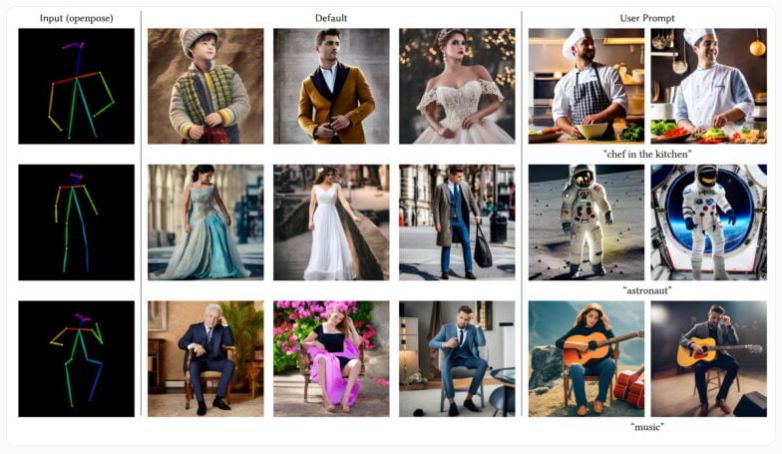

ControlNet is a new technology that lets you use a sketch, outline, depth map, or normal map to guide models based on Stable Diffusion 1.5. This means you can now get almost perfect hands from any custom 1.5 model, as long as you provide the right guidance. ControlNet can be thought of as a revolutionary tool that gives users far more control over their images.

To get flawless hands, use the ControlNet extension for the AUTOMATIC1111 (A1111) web UI, specifically its Depth preprocessor and model. Take a few close-up selfies of your hands and upload one to the ControlNet panel in the txt2img tab. Then write a simple prompt for the DreamShaper model, such as “fantasy artwork, Viking man showing hands closeup,” and experiment with the ControlNet settings. A little experimentation with the Depth settings should produce beautiful, realistic-looking hands.
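The same workflow can also be scripted. The sketch below is a minimal example, assuming the A1111 web UI was launched with its `--api` flag on the default port 7860 and that the ControlNet extension is installed. The `alwayson_scripts` field names reflect our understanding of the extension’s API and may differ between versions; the default checkpoint name `control_sd15_depth` and the file name `my_hand.jpg` are placeholders for whatever your install and photos are actually called.

```python
import base64
import json
import urllib.request

# Assumption: A1111 started with --api on its default port.
A1111_URL = "http://127.0.0.1:7860"


def build_txt2img_payload(hand_photo: bytes, prompt: str,
                          model: str = "control_sd15_depth",
                          steps: int = 20) -> dict:
    """Build a txt2img request that sends a hand photo through ControlNet's depth preprocessor.

    The "alwayson_scripts"/"controlnet" field names are an assumption about the
    extension's API; check them against the version you have installed.
    """
    encoded = base64.b64encode(hand_photo).decode("ascii")
    return {
        "prompt": prompt,
        "steps": steps,
        "alwayson_scripts": {
            "controlnet": {
                "args": [{
                    "input_image": encoded,  # the close-up hand photo, base64-encoded
                    "module": "depth",       # preprocessor: photo -> depth map
                    "model": model,          # placeholder ControlNet checkpoint name
                }]
            }
        },
    }


def send_txt2img(payload: dict, base_url: str = A1111_URL) -> list:
    """POST the payload to the web UI and return the base64-encoded result images."""
    req = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["images"]


# Usage (requires a running web UI):
# with open("my_hand.jpg", "rb") as f:
#     payload = build_txt2img_payload(f.read(), "fantasy artwork, Viking man showing hands closeup")
# images = send_txt2img(payload)
```

Keeping payload construction separate from the network call makes it easy to inspect or tweak the ControlNet settings before anything is sent.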


ControlNet’s preprocessors convert the image they are given into a depth map, a normal map, or a sketch, which is then used as the conditioning input for the model. Of course, you can also upload your own depth maps or sketches directly. This gives you maximum flexibility when setting up a 3D scene, so you can focus on the style and quality of the final image.
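If you supply your own depth map, it first has to be turned into an ordinary image. The helper below is a hypothetical NumPy sketch that assumes the common convention for ControlNet depth inputs (nearer surfaces brighter, as in MiDaS-style output) and takes raw per-pixel distances, where a larger value means farther away.

```python
import numpy as np


def depth_to_control_image(distance: np.ndarray) -> np.ndarray:
    """Convert an H x W distance map into an 8-bit H x W x 3 depth control image.

    Assumption: nearer surfaces should be brighter (white = nearest, black = farthest).
    """
    d = distance.astype(np.float64)
    span = d.max() - d.min()
    if span == 0:
        gray = np.zeros_like(d)  # flat scene: no depth cues to encode
    else:
        gray = (d.max() - d) / span  # 1.0 = nearest pixel, 0.0 = farthest
    gray8 = np.round(gray * 255).astype(np.uint8)
    return np.stack([gray8] * 3, axis=-1)  # same value in each RGB channel
```

Normalizing to the full 0 to 255 range keeps the relative depth ordering while discarding absolute units, which the conditioning image does not need.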

We strongly suggest you look at the excellent ControlNet tutorial that Aitrepreneur has recently published.

ControlNet greatly improves control over Stable Diffusion’s image-to-image capabilities

Although Stable Diffusion is best known for creating images from text, it can also create images from templates. This image-to-image pipeline is frequently used to enhance generated images or to produce new images based on a template.
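How much of the template survives is usually governed by a “denoising strength” setting. The function below is a minimal sketch of the step arithmetic, modeled on how the Hugging Face diffusers img2img pipeline splits its schedule as we understand it: the template is noised to an intermediate step, and only the remaining steps are denoised. The function name is hypothetical.

```python
def img2img_schedule(num_inference_steps: int, strength: float) -> tuple:
    """Return (steps_skipped, steps_run) for an image-to-image pass.

    strength = 0 leaves the template untouched; strength = 1 noises it almost
    completely, so the result depends mostly on the prompt.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    steps_run = min(int(num_inference_steps * strength), num_inference_steps)
    steps_skipped = num_inference_steps - steps_run
    return steps_skipped, steps_run
```

For example, 50 steps at strength 0.75 skips the first 13 steps and denoises the last 37, so the template’s overall layout tends to survive while its details are regenerated.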

While Stable Diffusion 2.0 offers the capability to use depth data from an image as a template, control over this process is quite restricted. This approach is not supported by the earlier version, 1.5, which is still commonly used due to the enormous number of custom models, among other reasons.

ControlNet copies the weights of each Stable Diffusion block into a trainable copy and a locked copy. The locked copy preserves the capabilities of the production-ready diffusion model, whereas the trainable copy learns new conditions for image synthesis by being fine-tuned on small datasets.
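The locked/trainable split can be illustrated with a toy example. In the ControlNet paper, the two copies are joined by “zero convolutions,” 1×1 convolutions whose weights and biases start at zero, so that before training the combined block behaves exactly like the original model. The NumPy sketch below replaces a real U-Net block with a hypothetical linear layer plus ReLU (`block`) and omits biases for brevity; it demonstrates that property and is not ControlNet’s actual implementation.

```python
import numpy as np


def block(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for one Stable Diffusion block: linear layer + ReLU."""
    return np.maximum(x @ w, 0.0)


class ControlledBlock:
    """Locked copy + trainable copy, joined by zero-initialized 1x1 'zero convolutions'."""

    def __init__(self, w_pretrained: np.ndarray):
        dim = w_pretrained.shape[0]
        self.w_locked = w_pretrained            # frozen: never updated during training
        self.w_trainable = w_pretrained.copy()  # independent copy, fine-tuned on the new condition
        self.z_in = np.zeros((dim, dim))        # zero conv applied to the condition input
        self.z_out = np.zeros((dim, dim))       # zero conv applied to the trainable branch output

    def __call__(self, x: np.ndarray, cond: np.ndarray) -> np.ndarray:
        locked = block(x, self.w_locked)
        control = block(x + cond @ self.z_in, self.w_trainable)
        return locked + control @ self.z_out  # control branch contributes 0 at initialization
```

Because both zero convolutions output zeros at first, fine-tuning starts from the unmodified model, which helps explain why small datasets do not immediately degrade it.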

All ControlNet models work with Stable Diffusion and offer considerably more control over the generative AI. The team provides samples of people in fixed poses rendered in several variations, various interior photos based on the spatial layout of a template image, and variations of bird images.



About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
