Text-to-Image AI Model

What is a Text-to-Image AI Model?

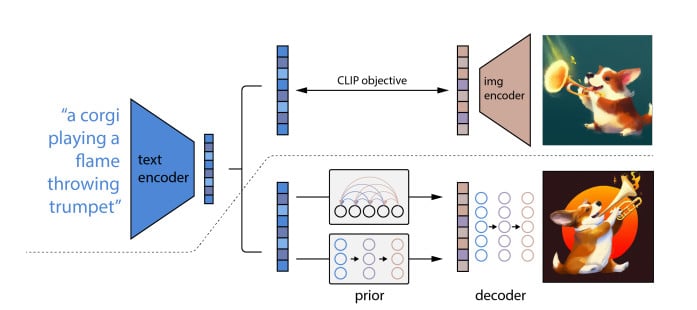

A text-to-image model is a machine learning model that takes a natural language description as input and generates an image matching that description. Text-to-image models typically combine two components: a language model that converts the input text into a latent representation, and a generative image model that produces an image conditioned on that representation. The most effective models are typically trained on large volumes of text and image data scraped from the internet.
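
The two-component design described above can be sketched as a toy pipeline. This is only an illustration of the data flow, not a real model: the hashing "encoder", the sine-based "generator", and names such as `encode_text`, `generate_image`, and `LATENT_DIM` are assumptions made up for this example, standing in for trained neural networks.

```python
import hashlib
import math

LATENT_DIM = 8  # hypothetical size of the text latent


def encode_text(prompt: str) -> list[float]:
    """Toy stand-in for the language model: map a prompt to a fixed-size latent."""
    latent = [0.0] * LATENT_DIM
    for token in prompt.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        for i in range(LATENT_DIM):
            # Map each byte to [-1, 1] and accumulate the per-token contributions.
            latent[i] += digest[i] / 127.5 - 1.0
    # Normalize to unit length (fall back to 1.0 for an empty prompt).
    norm = math.sqrt(sum(v * v for v in latent)) or 1.0
    return [v / norm for v in latent]


def generate_image(latent: list[float], size: int = 4) -> list[list[int]]:
    """Toy stand-in for the generative image model: latent -> grayscale grid."""
    image = []
    for y in range(size):
        row = []
        for x in range(size):
            # Each pixel depends on its position and on the conditioning latent.
            value = sum(
                component * math.sin((x + 1) * (i + 1) + y)
                for i, component in enumerate(latent)
            )
            row.append(int(255 * (math.tanh(value) + 1) / 2))
        image.append(row)
    return image


def text_to_image(prompt: str, size: int = 4) -> list[list[int]]:
    """Full pipeline: text -> latent representation -> conditioned image."""
    return generate_image(encode_text(prompt), size)


if __name__ == "__main__":
    for row in text_to_image("a dog dancing at a disco"):
        print(row)
```

In a real system, both stages would be learned from paired text and image data; the point of the sketch is only that the image generator never sees the raw text, just the latent produced by the text encoder.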

Understanding of Text-to-Image AI Model

Researchers at the University of Toronto released alignDRAW, the first modern text-to-image model, in 2015. alignDRAW extended the previously introduced DRAW architecture so that it could be conditioned on text sequences. Although the images it generated were blurry and not photorealistic, the model could generalize to objects absent from the training data and respond appropriately to novel prompts, which showed that it was doing more than simply “memorizing” the training set.

DALL-E, a transformer-based system from OpenAI unveiled in January 2021, was one of the first text-to-image models to attract significant public interest. Its successor, DALL-E 2, which could produce more complex and realistic images, was announced in April 2022. Stable Diffusion was released publicly in August of the same year. Also in August 2022, “personalization” of large text-to-image foundation models was demonstrated: a new concept can be taught to the model from a small number of photos of an object that was not part of the foundation model’s training set. This is achieved through textual inversion.
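
The core idea behind textual inversion, keeping the pretrained model frozen and learning only a new embedding for the new concept, can be illustrated with a deliberately simplified sketch. Everything here is an assumption for illustration: a small fixed linear map (`frozen_model`) stands in for the pretrained network, the "photos" are plain feature vectors, and the loss is a mean squared error. The actual technique optimizes a new token embedding against the training objective of the real text-to-image model.

```python
import random


def frozen_model(embedding, weights):
    """Frozen linear map standing in for the pretrained model (never updated)."""
    return [sum(w * e for w, e in zip(row, embedding)) for row in weights]


def mean_squared_error(embedding, weights, example_features):
    """Average squared distance between the model output and each example."""
    output = frozen_model(embedding, weights)
    total = sum(
        (o - t) ** 2 for target in example_features for o, t in zip(output, target)
    )
    return total / len(example_features)


def learn_concept_embedding(weights, example_features, steps=500, lr=0.05, seed=0):
    """Gradient descent on the new concept's embedding only; weights stay fixed."""
    rng = random.Random(seed)
    dim = len(weights[0])
    embedding = [rng.uniform(-0.1, 0.1) for _ in range(dim)]
    for _ in range(steps):
        output = frozen_model(embedding, weights)
        grad = [0.0] * dim
        for target in example_features:
            for k, row in enumerate(weights):
                error = output[k] - target[k]
                for j in range(dim):
                    # Derivative of the squared error with respect to embedding[j].
                    grad[j] += 2 * error * row[j] / len(example_features)
        embedding = [e - lr * g for e, g in zip(embedding, grad)]
    return embedding


if __name__ == "__main__":
    # Hypothetical frozen weights and three example "photos" of the new concept.
    weights = [[1.0, 0.5], [-0.5, 1.0], [0.2, 0.3]]
    examples = [frozen_model([0.7, -0.3], weights) for _ in range(3)]
    learned = learn_concept_embedding(weights, examples)
    print(learned, mean_squared_error(learned, weights, examples))
```

Because only the embedding changes, the rest of the model keeps everything it learned during its original training, which is why a handful of examples can be enough.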

Future of Text-to-Image AI Model

AI art is spreading rapidly through the creative community and pushing it into intellectually and artistically unexplored territory. Although its creative possibilities are still being explored, it has already begun to change the landscape of artistic imagery. Audiences are already embracing machine-generated visuals unlike anything previously seen on a screen. One of the most interesting advances is text-to-image generation, which lets computers produce images in response to text prompts. Artists use AI every day to extend their imaginations. Their interest lies in using the technology to explore ideas, such as inventing imaginary cities, picturing dogs dancing at a disco, or envisioning what the future might hold.

Latest News about Text-to-Image AI Model

- Midjourney 5.2 and Stable Diffusion XL (SDXL) 0.9 are significant updates for creative image generation. Midjourney 5.2 introduces Zoom Out (outpainting), customizable variations, a 1:1 image transformation, and a prompt parser that helps optimize prompts and align them with users’ intentions. These updates improve the user experience and the accuracy of realistic image generation.

- SnapFusion is a text-to-image model that generates images from natural language descriptions in about two seconds on mobile devices. Because it runs on the device, it removes the need for expensive GPUs or cloud-based services, which reduces costs and addresses privacy concerns. The model’s efficiency and performance have been demonstrated in experiments on the MS-COCO dataset.

- Researchers have developed GigaGAN, a text-to-image model that can generate a 4K image in 3.66 seconds and a 512px image in 0.13 seconds, a significant speed improvement over existing models. GigaGAN is based on the GAN framework and scaled to roughly 1 billion parameters. It has a disentangled, continuous, and controllable latent space, which allows style mixing and fine-grained control over the generated image. The same approach can also be used to train an efficient upsampler for real images or for the outputs of other text-to-image models.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Victoria is a writer on a variety of technology topics including Web3.0, AI and cryptocurrencies. Her extensive experience allows her to write insightful articles for the wider audience.
