Explainable Artificial Intelligence (xAI)

What is Explainable Artificial Intelligence (xAI)?

Explainable artificial intelligence (XAI) is a collection of procedures and techniques that enable machine learning algorithms to produce output and results that human users can understand and rely on. Explainable AI can describe an AI model, its expected effects, and any potential biases. It also helps characterize model accuracy, fairness, transparency, and outcomes in AI-powered decision making. When a business decides to put AI models into production, explainable AI is essential to fostering confidence and trust.

Understanding Explainable Artificial Intelligence (xAI)

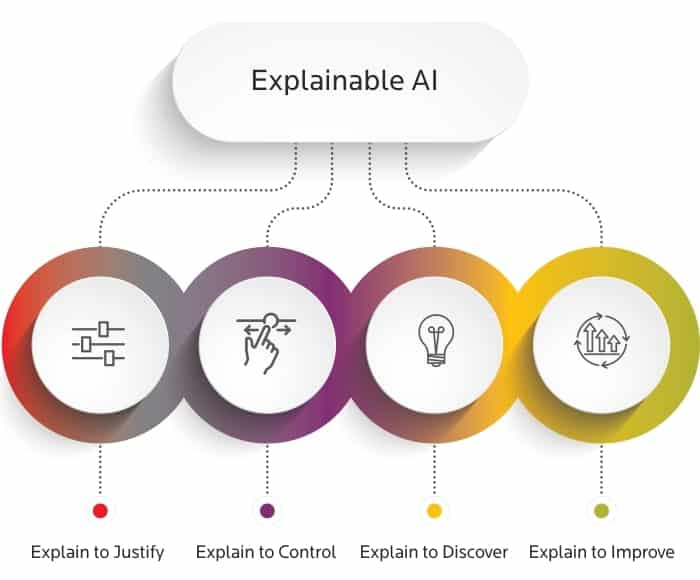

The setup of XAI techniques consists of three main methods:

- Decision understanding – It is important to teach users how to work with AI and why it reaches its decisions, since many people do not trust it.

- Prediction accuracy – For artificial intelligence to be used successfully in daily operations, accuracy is important. Prediction accuracy can be verified by running simulations and comparing the XAI output with the outcomes in the training data set.

- Traceability – Another method for achieving XAI is traceability. It can be achieved by restricting the options available for decision-making and by establishing a smaller number of ML features and rules.
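As a rough illustration of the traceability and prediction-accuracy points above, the sketch below uses a deliberately small rule set over two features and records which rule produced each decision. The loan-screening scenario, feature names, thresholds, and labels are all hypothetical and chosen only for illustration; this is a minimal sketch under those assumptions, not a reference XAI implementation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[dict], bool]
    outcome: str


# Hypothetical rules: a small, fixed rule set keeps every decision traceable.
RULES = [
    Rule("low_income", lambda x: x["income"] < 30_000, "reject"),
    Rule("high_debt", lambda x: x["debt_ratio"] > 0.5, "reject"),
]
DEFAULT_OUTCOME = "approve"


def predict_with_trace(sample: dict) -> tuple[str, list[str]]:
    """Return (decision, trace); the trace lists each rule checked, in order."""
    trace = []
    for rule in RULES:
        fired = rule.condition(sample)
        trace.append(f"{rule.name}: {'fired' if fired else 'skipped'}")
        if fired:
            return rule.outcome, trace
    trace.append("default: no rule fired")
    return DEFAULT_OUTCOME, trace


def accuracy(samples: list[dict], labels: list[str]) -> float:
    """Share of samples whose predicted decision matches the known outcome."""
    correct = sum(predict_with_trace(s)[0] == y for s, y in zip(samples, labels))
    return correct / len(labels)


# Made-up records with known outcomes, standing in for a labelled data set.
samples = [
    {"income": 20_000, "debt_ratio": 0.2},
    {"income": 50_000, "debt_ratio": 0.7},
    {"income": 60_000, "debt_ratio": 0.1},
    {"income": 40_000, "debt_ratio": 0.3},
]
labels = ["reject", "reject", "approve", "reject"]

decision, trace = predict_with_trace(samples[1])
print(decision, trace)
score = accuracy(samples, labels)
print(f"accuracy: {score:.2f}")
```

Because the model has only two rules, the trace is a complete account of why a decision was made, and the accuracy check shows where the rules disagree with the recorded outcomes.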

Explainability tries to address concerns raised by stakeholders regarding AI systems’ decision-making procedures. Explanations may be used by developers and ML practitioners to make sure that requirements for AI systems and ML models are satisfied throughout development, testing, and debugging. End users and other non-technical audiences can benefit from explanations by using them to better understand AI systems and reduce any doubts or worries they may have about the way they operate.

Latest news about Explainable Artificial Intelligence (xAI)

- The AI company xAI has unveiled the “xAI PromptIDE”, an integrated development environment for prompt engineering and interpretability research. The tool offers a Python code editor and a new software development kit (SDK), providing insights into Large Language Models (LLMs). It also offers analytics, automatic prompt saving, versioning, and concurrency features. The xAI PromptIDE is available to early access program members.


About The Author

Victoria is a writer on a variety of technology topics including Web3.0, AI and cryptocurrencies. Her extensive experience allows her to write insightful articles for the wider audience.
