AI Industry’s Potential to Rival National Electricity Consumption

AI’s rapid expansion in 2022 and 2023, driven by the success of OpenAI’s ChatGPT, has raised concerns about electricity consumption and environmental impact. Data centers account for only about 1% of global electricity use, and their consumption may have increased by 6% between 2010 and 2018. This commentary examines AI’s electricity consumption and its potential implications, discussing both pessimistic and optimistic scenarios and cautioning against embracing either extreme.

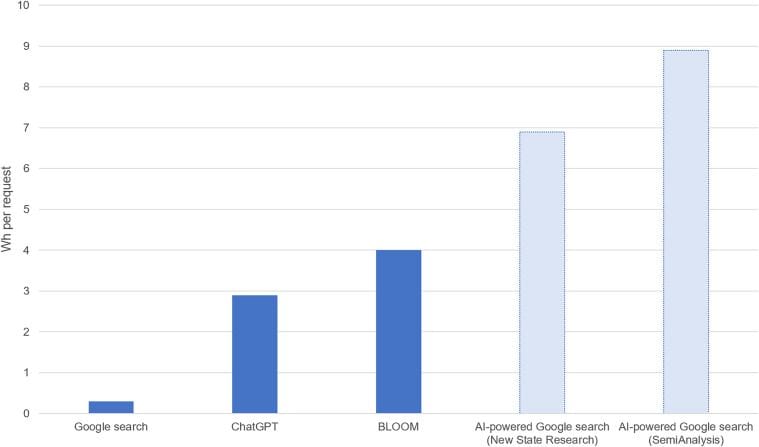

Generative AI tools such as OpenAI’s ChatGPT and DALL-E create new content from natural-language prompts. The training phase, often energy-intensive, involves feeding a model large datasets and adjusting its parameters so that predicted outputs align with target outputs. The inference phase, in which a trained model generates outputs for users, has received little attention in the literature. However, inference may contribute significantly to an AI model’s life-cycle costs: Google has stated that 60% of its AI-related energy consumption stems from inference.

According to researcher Alex de Vries from the School of Business and Economics in Amsterdam, the energy consumption of AI companies could reach staggering proportions by 2027, comparable to entire nations like Argentina, the Netherlands, and Sweden.

De Vries bases his calculations on projected deliveries of AI servers by market leader Nvidia. His estimates indicate a substantial increase, from 100,000 servers in 2023 to 1.5 million servers by 2027.

Assuming these servers operate at full capacity, their electricity consumption would surge from the current 6–9 terawatt-hours (TWh) annually to a staggering 86–134 TWh annually by 2027. For perspective, Sweden consumes 125 TWh of electricity each year.
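The arithmetic behind these ranges can be sketched in a few lines. The article does not state the power draw per server, so the 6.5 kW and 10.2 kW figures below are assumptions (roughly the rated power of Nvidia DGX A100 and DGX H100 class machines), and `annual_twh` is a hypothetical helper written for this illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_twh(servers: int, kw_per_server: float) -> float:
    """Annual electricity use in TWh for servers running at full load all year."""
    kwh = servers * kw_per_server * HOURS_PER_YEAR
    return kwh / 1e9  # 1 TWh = 1e9 kWh


# ASSUMPTION: per-server power draw in kW; not stated in the article.
LOW_KW, HIGH_KW = 6.5, 10.2

for year, servers in [(2023, 100_000), (2027, 1_500_000)]:
    low = annual_twh(servers, LOW_KW)
    high = annual_twh(servers, HIGH_KW)
    print(f"{year}: {low:.1f} to {high:.1f} TWh per year")
```

Under these assumptions the sketch gives about 5.7 to 8.9 TWh for 100,000 servers and about 85.4 to 134.0 TWh for 1.5 million servers, close to the rounded figures quoted above. The result scales linearly with both server count and utilization, so servers running below full capacity would lower the totals proportionally.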

Furthermore, if Google were to switch its entire search service to AI algorithms today, the electricity demand alone would amount to 29.3 TWh annually, equivalent to Ireland’s annual electricity consumption.
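To put the 29.3 TWh figure on a per-query scale, it can be divided by the number of searches handled in a year. The search volume is not given in the article; the 9 billion searches per day used below is an assumption chosen for illustration, and `wh_per_search` is a hypothetical helper.

```python
def wh_per_search(annual_twh: float, searches_per_day: float) -> float:
    """Average electricity per search in watt-hours."""
    annual_wh = annual_twh * 1e12  # 1 TWh = 1e12 Wh
    return annual_wh / (searches_per_day * 365)


# ASSUMPTION: 9 billion searches per day; not stated in the article.
per_search = wh_per_search(annual_twh=29.3, searches_per_day=9e9)
print(f"{per_search:.1f} Wh per search")
```

With that assumed volume, the scenario works out to roughly 9 Wh per search. A different daily search count would change the result in inverse proportion.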

De Vries acknowledges that such a scenario remains improbable, partly because Nvidia currently faces challenges in supplying the required quantity of AI servers. The scarcity of these servers also translates to high costs. For instance, Google’s theoretical shift to an all-AI approach would obliterate the company’s operating margin.

Running AI models is expensive for companies, and effective monetization remains elusive. Paradoxically, costs rise rather than fall as user numbers grow, because every additional query requires more computation. Microsoft is trying to capitalize on the hype around generative AI to build a paying market for such services. However, the company has reportedly lost money on its first generative products: the GitHub Copilot service is said to have lost between $20 and $80 per user per month. To avoid repeating this, Microsoft has decided to sell AI features as paid add-ons to its popular products, which raises their price. Google faces similar difficulties because of high running costs, and both companies charge an additional $30 per user per month for AI features. Zoom is trying to save money by developing its own, simpler models and relying on third-party models only for complex tasks. Adobe and other companies cap the use of neural networks according to the subscription plan. Companies hope that the cost of running AI models will fall over time, but until then they will need to spend hundreds of millions of dollars.

Improvements in hardware efficiency, model architectures, and algorithms could potentially reduce AI-related electricity consumption in the long term. However, such gains could be undone by Jevons’ Paradox, where increasing efficiency leads to increased demand and therefore to a net increase in resource use. Additionally, GPUs formerly used to mine Ethereum could be repurposed for AI-related tasks, a trend dubbed “mining 2.0,” which could shift 16.1 TWh of annual electricity consumption to AI.
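A toy calculation shows how Jevons’ Paradox can turn an efficiency gain into higher total consumption. The factors below are made-up numbers for illustration only, not estimates from the article, and `net_energy_ratio` is a hypothetical helper.

```python
def net_energy_ratio(efficiency_gain: float, demand_growth: float) -> float:
    """Ratio of new to old total energy use.

    efficiency_gain: factor by which energy per task falls (2.0 = half the energy).
    demand_growth:   factor by which the number of tasks grows.
    """
    return demand_growth / efficiency_gain


# ILLUSTRATIVE NUMBERS ONLY: efficiency doubles in both cases.
print(net_energy_ratio(2.0, 1.5))  # demand grows 1.5x: total use falls to 0.75x
print(net_energy_ratio(2.0, 3.0))  # demand grows 3x: total use rises to 1.5x
```

Total consumption falls only when demand grows more slowly than efficiency improves; once demand growth outpaces the efficiency gain, the ratio exceeds 1 and overall electricity use goes up.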

Future AI-related electricity consumption is uncertain, but it could rise sharply if AI is built into heavily used applications like Google Search. Resource constraints may limit that growth, while efforts to improve AI efficiency may trigger a rebound effect that increases demand for AI. Developers should focus not only on optimizing AI but also on weighing whether it is needed in the first place, and regulators could consider environmental disclosure requirements.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
