Gen-1: AI Generates New Videos From Existing Ones by Combining Prompts and Images

In Brief

Gen-1 is a neural network that can generate new videos from existing ones by combining prompts and images.

It could also be used to create entirely new videos from scratch.

The ability to generate new videos from existing ones has a number of potential applications.

RunwayML, an artificial intelligence startup, has announced a new product called Gen-1, a neural network that can generate new videos from existing ones by combining prompts and images. For years, AI-driven restyling of video has mostly meant style transfer, which is the process of taking an image and applying the style of another image to it. This is how we get those trippy deep-learning style transfer videos where, for example, a landscape is rendered in the style of Van Gogh’s Starry Night.

Now, with Gen-1, neural networks can do more than just style transfer. The model can generate new videos from existing footage, using prompts and images as input. This opens up a whole new range of possibilities for AI-created videos. Right now, the videos generated by Gen-1 are short and simple. But as the technology develops, we can expect to see more complex and realistic videos being generated by AI.

What is Gen-1?

Text-guided generative diffusion models have unlocked powerful image creation and editing tools. These models have also been extended to video generation, but current methods for editing the content of existing footage while preserving its structure either require expensive retraining for every input or rely on error-prone propagation of image edits from frame to frame.

The researchers describe a structure- and content-guided video diffusion model that edits videos based on either textual or visual descriptions of the desired result. When structure and content are not sufficiently disentangled, the content edits a user asks for can conflict with the structure representation of the source video. The researchers show that training on monocular depth estimates with varying levels of detail gives control over how closely the output follows the structure of the source and how closely it follows the requested content.
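To make the idea of depth estimates "with varying levels of detail" more concrete, here is a minimal sketch of how a depth map could be coarsened before it is used as a structure signal. This is an illustration only and not Runway's implementation: the helper name `coarsen_depth`, the block-averaging approach, and the toy depth values are all assumptions made for this example.

```python
from typing import List

DepthMap = List[List[float]]


def coarsen_depth(depth: DepthMap, block: int) -> DepthMap:
    """Average a depth map over block x block tiles (hypothetical helper).

    A larger block keeps less structural detail from the source frame,
    which in principle leaves more freedom to the content prompt.
    """
    if block < 1:
        raise ValueError("block must be >= 1")
    height, width = len(depth), len(depth[0])
    out = [[0.0] * width for _ in range(height)]
    for top in range(0, height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            # Mean depth of the tile replaces every pixel inside it.
            mean = sum(depth[r][c] for r in rows for c in cols) / (len(rows) * len(cols))
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out


# Toy 4x4 depth map (assumed values): a near object on the left,
# a far background on the right, with two small surface details.
depth = [
    [1.0, 1.0, 5.0, 5.0],
    [1.0, 3.0, 5.0, 5.0],
    [1.0, 1.0, 5.0, 7.0],
    [1.0, 1.0, 5.0, 5.0],
]

fine = coarsen_depth(depth, 1)    # full detail: identical to the input
coarse = coarsen_depth(depth, 2)  # small details are averaged away
```

With `block=1` the depth map is unchanged, so the structure of the source frame is fully preserved. With `block=2` the near/far layout survives while the small surface details are smoothed out, which is the kind of trade-off between structure and content fidelity the paragraph above describes.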

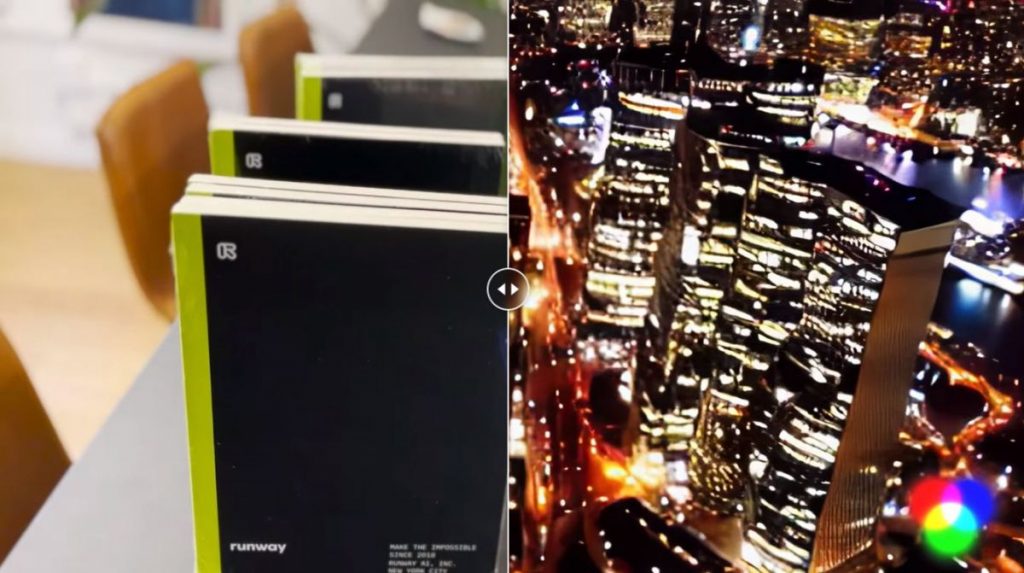

Synthesize new videos in a realistic and consistent manner by applying the composition and style of an image or text prompt to the structure of your source video. It’s like filming something new without actually filming anything.

Transferring the style of an image or prompt to every frame of a video can be a great way to unify your project, give it an overarching visual theme, and create consistency.

Through the use of software and creative design, mockups can be transformed into aesthetically pleasing and interactive renders that bring the user’s vision to life.

With video editing features, it’s easy to isolate parts of a video and enhance them with text prompts.

Applying an input image or prompt to untextured renders can greatly improve their realism, bringing the 3D models to life.

Runway Research says it is dedicated to building multimodal AI systems that enable new forms of creativity, and it describes Gen-1 as another pivotal step forward in that mission. Creatives who want to experiment with the future of storytelling can request access from the company.

Gen-1 can also be used to create entirely new videos from scratch. Possible uses include creating new versions of existing films or creating new films altogether. This news is sure to excite filmmakers and other creatives who are always looking for new ways to push the boundaries of their art. With Gen-1, they now have a powerful new tool at their disposal.

The ability to generate new videos from existing ones has a number of potential applications. For example, it could be used to create alternative versions of scenes or shots that would be too expensive or logistically difficult to film.

You can apply for access to the model while it is in beta testing.



About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
