AI model MinD-Vis can read people’s minds based on brain activity

In Brief

AI model can interpret what a person sees based on brain activity – here’s how

A new AI model called MinD-Vis can interpret what a person is seeing based on brain activity. The model works by interpreting the ‘mnemonic invariants’ that are present in the brain when a person sees an object. These mnemonic invariants are patterns that are conserved across different memories of the same object.

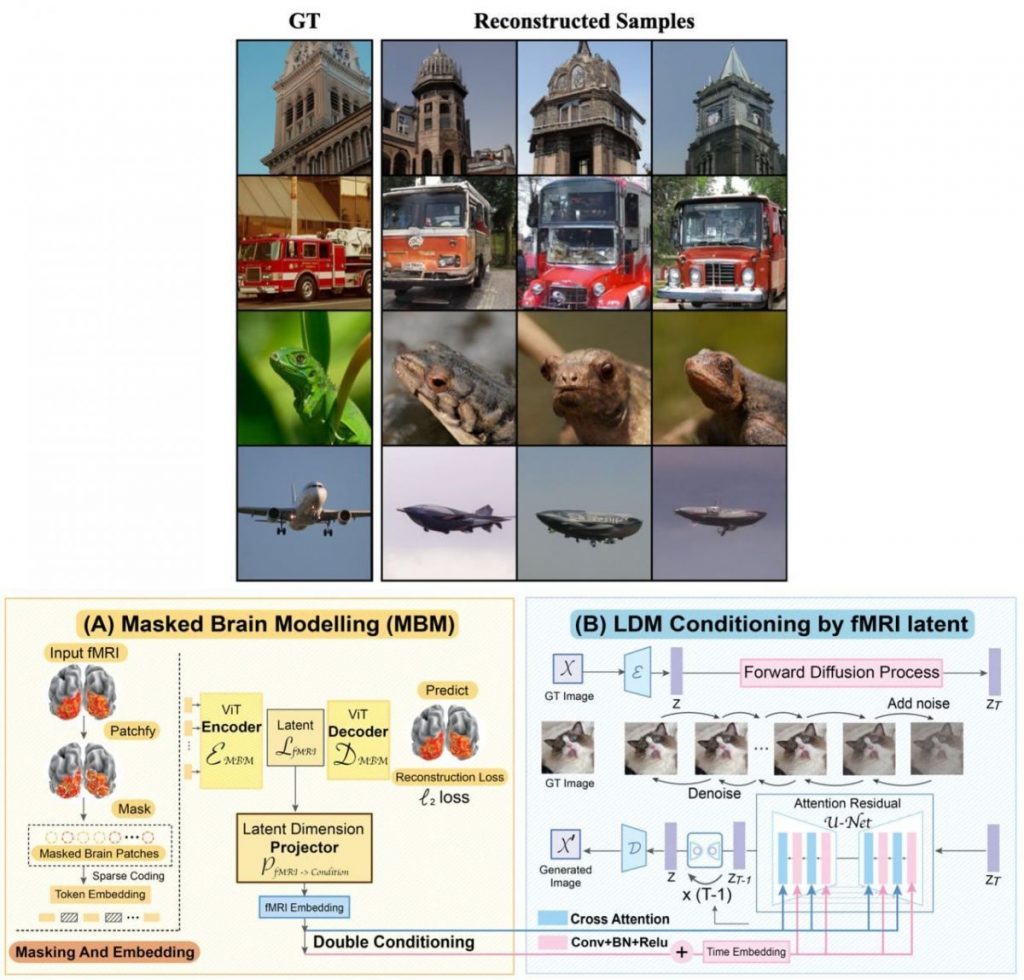

To obtain global embeddings of brain activity, the authors first trained a self-supervised model that is shared across different people. They then conditioned a pre-trained latent diffusion model on these representations through cross-attention. After brief fine-tuning on about 1.5k image-fMRI pairs, the model could reconstruct images that semantically match what a person was looking at.
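The cross-attention step mentioned above can be illustrated with a minimal, pure-Python sketch. It is not the authors' implementation: the names `image_latents` and `fmri_tokens` are hypothetical, the vectors are toy values, and the learned query/key/value projections that a real latent diffusion model would use are omitted for brevity.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query mixes the values by key similarity."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        weights = softmax([dot(q, k) * scale for k in keys])
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Toy data (assumption): queries stand in for image latents,
# keys/values stand in for fMRI embedding tokens.
image_latents = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
fmri_tokens = [[1.0, 0.0], [0.0, 1.0]]

conditioned = cross_attention(image_latents, fmri_tokens, fmri_tokens)
```

Each output row is a weighted blend of the fMRI tokens, so the image-side features are pulled toward whichever brain-derived tokens they resemble most.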

Decoding visual stimuli from brain recordings aims to deepen our understanding of the human visual system and to lay the groundwork for bridging human and computer vision through brain-computer interfaces. However, the complexity of the underlying representations of brain signals and the scarcity of data annotations make it difficult to reconstruct accurate, high-quality images from brain recordings.

First, the authors learn an effective self-supervised representation of fMRI data using masked modeling in a large latent space, inspired by the sparse coding of information in the primary visual cortex. Then, by adding double conditioning to a latent diffusion model, they show that MinD-Vis can reconstruct highly plausible images with semantically matching details from brain recordings using very few paired annotations.
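The masked-modeling idea described above can be sketched roughly as follows. This is an illustrative toy, not the MinD-Vis code: the patch size, the 75% mask ratio, the random "voxel" vector, and the zero-predicting stand-in model are all assumptions chosen for the example.

```python
import random

def split_into_patches(signal, patch_size):
    """Split a 1-D list of values into equal-length patches (tail is zero-padded)."""
    padded = list(signal)
    remainder = len(padded) % patch_size
    if remainder:
        padded.extend([0.0] * (patch_size - remainder))
    return [padded[i:i + patch_size] for i in range(0, len(padded), patch_size)]

def random_mask(patches, mask_ratio, rng):
    """Pick a random subset of patch indices to hide; return (visible, masked)."""
    n = len(patches)
    n_masked = int(round(n * mask_ratio))
    order = list(range(n))
    rng.shuffle(order)
    return sorted(order[n_masked:]), sorted(order[:n_masked])

def reconstruction_loss(original, predicted, masked):
    """Mean squared error computed on the masked patches only."""
    total, count = 0.0, 0
    for idx in masked:
        for a, b in zip(original[idx], predicted[idx]):
            total += (a - b) ** 2
            count += 1
    return total / count if count else 0.0

rng = random.Random(0)
signal = [rng.gauss(0.0, 1.0) for _ in range(64)]  # toy vector, not real fMRI
patches = split_into_patches(signal, patch_size=4)
visible, masked = random_mask(patches, mask_ratio=0.75, rng=rng)

# Stand-in "model" (assumption): predicts zeros for every patch.
predicted = [[0.0] * 4 for _ in patches]
loss = reconstruction_loss(patches, predicted, masked)
```

In a real setup, an encoder would see only the `visible` patches and a decoder would be trained to lower `loss` on the hidden ones, which is what pushes the model to learn useful structure without labels.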

Researchers claimed

According to the experimental results, the approach outperformed the state of the art in semantic mapping (100-way semantic classification) and generation quality (FID) by 66% and 41%, respectively. The model was benchmarked both qualitatively and quantitatively, and a thorough ablation study was carried out to evaluate the framework.
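For readers unfamiliar with n-way classification scores, here is a minimal sketch of one common way to compute them. Whether it matches the paper's exact protocol is an assumption; the function names, class count, and scores below are hypothetical. The idea is that a classifier scores a generated image, and a trial counts as a hit if the true class beats n-1 randomly chosen other classes.

```python
import random

def n_way_top1(scores, true_class, n, rng):
    """One trial: does the true class score highest among n-1 random other classes?"""
    others = [c for c in range(len(scores)) if c != true_class]
    candidates = rng.sample(others, n - 1) + [true_class]
    best = max(candidates, key=lambda c: scores[c])
    return best == true_class

def n_way_accuracy(scores, true_class, n, trials, seed=0):
    """Fraction of trials in which the true class wins the n-way comparison."""
    rng = random.Random(seed)
    hits = sum(n_way_top1(scores, true_class, n, rng) for _ in range(trials))
    return hits / trials

# Toy classifier scores over 200 hypothetical classes (not real model output).
scores = [0.0005 * (c + 1) for c in range(200)]

scores_good = list(scores)
scores_good[5] = 0.9   # true class clearly scores highest
acc_high = n_way_accuracy(scores_good, true_class=5, n=100, trials=50)

scores_bad = list(scores)
scores_bad[5] = 0.0    # true class scores lowest
acc_low = n_way_accuracy(scores_bad, true_class=5, n=100, trials=50)
```

A higher n makes the test harder, since the true class has to beat more distractors in every trial.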

The training data, the code, and the model weights are all available to the public on request.

The researchers believe that the model could be used to develop prosthetics for people who are blind or have low vision. It could also be used to help people with memory disorders, such as Alzheimer’s disease.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
