Artist creates an anti-theft script to protect art, uses the same watermark as AI generators

In Brief

AI generators use a module to embed a hidden watermark into the images they produce.

AI bots can detect it when they search the internet for photographs to add to their database.

By applying the same watermark the AI generators use, Edit Ballai’s script adds anti-theft protection.

The struggle between humans and machines intensifies. To combat AI, people arm themselves with AI’s own weapons.

The news was posted on Artstation by a user going by the handle Eballai.

The artist wrote a Python script that adds an invisible watermark to PNG images, making them “tasteless” to the swarms of AI bots that crawl the internet for days looking for new images to feed voracious AI models. The news stirred up excitement in the internet community once again.

How does it work?

AI generators use a Python module to embed a hidden watermark into the images they produce. Because the watermark is encoded within the image data rather than drawn on top of it, it is invisible to the human eye. AI bots, however, detect it immediately when they search the internet for images to add to their databases or training sets. The watermark lets them screen out AI-generated images so that those images are not used to develop new AI models.
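To make the idea of a mark hidden inside the pixel data more concrete, here is a minimal Python sketch. It is a deliberately simple stand-in: it hides a short payload in the least-significant bits of pixel values, whereas Stable Diffusion's script relies on the third-party invisible-watermark package and a frequency-domain (DWT-DCT) method. The flat list of 0-255 channel values, the function names `payload_bits`, `embed` and `extract`, and the `b"SDV2"` payload are illustrative assumptions, not the artist's script.

```python
# Toy invisible watermark: hide a short payload in the least-significant bit
# (LSB) of each pixel channel value. This is a simplified stand-in, NOT the
# DWT-DCT method used by Stable Diffusion's watermarking script.


def payload_bits(payload: bytes) -> list[int]:
    """Split the payload into bits, most-significant bit of each byte first."""
    return [(byte >> shift) & 1 for byte in payload for shift in range(7, -1, -1)]


def embed(pixels: list[int], payload: bytes) -> list[int]:
    """Return a copy of `pixels` (flat 0-255 channel values) carrying `payload`."""
    bits = payload_bits(payload)
    if len(bits) > len(pixels):
        raise ValueError("image too small to hold the payload")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the payload bit: the value
        # changes by at most 1, far below what the eye can notice.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract(pixels: list[int], length: int) -> bytes:
    """Read `length` bytes back out of the lowest bits of `pixels`."""
    out = bytearray()
    for start in range(0, length * 8, 8):
        byte = 0
        for value in pixels[start:start + 8]:
            byte = (byte << 1) | (value & 1)
        out.append(byte)
    return bytes(out)


original = [200, 13, 77, 254, 0, 128, 91, 45] * 8  # 64 channel values
marked = embed(original, b"SDV2")                     # placeholder payload
print(extract(marked, 4))                             # b'SDV2'
print(all(abs(a - b) <= 1 for a, b in zip(original, marked)))  # True
```

Each channel value moves by at most 1, which is why a viewer sees nothing while software that knows where to look reads the payload back. An LSB mark is also fragile (re-saving as JPEG or resizing usually wipes it), which is one reason real tools use frequency-domain methods instead.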

To safeguard our future artwork, we developed this watermark generator. It creates an anti-theft safeguard by applying the same watermark the AI generators use. Because our watermarked images can’t be distinguished from AI-generated ones, AI bots that stumble across them while browsing the web will notice the watermark, assume they are looking at another AI-generated image, and skip them.

Do the pics that the AI generators create have a secret watermark?

According to specialists, all image generators, such as Midjourney, Stable Diffusion, DALL-E 2, WOMBO, and many others, watermark their images. AI crawlers that trawl the internet for images to feed the algorithms avoid images carrying the watermark, so these “contaminated” images are left out of the datasets.
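The filtering step the specialists describe can be sketched as follows. This is a hypothetical illustration: the article does not say how any real crawler works, so the `decode` callback, the `toy_decode` stand-in, the `filter_for_training` name, and the tag set are all assumptions. Under that model it also shows why the artist's trick would work: an image carrying the same tag as generator output is dropped exactly like the real thing.

```python
from typing import Callable, Iterable, Optional

# Hypothetical watermark tags a crawler might treat as "AI-generated".
# Placeholders for illustration; not taken from any real crawler.
KNOWN_AI_TAGS = frozenset({b"SDV2"})


def filter_for_training(
    images: Iterable[tuple[str, bytes]],
    decode: Callable[[bytes], Optional[bytes]],
    known_tags: frozenset[bytes] = KNOWN_AI_TAGS,
) -> list[str]:
    """Return the URLs of images whose decoded tag is not a known AI tag."""
    kept = []
    for url, data in images:
        tag = decode(data)  # None means no watermark was found
        if tag in known_tags:
            continue  # looks AI-generated: leave it out of the dataset
        kept.append(url)
    return kept


def toy_decode(data: bytes) -> Optional[bytes]:
    """Stand-in decoder: treats the 4 bytes after a b"WM:" prefix as the tag."""
    return data[3:7] if data.startswith(b"WM:") else None


images = [
    ("generated.png", b"WM:SDV2 ..."),  # generator output
    ("artwork.png", b"WM:SDV2 ..."),    # artist's image with the same mark
    ("photo.png", b"plain pixels"),     # unmarked image
]
print(filter_for_training(images, toy_decode))  # ['photo.png']
```

Passing the decoder in as a function keeps the filtering logic independent of whichever watermarking scheme a crawler actually checks for.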

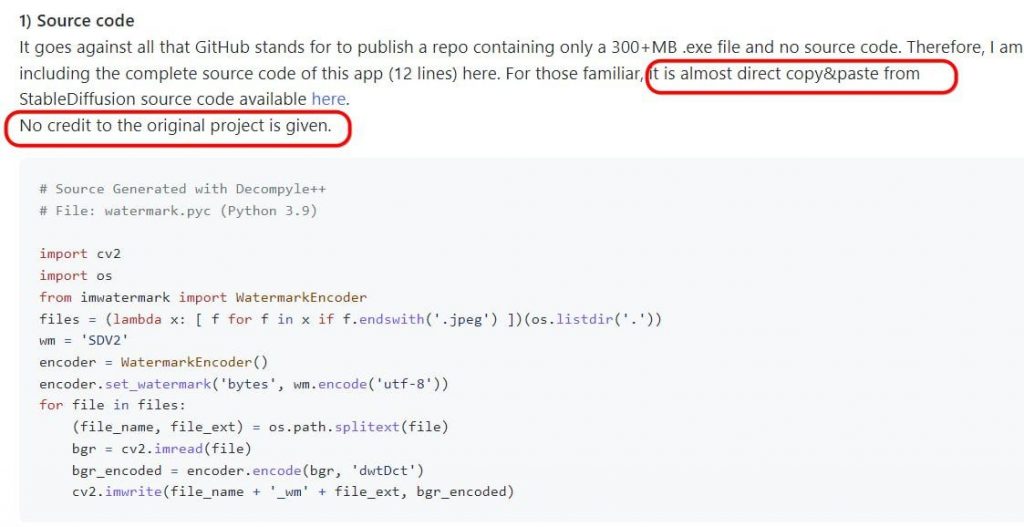

There is more to Eballai’s story. As soon as the post became public, coders flocked to GitHub to examine the code. They found that it consists of 12 lines of Python that are nearly a copy of the Stable Diffusion 2 code that adds a watermark to generated images.

“I just find it funny that this is an artist rights project, but this developer is the first to just copy and paste the code without giving proper credit,” someone commented on GitHub.

Eballai, AI’s most ferocious foe, turned out to be the New Zealand artist Edit Ballai. She has since deleted all of her images from Artstation. She has also written an article about watermarks.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
