Phi-1, A Compact Language Model, Outpaces GPT in Efficient Code Generation

In Brief

Researchers have developed Phi-1, a compact language model for efficient code generation with just 1.3 billion parameters and a comparatively small training dataset.

Despite its smaller size, it achieves impressive results, with a pass@1 accuracy of 50.6% on HumanEval and 55.5% on MBPP benchmarks.

Phi-1 is a compact yet powerful model designed specifically for code generation. Unlike its much larger predecessors, it performs strongly on coding and related tasks while using significantly fewer parameters and a smaller training dataset.


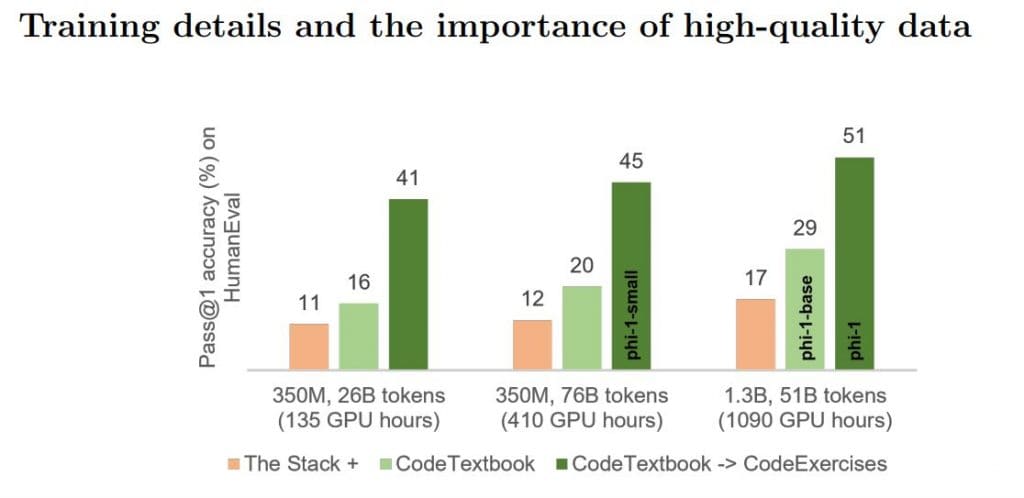

Phi-1, a Transformer-based model, has just 1.3 billion parameters, a fraction of the size of competing models. Remarkably, it was trained in only four days on eight A100 GPUs. Its training data combined carefully curated “textbook quality” data sourced from the web (6 billion tokens) with synthetic textbooks and exercises generated by GPT-3.5 (1 billion tokens).
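A quick back-of-the-envelope check puts these figures in perspective. The sketch below uses only the numbers quoted above; the constant names are our own, and it assumes each dataset is counted once (it says nothing about how many passes training made over the data) and that all eight GPUs were busy for the full four days.

```python
# Figures as reported in the article; everything below is plain arithmetic.
WEB_TOKENS = 6e9        # curated "textbook quality" web data
SYNTHETIC_TOKENS = 1e9  # GPT-3.5-generated textbooks and exercises
NUM_GPUS = 8            # A100 GPUs
TRAINING_DAYS = 4

# Size of the combined dataset, counting each token once.
total_tokens = WEB_TOKENS + SYNTHETIC_TOKENS

# Share of the mix contributed by each source.
web_share = WEB_TOKENS / total_tokens
synthetic_share = SYNTHETIC_TOKENS / total_tokens

# Wall-clock compute, assuming every GPU ran for the whole period.
gpu_hours = NUM_GPUS * TRAINING_DAYS * 24

print(f"Total unique tokens: {total_tokens / 1e9:.0f}B")
print(f"Web share: {web_share:.1%}, synthetic share: {synthetic_share:.1%}")
print(f"Approximate compute: {gpu_hours} A100 GPU-hours")
```

Run as is, it reports 7 billion tokens in total, an 85.7% / 14.3% split between web and synthetic data, and 768 A100 GPU-hours.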

Despite its smaller scale, Phi-1 achieves impressive results, with a pass@1 accuracy of 50.6% on HumanEval and 55.5% on MBPP. It also exhibits surprising emergent properties compared with Phi-1-base, the same model before finetuning, and Phi-1-small, a smaller 350-million-parameter model trained with the same pipeline. Even Phi-1-small achieves a commendable 45% accuracy on HumanEval.
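For readers unfamiliar with the metric, pass@k is the probability that at least one of k generated solutions passes a problem's unit tests. The sketch below implements the widely used unbiased estimator introduced alongside HumanEval; the article does not say exactly how Phi-1's scores were sampled, so treat this as an illustration of the metric, not the authors' evaluation code. The function names and toy results are hypothetical.

```python
from math import comb
from statistics import mean


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of completions sampled for the problem
    c: how many of those completions passed the unit tests
    k: the number of attempts being scored
    """
    if n - c < k:
        # Every size-k subset must contain at least one passing sample.
        return 1.0
    # 1 - P(all k drawn samples fail), drawing without replacement.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average the per-problem estimates over a whole benchmark."""
    return mean(pass_at_k(n, c, k) for n, c in results)


# Hypothetical results: (samples drawn, samples passing) for three problems.
toy_results = [(10, 10), (10, 3), (10, 0)]
print(benchmark_pass_at_k(toy_results, k=1))
```

With k = 1 the estimate reduces to c / n for each problem, so with a single greedy sample per problem pass@1 is simply the fraction of problems solved. On that reading, 50.6% on HumanEval's 164 problems corresponds to roughly 83 solved problems.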

The researchers attribute Phi-1's success to the high quality of its training data. Just as a comprehensive, well-crafted textbook helps students master a new subject, they focused on crafting “textbook quality” data so that the language model would learn more efficiently. The result is a model that surpasses most open-source models on coding benchmarks such as HumanEval and MBPP, despite its smaller model size and dataset volume.
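The article does not explain how “textbook quality” was judged. Purely as an illustration of the filtering idea, the sketch below scores Python snippets with a hypothetical heuristic (the share of functions and classes that carry a docstring) and keeps those above a hypothetical threshold. The names `textbook_score` and `filter_corpus`, the heuristic, and the 0.5 cutoff are our assumptions, not part of the Phi-1 work; a learned quality classifier would be the more typical choice in practice.

```python
import ast


def textbook_score(source: str) -> float:
    """Hypothetical quality score in [0, 1]: share of documented defs.

    Snippets that fail to parse, or that define no functions or
    classes, score 0.0.
    """
    try:
        tree = ast.parse(source)
    except SyntaxError:
        return 0.0
    defs = [
        node
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef))
    ]
    if not defs:
        return 0.0
    documented = sum(1 for node in defs if ast.get_docstring(node))
    return documented / len(defs)


def filter_corpus(snippets: list[str], threshold: float = 0.5) -> list[str]:
    """Keep only snippets whose hypothetical score meets the threshold."""
    return [s for s in snippets if textbook_score(s) >= threshold]


corpus = [
    'def area(r):\n    """Return the area of a circle of radius r."""\n    return 3.14159 * r * r\n',
    "x=1;y=2\nprint(x+y)\n",  # runs, but has no documented structure
    "def broken(:\n",         # not valid Python
]
kept = filter_corpus(corpus)
print(len(kept))
```

Only the documented `area` function survives here; the point is the shape of the pipeline (score every sample, keep the best), not the particular scoring rule.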

However, it is important to note some limitations of Phi-1 compared to larger models. Firstly, Phi-1 specializes in Python coding and lacks the versatility of multi-language models. Additionally, it lacks domain-specific knowledge found in larger models, such as programming with specific APIs or using less common packages. Finally, due to the structured nature of the datasets and the lack of diversity in language and style, Phi-1 is less robust to stylistic variations or errors in the prompt.

The researchers acknowledge these limitations and believe further work can address each of them. For instance, they propose using GPT-4 instead of GPT-3.5 to generate the synthetic data, since they observed a high error rate in GPT-3.5's output. Despite these errors, Phi-1 demonstrates remarkable coding proficiency, echoing an earlier study in which a language model trained on data with a 100% error rate still produced correct answers.



About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract an audience of over a million users every month. He is an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to succeed in the ever-changing landscape of the internet.
