Meta’s Llama 3.1 Unleashed: How This Open-Source AI Titan Could Dethrone ChatGPT and Reshape the Future of Artificial Intelligence

In Brief

Llama 3.1, an open-source AI model, has been praised for its superior performance in tests, potentially marking a significant shift in the AI sector.

Meta claims that Llama 3.1, an open-source AI model, outperforms leading proprietary models on many important benchmarks. The release might tip the scales in favor of open-source development and could represent a major turning point for the AI sector.

The Flagship Model Has 405 Billion Parameters

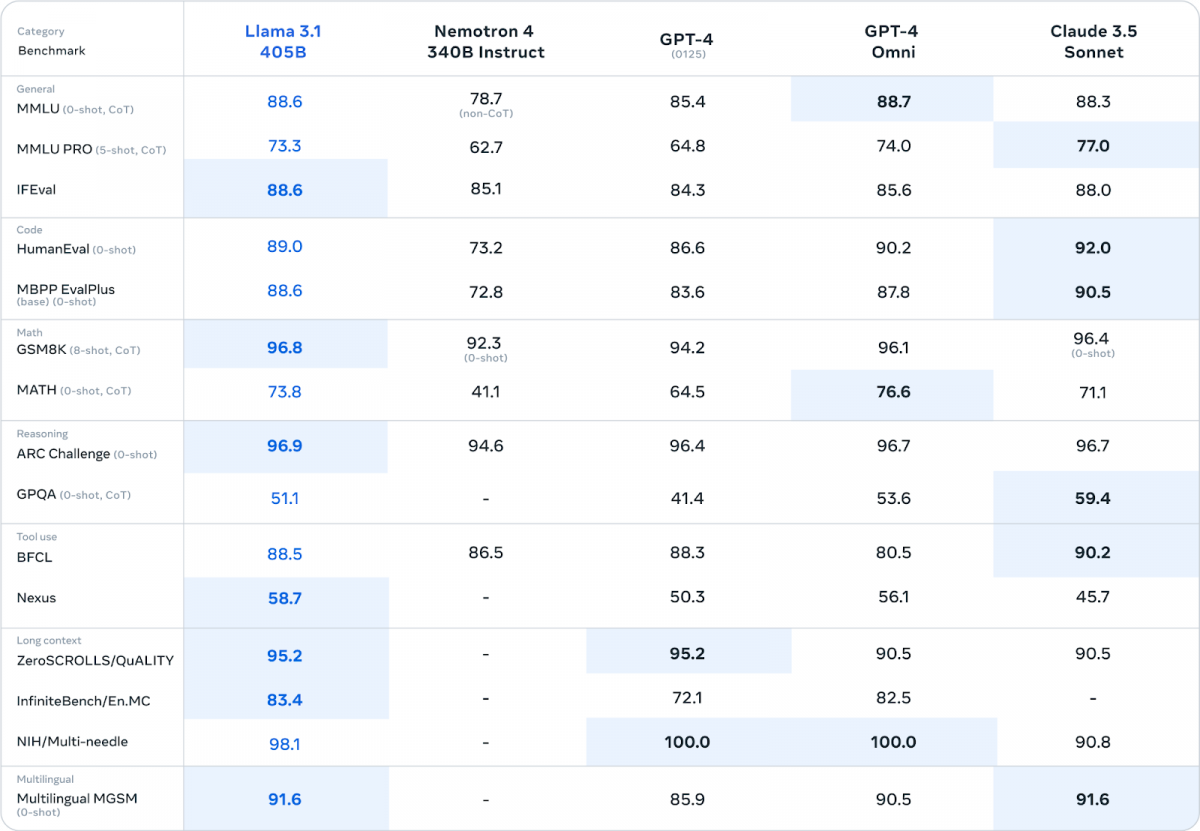

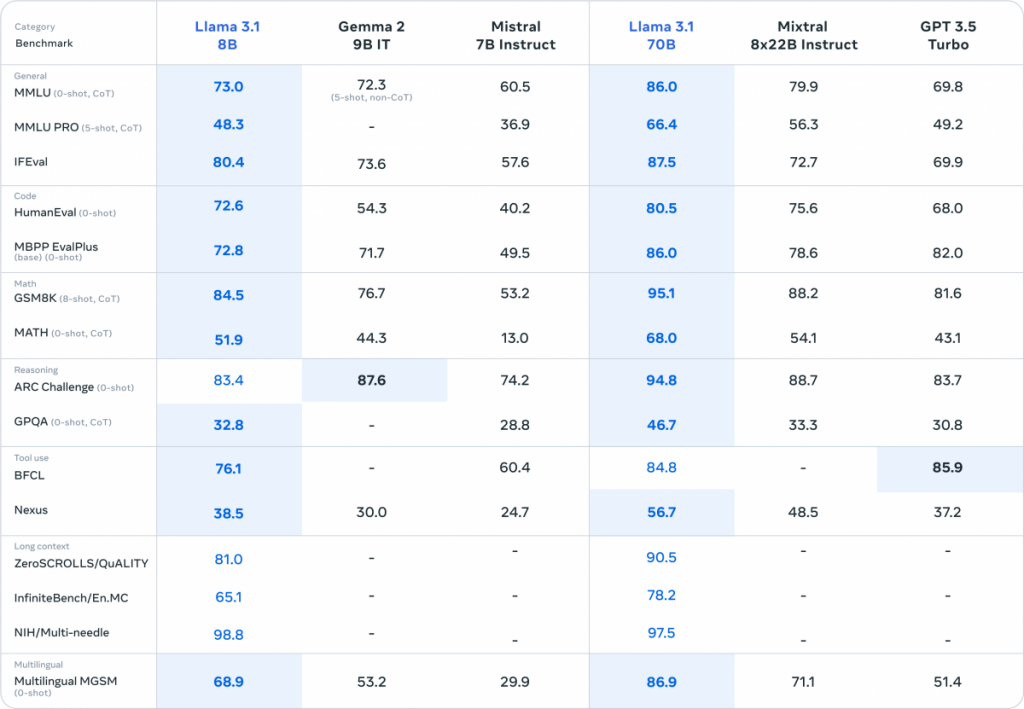

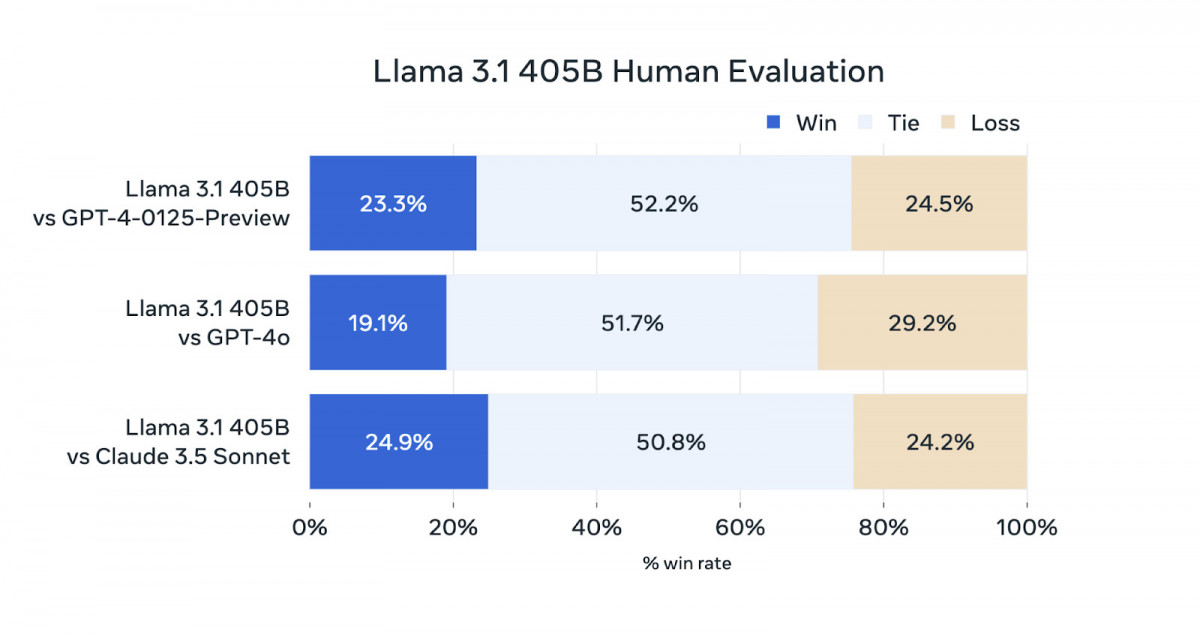

The Llama 3.1 family includes models of several sizes. The flagship 405-billion-parameter version is the largest and most capable openly available foundation model to date. According to Meta, it performs on par with or better than closed-source models such as GPT-4o and Claude 3.5 Sonnet on a variety of tasks.

Meta CEO Mark Zuckerberg has voiced his confidence in the model and forecast that by the end of the year Meta AI, which is powered by Llama 3.1, will surpass ChatGPT as the most widely used AI assistant. This bold claim underscores Meta’s commitment to open-source AI research.

Photo: Meta

Llama 3.1’s development required a significant financial investment. Meta used more than 16,000 of Nvidia’s flagship H100 GPUs for training, and industry insiders estimate the total cost at hundreds of millions of dollars. Despite this substantial expenditure, Meta is making the model openly available to researchers and developers, subject to specific usage requirements.

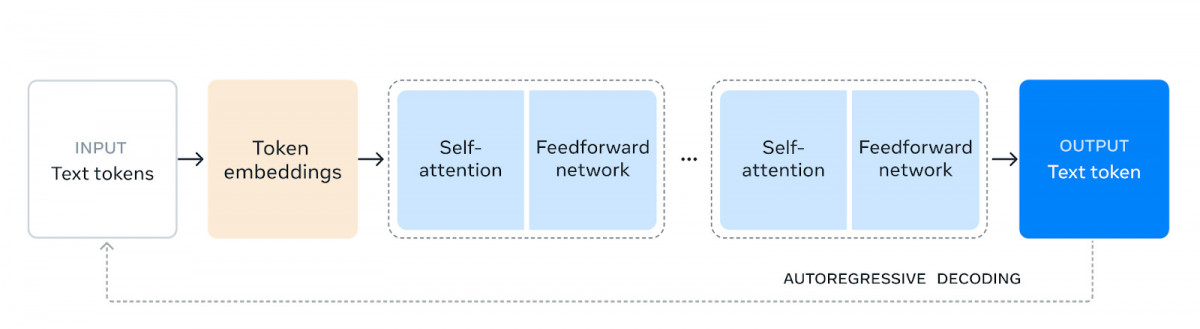

Photo: Meta

Enhanced Capabilities: Multilingual Support and Extended Context

Compared to its predecessors, Llama 3.1 brings a number of noteworthy enhancements. The model supports a 128K-token context length, so it can analyze and produce longer text sequences. With support for eight languages, including French, German, Hindi, Italian, and Spanish, it also offers improved multilingual capabilities. These features make Llama 3.1 suitable for a variety of tasks, such as coding assistants, multilingual conversational bots, and long-form text summarization.
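
As a rough illustration of what a 128K-token window means in practice, the Python sketch below estimates whether a document would fit and splits it into pieces if not. It is only a back-of-the-envelope aid. The reading of "128K" as 131,072 tokens, the ratio of four characters per token, the output reserve, and all function names are assumptions made for this example, not part of Meta's tooling. Real token counts depend on the model's tokenizer.

```python
# Rough context-budget check. All constants are illustrative assumptions.
CONTEXT_TOKENS = 128 * 1024   # "128K" read as 131,072 tokens (assumption)
CHARS_PER_TOKEN = 4           # crude heuristic for English text, not a tokenizer


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Estimate the token count by ceiling-dividing the character count."""
    return -(-len(text) // chars_per_token)


def fits_in_context(text: str, reserved_for_output: int = 2048) -> bool:
    """Return True if the estimated prompt still leaves room for a reply."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_TOKENS


def split_into_chunks(text: str, max_tokens: int) -> list[str]:
    """Split text into pieces whose estimated size is at most max_tokens."""
    step = max_tokens * CHARS_PER_TOKEN
    return [text[i:i + step] for i in range(0, len(text), step)]
```

Under these assumptions, a text of about 400,000 characters would be estimated at 100,000 tokens and would fit, while one of 600,000 characters would not and would need to be split or summarized in stages.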

Meta’s release of Llama 3.1 as an open-source model is part of a larger plan to democratize AI technologies. Meta provides the model weights for download, enabling developers to fully customize the model for their own requirements and use cases. This approach contrasts with the closed-source models offered by organizations such as OpenAI and Anthropic, which typically provide access only through APIs.

Llama 3.1’s open-source design has important ramifications for the AI community. Researchers and developers can study, modify, and extend the model, which may spur further innovation in the sector. Meta contends that this strategy will advance AI models faster than closed-source alternatives.

Meta has worked with over 25 partners, including major cloud providers and AI infrastructure companies, to support the deployment and use of Llama 3.1. By offering a range of deployment options and optimizations, these partnerships aim to make it easier for developers to work with the model.

Along with Llama 3.1, Meta is also releasing new tools and components. These include Prompt Guard, a prompt injection filter, and Llama Guard 3, a multilingual safety model. They are part of Meta’s effort to support responsible AI development and help reduce the risks that could arise from using large language models.

Photo: Meta

Meta’s Vision: Democratizing AI Through Open Source

Llama 3.1 arrives at a time of fierce competition in the AI industry. While companies such as Anthropic and OpenAI have attracted a lot of attention for their closed-source approaches, Meta’s open-source strategy reflects a different philosophy. The company contends that open-source AI ultimately leads to broader access to AI technology, better safety practices, and more innovation.

But Llama 3.1’s open-source design also raises concerns about possible misuse. Meta has put a number of safeguards in place, such as a license under which companies with very large user bases must obtain Meta’s approval before using the model. To detect and reduce potential risks, the company has also carried out extensive testing and red teaming.

It is unclear how Llama 3.1 will affect the field of artificial intelligence. If its performance claims are independently confirmed, it has the potential to change the industry’s power dynamics. Furthermore, the model’s open-source design may encourage researchers and developers to work on improving it, which might result in a faster rate of progress than with closed-source models.

The release of Llama 3.1 is also part of Meta’s broader AI strategy, which involves building AI features into all of its platforms and products. The company is rolling out features powered by Llama 3.1 on Facebook, Instagram, WhatsApp, and other platforms in its ecosystem. Meta is also integrating AI into its augmented and virtual reality products, including the Ray-Ban smart glasses and the Quest headset.

Llama 3.1 is a result of Meta’s substantial investment in AI research and development. The company believes AI will be essential to its future success, especially as it develops its metaverse vision. By leading open-source AI development, Meta wants to establish itself as a major force in shaping the direction of artificial intelligence.

Another Innovation by Meta Is Set to Be Unveiled This Year

Meta’s CEO has revealed that the company plans to release its second set of augmented reality glasses later this year. A blurry image offers a first look at the new product: a bulky pair of glasses that appear to fold partway along each arm, close to the lens.

The report speculates that the next generation of Meta glasses will be able to perform additional augmented reality tasks, such as using standard projection technology to turn the right lens into a heads-up display. At around 70 grams, the glasses might weigh nearly twice as much as a regular pair of sunglasses.

Photo: Threads, mkarolian

Meta is reportedly quite unhappy about the branding for its next major venture into mixed reality. It remains to be seen whether the next generation of eyewear will continue to carry the prominent Ray-Ban logo.

According to two recent reports, Google wants its share of the glasses market and is attempting to displace Meta as EssilorLuxottica’s partner. Major news outlets reported last week that Meta may seek to spend millions of dollars on a 5% stake in the dominant eyewear company, although no agreement has been signed yet.

If Meta’s augmented reality glasses do resemble the bulky frames shown in the blurry Threads picture, EssilorLuxottica may find it difficult to market them to its usual customers as the newest and greatest thing.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Victoria writes about a variety of technology topics, including Web3.0, AI, and cryptocurrencies. Her extensive experience allows her to write insightful articles for a wide audience.
