Rumors Claim OpenAI ‘Arrakis’ Will Be Even More Powerful Than GPT-4 and Gobi

In Brief

Two Reddit users recently claimed to have access to two of OpenAI’s internal models, Gobi and Arrakis, whose reported capabilities go well beyond anything released so far.

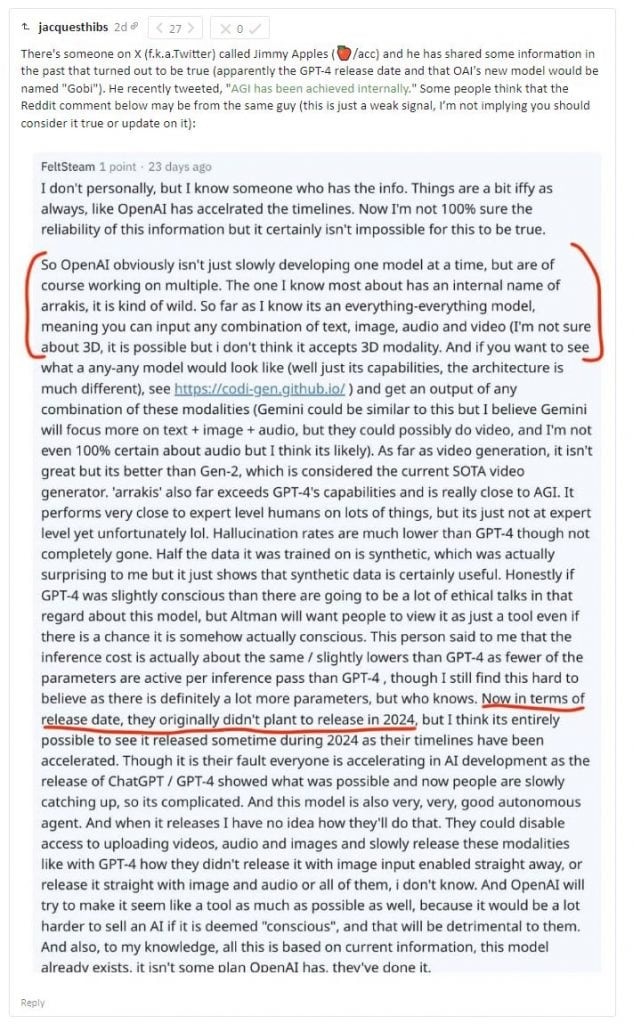

The rumors suggest that OpenAI has a powerful "any-to-any" multimodal model called “Arrakis” that exceeds GPT-4’s capabilities and performs close to human experts in various fields.

Its hallucination rate is reportedly lower than GPT-4’s, and half of its training data is said to be synthetic.

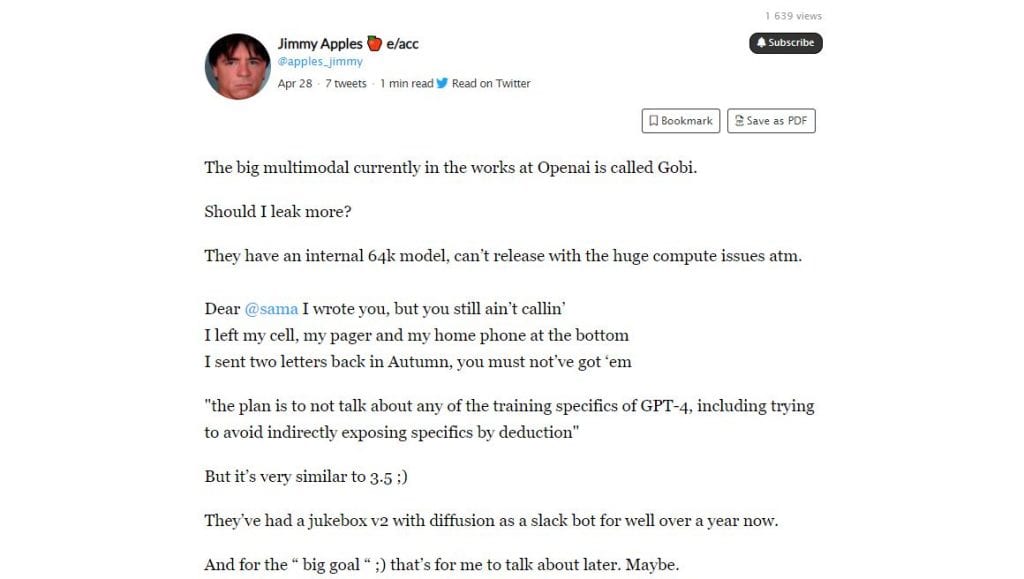

A Twitter user’s series of predictions regarding OpenAI’s developments and future projects has sparked interest and speculation within the tech community. While some of the claims seem far-fetched, they’ve nevertheless ignited conversations about the potential directions of artificial intelligence research.

In a tweet that has since been deleted, the user accurately foresaw the existence of OpenAI’s Gobi model, which had recently made headlines. This tweet, however, wasn’t the only prediction that caught the attention of AI enthusiasts.

In an earlier Twitter thread, the same user claimed that GPT-4 had already been integrated into Bing, with an official announcement expected shortly, and predicted that GPT-4 would gain the ability to process images. Both predictions turned out to be accurate.

LMAO 😂 I was asking Bing chat how to distinguish hail and sleet and it decided to illustrate using ASCII art. Way to work around not having a graphical interface, Bing 🤣 pic.twitter.com/D1e4IwefWF

— Michael P. Frank 💻🔜♻️ e/acc (@MikePFrank) March 2, 2023

One of the most intriguing claims made by this Twitter account is that “AGI has been achieved internally” at one of the AI laboratories, likely OpenAI. AGI, or Artificial General Intelligence, refers to a level of AI development at which machines can perform tasks as well as humans across a broad range of activities. The claim is bold, and whether any lab has reached that milestone remains a topic of debate among AI experts.

This account suggests that OpenAI has been working on a model codenamed Arrakis, after the desert planet that is the source of “spice” in the Dune universe. Arrakis is rumored to be released in 2025, though accelerated research could bring the launch forward to 2024.

Wait, can we consider seriously the hypothesis that 1) the recent hyped tweets from OA's staff
2) "AGI has been achieved internally"
3) sama's comments on the qualification of slow or fast takeoff hinging on the date you count from
4) sama's comments on 10000x researchers
are… https://t.co/f57g7dXMhM pic.twitter.com/Gap3V7VqkK

— Siméon ⏸️ (@Simeon_Cps) September 24, 2023

Interestingly, another Reddit account echoes many of these claims in a similar style, raising questions about their origins and credibility. According to this Reddit account, Arrakis surpasses GPT-4 in reasoning and logical inference. It is described as a multimodal model capable of generating various types of data, including video. Despite these advanced capabilities, it reportedly does not yet outperform humans in every area.

Hallucinations, a known issue in AI models, are reportedly less frequent in Arrakis than in GPT-4. Approximately half of the training data used for Arrakis is said to be synthetic, generated by other AI models. Arrakis is also touted as a highly capable autonomous agent that can be assigned tasks and complete them independently.

Its inference cost is reportedly about the same as GPT-4’s, thanks to conditional mixture-of-experts (MoE) and multimodal weight offloading. According to this account, its release is scheduled for 2024. If the rumor holds, 2024 could be an even more eventful year for AI than 2023, and the pace of AI development is unlikely to slow any time soon.

While these claims and rumors are fascinating, it’s essential to approach them with a healthy dose of skepticism. Many tweets from the original Twitter user have been deleted, creating gaps in the narrative. However, the tech community remains intrigued, eagerly anticipating whether these speculations will evolve into concrete developments in the world of AI. Only time will tell the true extent of OpenAI’s innovations.

- The Information has reported on Google’s next-generation model, Gemini, which is designed to work with multiple modalities such as text, images, video, and audio. OpenAI is aiming to beat Google in this field by releasing an even more powerful multimodal model, codenamed Gobi. Unlike GPT-4, Gobi was designed and trained as a multimodal model from the start. However, Gobi’s training does not appear to have begun yet, so it is unclear how it could come out before Gemini (expected in autumn 2023).

- OpenAI has reportedly delayed rolling out image input for its models mainly because of concerns that attackers could misuse the new features. OpenAI engineers appear to be close to resolving the legal issues surrounding the new technology. By the end of the year, we should see what companies have to offer in the multimodal model space.


Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
