OpenAI updated GPT-3: higher quality writing and longer text capability

In Brief

OpenAI updates GPT-3 model to help generate text of higher quality

OpenAI, an artificial intelligence research lab, has announced an updated version of its text-generating GPT-3 model. The new model, dubbed “Davinci,” is said to produce higher-quality text than the original GPT-3 model. According to the announcement, it is less toxic, less likely to get confused by the data, and generally better across tasks. Even the 1.3B-parameter version of the new model is said to outperform the 175B-parameter version of the old one. It looks like Reinforcement Learning is back in vogue, thanks to language models.

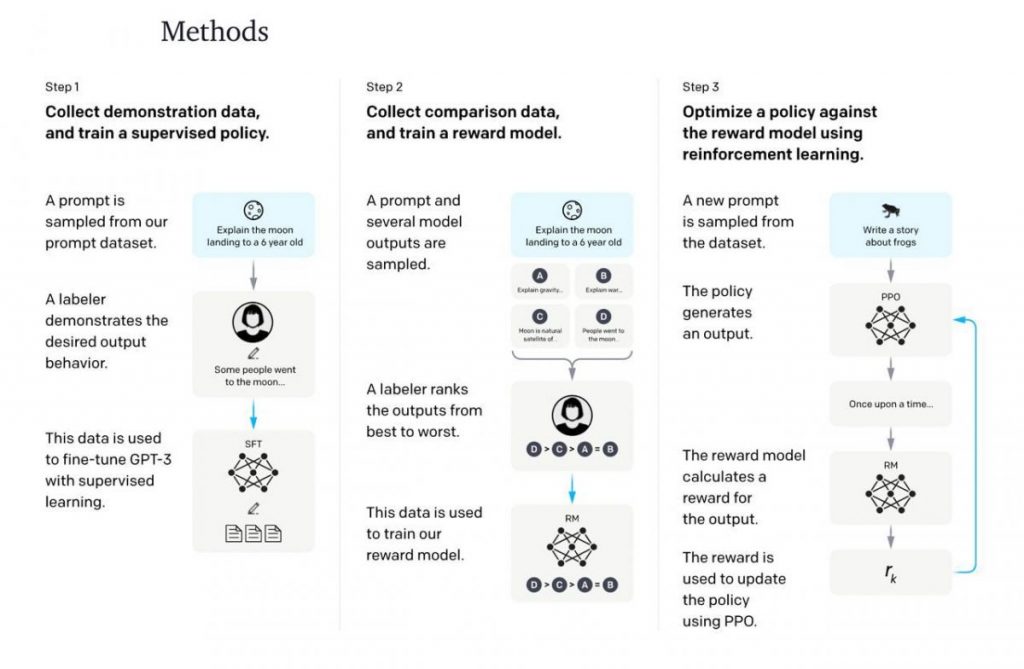

Architecturally, this is still the same GPT-3; the key difference lies in the additional training:

- First, researchers trained the model on primary data.

- Then, people manually rated the quality of the resulting outputs, and a reward model was trained to predict those ratings.

- Next, a Reinforcement Learning algorithm (PPO) was used to slightly fine-tune GPT according to this reward model.
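The last two steps can be illustrated with a minimal sketch. It is not OpenAI's implementation: the function names, the squared-error reward loss, and the `clip_eps` and `beta` values are all assumptions made for illustration. It shows the standard PPO clipped objective plus a penalty for drifting from the original model, which is one common way to keep the tuning "slight".

```python
import math


def reward_model_loss(predicted_score: float, human_rating: float) -> float:
    """Squared error between the reward model's score and a human rating.

    Assumption: ratings are plain numbers; real systems may use rankings instead.
    """
    return (predicted_score - human_rating) ** 2


def ppo_clipped_objective(logp_new: float, logp_old: float,
                          advantage: float, clip_eps: float = 0.2) -> float:
    """Standard PPO clipped surrogate objective for a single sample.

    The probability ratio is clipped to [1 - clip_eps, 1 + clip_eps], so one
    update cannot move the policy far from the policy that produced the sample.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)


def penalized_reward(reward: float, logp_policy: float,
                     logp_reference: float, beta: float = 0.1) -> float:
    """Reward minus a penalty for diverging from the reference (pre-RL) model.

    beta is a hypothetical coefficient chosen only for this example.
    """
    return reward - beta * (logp_policy - logp_reference)
```

In this sketch, a sample whose probability doubled under the new policy contributes only as if it had grown by 20% when the advantage is positive, which is how clipping limits the size of each update.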

Here’s what the updated version of GPT-3 from OpenAI can do:

- Follow instructions better (achieved with RL and the InstructGPT method).

- Produce higher-quality writing: the model has been tuned on more text and achieves better perplexity.

- Continue writing long texts: the limit is 4K tokens, half that of code-davinci-002.
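As a rough illustration of what the length limit means in practice, here is a small sketch. It assumes the 4K limit is counted in tokens and is shared between the prompt and the generated continuation; the constant and the function name are hypothetical, and the exact limit may differ slightly from the round figure used here.

```python
CONTEXT_LIMIT_TOKENS = 4000  # assumed round figure for the "4K" limit


def max_completion_tokens(prompt_tokens: int,
                          limit: int = CONTEXT_LIMIT_TOKENS) -> int:
    """Return how many tokens remain for the continuation.

    Assumes the prompt and the generated text share one context window.
    """
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(0, limit - prompt_tokens)
```

Under these assumptions, a 1,500-token prompt leaves room for at most 2,500 generated tokens, while the same prompt with an 8,000-token limit would leave 6,500.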

The price is the same as code-davinci-002, so there is no reason not to use it.

OpenAI’s goal is to eventually create a model that can generate text that is indistinguishable from human-written text. The updated GPT-3 model is a step in the right direction, but there is still a long way to go.



About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
