Gobi: OpenAI’s Multimodal LLM Looking to Beat Google’s Gemini

In Brief

Google’s Gemini, a next-generation AI model, is gaining interest due to its multimodal capabilities.

Multimodality means a single model works with several modalities, such as text, images, video, and audio.

OpenAI is aiming to lead the race in multimodality with Gobi, a multimodal model designed and trained for this purpose.

Recent buzz in the tech world revolves around Google’s next-generation model, Gemini, which notably ventures into the realm of multimodality. But what exactly is multimodality in AI, and why is it generating so much interest?

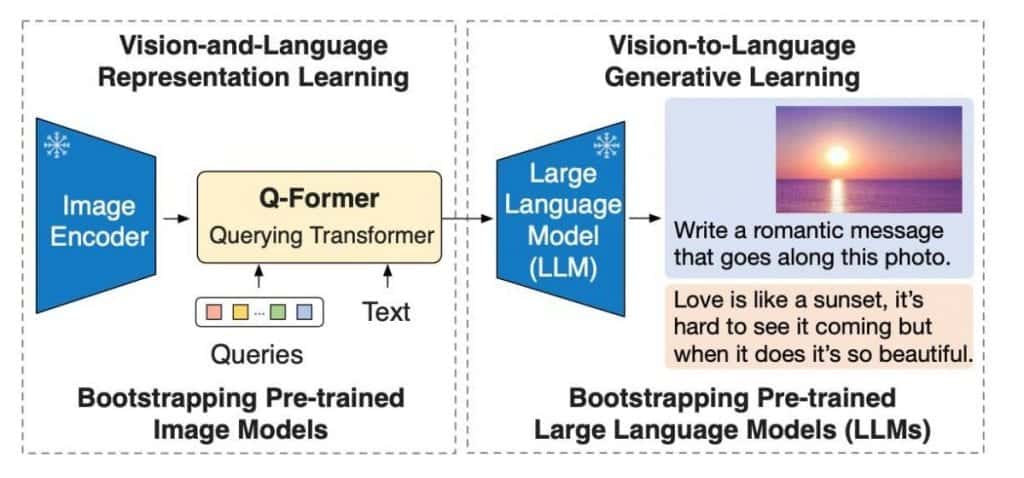

Multimodal AI, in essence, is a model’s ability to work with multiple modalities, such as text, images, and potentially video and audio. Multimodality can be implemented in several ways, however. One approach, colloquially termed “for the frugal,” combines two separate models: one for images and another, typically a Large Language Model (LLM), for text. A bridging layer is then trained to translate image representations into a text-like format the LLM can consume. Open-source AI has explored this approach for some time, but it has limitations, chiefly because the LLM may never truly grasp the other modalities; they are, in a sense, merely bolted on.
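To make the bridging idea concrete, here is a minimal pure-Python sketch built on assumptions of our own. A frozen image encoder has already produced one feature vector per image patch. A single linear layer, the only trained part, maps each vector to the LLM’s embedding size so the results can be prepended to the text tokens as “visual tokens.” The sizes, random weights, and function names are hypothetical. Real systems use framework tensors, and their bridge may have more than one layer.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def make_matrix(rows: int, cols: int, seed: int) -> Matrix:
    """Random weights standing in for a trained projection (illustrative values only)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def project(features: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Linear bridging layer: maps one image-patch feature vector (length d_img)
    to the LLM's embedding size (length d_llm = number of rows in weights)."""
    return [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, bias)]


def build_llm_input(image_patches: List[Vector], text_embeddings: List[Vector],
                    weights: Matrix, bias: Vector) -> List[Vector]:
    """Project each image patch into a "visual token" and prepend the results to the text tokens."""
    visual_tokens = [project(p, weights, bias) for p in image_patches]
    return visual_tokens + text_embeddings


# Toy usage with made-up sizes: 4-dim image features, 6-dim LLM embeddings.
D_IMG, D_LLM = 4, 6
weights = make_matrix(D_LLM, D_IMG, seed=0)  # the only trainable part in this setup
bias = [0.0] * D_LLM
patches = [[0.5, -0.2, 0.1, 0.9], [0.3, 0.3, -0.4, 0.0]]  # pretend frozen-encoder output
text = [[0.0] * D_LLM for _ in range(3)]  # pretend embeddings of 3 text tokens
sequence = build_llm_input(patches, text, weights, bias)
print(len(sequence), len(sequence[0]))  # 5 6
```

The LLM only ever sees these projected vectors and never the image itself. This is why the other modality can feel “appended” rather than truly understood.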

A more ambitious path involves training a model from the ground up to understand and operate with multiple modalities simultaneously. This approach aims to give the model a more holistic understanding of the world, which could improve its reasoning and its capacity to discern cause-and-effect relationships.

This brings us to the latest development in the AI arena, where OpenAI is strategically positioning itself to lead the multimodal race. Its weapon of choice is Gobi, a model designed and trained as multimodal from its inception. Unlike its predecessor GPT-4, Gobi was conceived with multimodality in mind, signaling a significant step forward in AI versatility.

However, there’s a twist in the tale. Reports suggest that Gobi’s training has not yet begun, which raises questions about its timeline relative to Google’s Gemini, slated for release in autumn 2023. The competition is heating up, and the race for AI supremacy in the multimodal landscape is on.

One might wonder why developing a new model takes so long, especially when it appears to involve “just” integrating images. Much of the answer lies in AI ethics and potential misuse. Visual understanding raises concerns such as using AI to bypass CAPTCHAs or applying facial recognition to track individuals. OpenAI appears to be diligently addressing these ethical and legal considerations before rolling out its technology.

Salesforce and Multimodal Models

Many companies are training prospective multimodal models. Salesforce, a leading SaaS CRM provider, is one example: it has focused its AI research on reducing the resources its models require. The company has been working on LLMs and on multimodal models, which handle multiple data types such as pictures, text, sound, and video. A typical multimodal task is answering questions about pictures. The main challenge, however, is integrating two different signals, one from the image and one from the text. Existing approaches often require lengthy training of large models to align or connect them.

Salesforce suggests reusing existing pretrained models and freezing their weights during training. Only a small network between them is trained, and it generates queries that carry information from one model to the other. This approach requires minimal training and achieves better metrics than the previous state-of-the-art approach. The approach is brilliant in its simplicity and elegance.

The original article provides code for the proposed approach, and a Colab notebook is available for users to experiment with their own pictures.
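The frozen-models idea can be illustrated with a deliberately simplified, hypothetical sketch. It is not Salesforce’s released code. We assume the frozen image encoder outputs a list of patch feature vectors. A handful of learned query vectors attend over those features with scaled dot-product attention, and each result is then projected to the LLM’s embedding size. Only the queries and the projection count as trainable. This loosely mirrors the learned-query design that appears to correspond to Salesforce’s BLIP-2 (its Querying Transformer), which is far more elaborate in practice. The class name, sizes, and weights are illustrative assumptions.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small random weights standing in for learned parameters (illustrative only)."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product: one output entry per row of m."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(xs: Vector) -> Vector:
    peak = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class QueryBridge:
    """Hypothetical trainable bridge between a frozen image encoder and a frozen LLM.

    Only self.queries and self.out_proj would be updated during training;
    the two frozen models are represented solely by their inputs/outputs.
    """

    def __init__(self, num_queries: int, d_img: int, d_llm: int, seed: int = 0):
        rng = random.Random(seed)
        self.queries = rand_matrix(num_queries, d_img, rng)  # learned query vectors
        self.out_proj = rand_matrix(d_llm, d_img, rng)  # maps to LLM embedding size

    def num_trainable(self) -> int:
        return sum(len(row) for row in self.queries + self.out_proj)

    def __call__(self, patch_features: Matrix) -> Matrix:
        d = len(patch_features[0])
        outputs = []
        for q in self.queries:
            # Scaled dot-product attention of one query over all image patches.
            scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in patch_features]
            weights = softmax(scores)
            # Weighted sum of patch features (keys double as values here for brevity).
            attended = [sum(w * p[i] for w, p in zip(weights, patch_features)) for i in range(d)]
            outputs.append(matvec(self.out_proj, attended))
        return outputs


# Toy usage: 10 patch vectors from a (pretend) frozen encoder become 4 LLM-sized vectors.
bridge = QueryBridge(num_queries=4, d_img=8, d_llm=16)
patches = rand_matrix(10, 8, random.Random(1))
soft_prompt = bridge(patches)
print(len(soft_prompt), len(soft_prompt[0]))  # 4 16
print(bridge.num_trainable())  # 4*8 + 16*8 = 160
```

Whatever the number of image patches, the bridge always hands the LLM the same small, fixed number of vectors. Its trainable parameter count is also tiny next to the frozen models it connects, which is why this style of training is comparatively cheap.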


Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
