OpenAI releases a powerful ChatGPT AI chatbot

In Brief

ChatGPT is OpenAI's new conversational AI model, built for interactive dialogue

Unlike GPT-3, ChatGPT has been specifically trained to carry on an interactive conversation and maintain the flow of dialogue. It is fine-tuned from a model in the GPT-3.5 series, which finished training in early 2022.

The dialogue format makes it possible for ChatGPT to answer follow-up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests. The chatbot can also draft letters, come up with jokes, and answer questions.

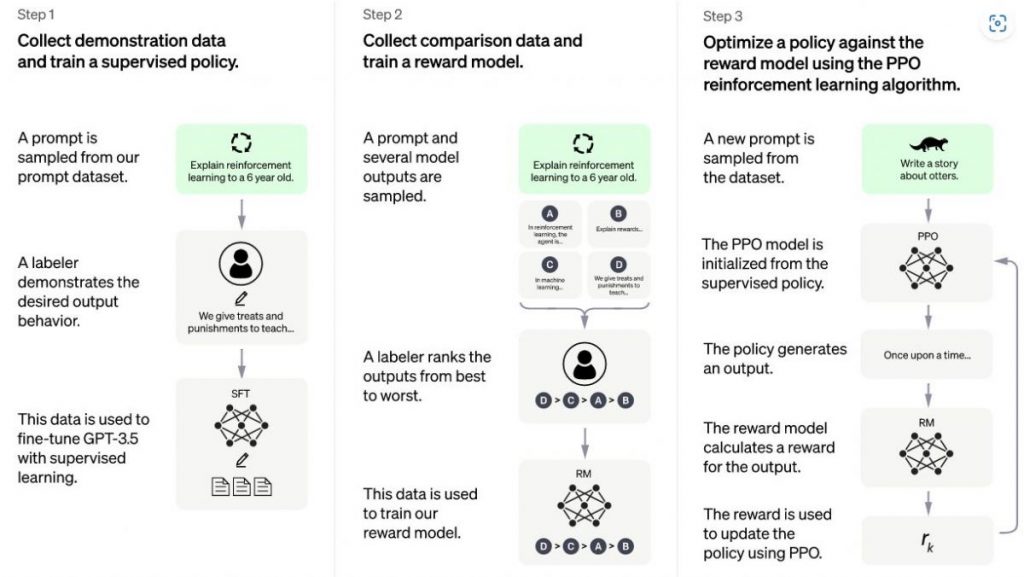

As with InstructGPT, OpenAI trained this model using Reinforcement Learning from Human Feedback (RLHF), with slight differences in the data collection setup. First, it trained an initial model with supervised fine-tuning: human AI trainers wrote conversations in which they played both sides, the user and the AI assistant. The trainers were given access to model-written suggestions to help them compose their responses.
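The supervised fine-tuning step can be illustrated with a small data-preparation sketch. It assumes a hypothetical format in which each trainer-written conversation is a list of `(role, text)` turns, and it turns every assistant turn into a training target, with all earlier turns as the context. The function name `dialogue_to_sft_examples` and the `User:`/`Assistant:` tags are illustrative choices, not OpenAI's actual format, and the training loop itself is left out.

```python
from typing import List, Tuple

# Hypothetical turn format: (role, text), where role is "user" or "assistant".
# In the setup described above, human trainers write BOTH roles.
Turn = Tuple[str, str]


def dialogue_to_sft_examples(dialogue: List[Turn]) -> List[Tuple[str, str]]:
    """Turn one trainer-written dialogue into (context, target) pairs.

    Each assistant turn becomes a target; everything said before it becomes
    the context the model is conditioned on. The role tags are an
    illustrative assumption, not OpenAI's real prompt format.
    """
    examples: List[Tuple[str, str]] = []
    history: List[str] = []
    for role, text in dialogue:
        if role not in ("user", "assistant"):
            raise ValueError(f"unknown role: {role!r}")
        if role == "assistant":
            # Context = all previous turns plus an open assistant tag.
            context = "\n".join(history + ["Assistant:"])
            examples.append((context, text))
        tag = "User:" if role == "user" else "Assistant:"
        history.append(f"{tag} {text}")
    return examples


# Usage: a short conversation in which a trainer wrote both sides.
dialogue = [
    ("user", "What is RLHF?"),
    ("assistant", "Reinforcement learning from human feedback."),
    ("user", "Who uses it?"),
    ("assistant", "OpenAI used it for InstructGPT and ChatGPT."),
]
for context, target in dialogue_to_sft_examples(dialogue):
    print(repr(context), "->", repr(target))
```

Supervised fine-tuning would then train the model to produce each `target` given its `context`, so one multi-turn conversation yields several training examples.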

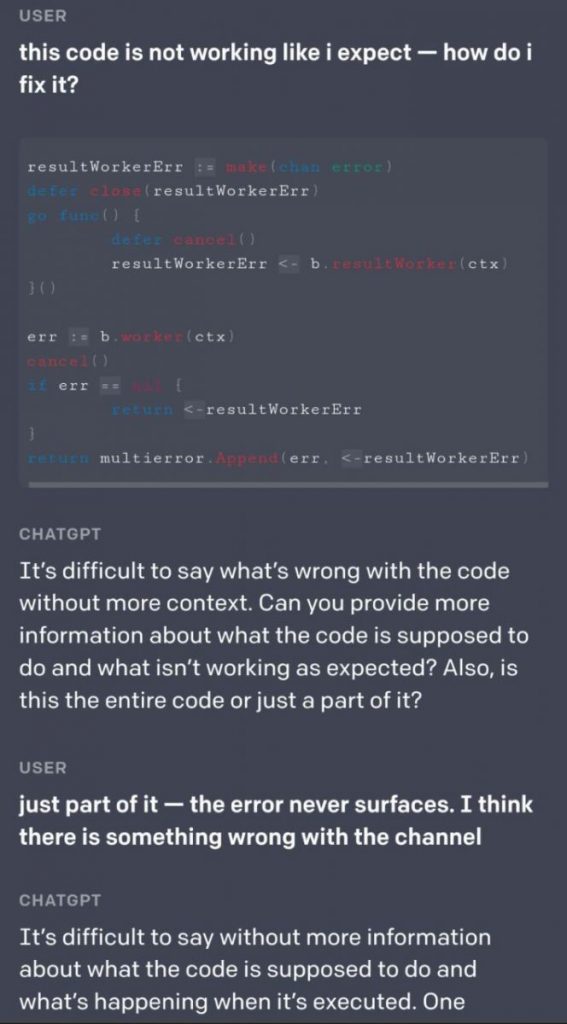

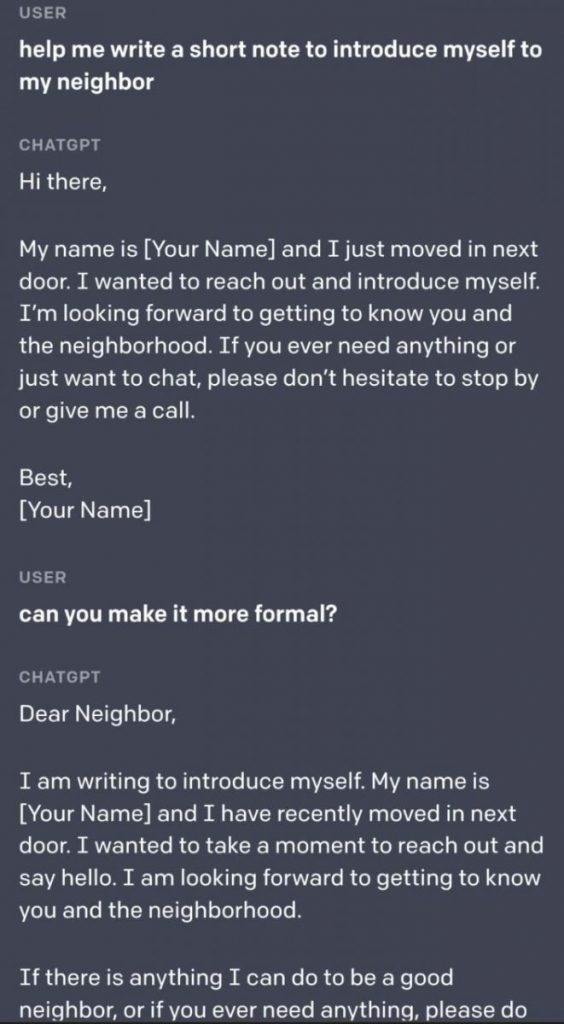

The images show some sample dialogues.

To build a reward model for reinforcement learning, OpenAI needed comparison data: two or more model responses ranked by quality. To collect it, OpenAI recorded conversations that AI trainers had with the chatbot. It then randomly selected a model-written message, sampled several alternative completions, and had AI trainers rank them. Using these reward models, OpenAI could fine-tune the model with Proximal Policy Optimization (PPO).
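The reward-model step can be sketched in Python. The article does not give ChatGPT's exact loss; this sketch assumes the pairwise formulation published for InstructGPT, where each ranking of K completions is expanded into K(K-1)/2 (preferred, rejected) pairs and the reward model is trained to minimize the average of -log σ(r_preferred - r_rejected). The rewards here are plain floats standing in for a reward model's outputs, and all function names are hypothetical.

```python
import itertools
import math
from typing import Dict, List, Tuple


def ranking_to_pairs(ranked: List[str]) -> List[Tuple[str, str]]:
    """Expand one ranking (best first) into all (preferred, rejected) pairs.

    K ranked completions yield K * (K - 1) / 2 comparisons.
    """
    # combinations() keeps input order, so the first item of each pair
    # is always the higher-ranked completion.
    return list(itertools.combinations(ranked, 2))


def neg_log_sigmoid(d: float) -> float:
    """Compute -log(sigmoid(d)) = log(1 + exp(-d)) without overflow."""
    if d >= 0:
        return math.log1p(math.exp(-d))
    return -d + math.log1p(math.exp(d))


def pairwise_reward_loss(rewards: Dict[str, float], ranked: List[str]) -> float:
    """Average pairwise ranking loss for one prompt.

    `rewards` maps each completion to the scalar a (hypothetical) reward
    model assigned it; the loss is small when preferred completions score higher.
    """
    pairs = ranking_to_pairs(ranked)
    if not pairs:
        raise ValueError("need at least two ranked completions")
    total = sum(neg_log_sigmoid(rewards[win] - rewards[lose]) for win, lose in pairs)
    return total / len(pairs)


# Usage: trainers ranked completions A > B > C for one prompt.
ranked = ["A", "B", "C"]
agrees = {"A": 2.0, "B": 0.5, "C": -1.0}     # scores follow the human ranking
disagrees = {"A": -1.0, "B": 0.5, "C": 2.0}  # scores reverse the human ranking
print(pairwise_reward_loss(agrees, ranked))
print(pairwise_reward_loss(disagrees, ranked))
```

The loss is lower for `agrees` than for `disagrees`. In a real setup the reward model's parameters, not a dictionary of numbers, would be adjusted to reduce this loss, and the trained reward model would then supply the reward signal for PPO.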

You can talk to the chatbot yourself, although its servers are currently at capacity.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.