MIT and Google Brain Propose Novel Way to Improve LLMs with Multi-Agent Debates

In Brief

MIT and Google Brain have proposed a novel approach called Multi-Agent Debates, which leverages inter-model communication to enhance the quality of reasoning and output generation in LLMs.

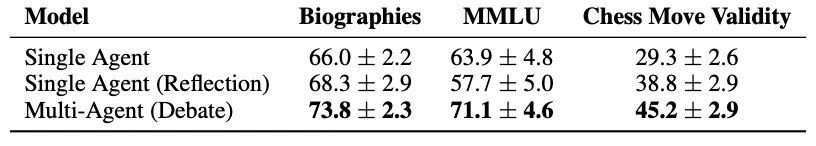

The Society of Mind approach outperformed other options in all categories, demonstrating its superiority in enhancing LLMs’ reasoning capabilities.

Researchers at MIT and Google Brain have proposed a novel approach called Multi-Agent Debates, which leverages inter-model communication to enhance the quality of reasoning and output generation in Large Language Models (LLMs). If the reported gains hold up, the technique could change how language models are used across a range of applications.

Drawing inspiration from the work of Marvin Minsky, a prominent advocate of agent-based AI and author of “The Society of Mind”, the MIT and Google Brain researchers sought to enhance LLMs by incorporating the concept of agents working together to create intelligent behaviour. Minsky’s theory posits that the mind is not a single entity but rather a collection of smaller processes called “agents” that collaborate to exhibit intelligent behaviour.

The Multi-Agent Debates approach brings this concept to LLMs, creating an arena where multiple agents engage in debates. Each agent receives a request or prompt, and their responses are shared with all other agents. The context for each LLM at each step consists of its own reasoning as well as the reasoning of its neighbouring agents. Through several iterations of these debates, the models converge to a stable generation, much like humans reaching a common conclusion during a discussion.

To outline the algorithm in simpler terms:

- Multiple instances of language models generate separate candidate responses for a given query.

- Each model instance reads and critiques the responses of all other models, incorporating this feedback to update its own response.

- This process is repeated for multiple rounds until a final answer is reached.

This approach encourages models to generate responses that align with their internal critiques and are reasonable in light of the responses from other agents. The resulting consensus among the models supports multiple chains of reasoning and allows for the exploration of various potential answers before arriving at a final response.
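The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: the names `run_debate`, `build_debate_prompt`, and `make_toy_agent` are hypothetical, the prompt wording is an assumption, and the toy agent simply adopts the most common answer it sees so the example runs without any LLM API. In real use, each agent would wrap a call to a language model.

```python
from collections import Counter
from typing import Callable, List

# An "agent" is any callable that maps a prompt string to a response string.
# Wrapping a real LLM behind this interface is left out; it is an assumption.
Agent = Callable[[str], str]

OWN_PREFIX = "Your previous answer: "
PEER_PREFIX = "- Another agent said: "


def build_debate_prompt(question: str, own: str, others: List[str]) -> str:
    """Combine the question, the agent's own prior answer, and its peers' answers."""
    peer_lines = "\n".join(PEER_PREFIX + r for r in others)
    return (
        f"Question: {question}\n"
        f"{OWN_PREFIX}{own}\n"
        f"{peer_lines}\n"
        "Considering these answers, give an updated answer."
    )


def run_debate(question: str, agents: List[Agent], rounds: int = 2) -> List[str]:
    """Run a fixed number of debate rounds and return each agent's final answer."""
    # Step 1: every agent answers the question independently.
    responses = [agent(question) for agent in agents]
    for _ in range(rounds):
        updated = []
        for i, agent in enumerate(agents):
            # Step 2: each agent sees its own answer plus all other answers.
            others = responses[:i] + responses[i + 1:]
            updated.append(agent(build_debate_prompt(question, responses[i], others)))
        # Step 3: all agents update at once, then the next round begins.
        responses = updated
    return responses


def make_toy_agent(initial: str) -> Agent:
    """Stand-in for an LLM: answers `initial` first, then follows the majority."""
    def agent(prompt: str) -> str:
        seen = []
        for line in prompt.splitlines():
            if line.startswith(OWN_PREFIX):
                seen.append(line[len(OWN_PREFIX):])
            elif line.startswith(PEER_PREFIX):
                seen.append(line[len(PEER_PREFIX):])
        if not seen:  # plain question, no debate context yet
            return initial
        return Counter(seen).most_common(1)[0][0]
    return agent


agents = [make_toy_agent("4"), make_toy_agent("4"), make_toy_agent("5")]
print(run_debate("What is 2 + 2?", agents, rounds=2))  # prints ['4', '4', '4']
```

The toy agents make the convergence visible: the dissenting agent switches to the majority answer after one round. A real model would weigh the other agents' reasoning rather than count votes, so convergence is not guaranteed.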

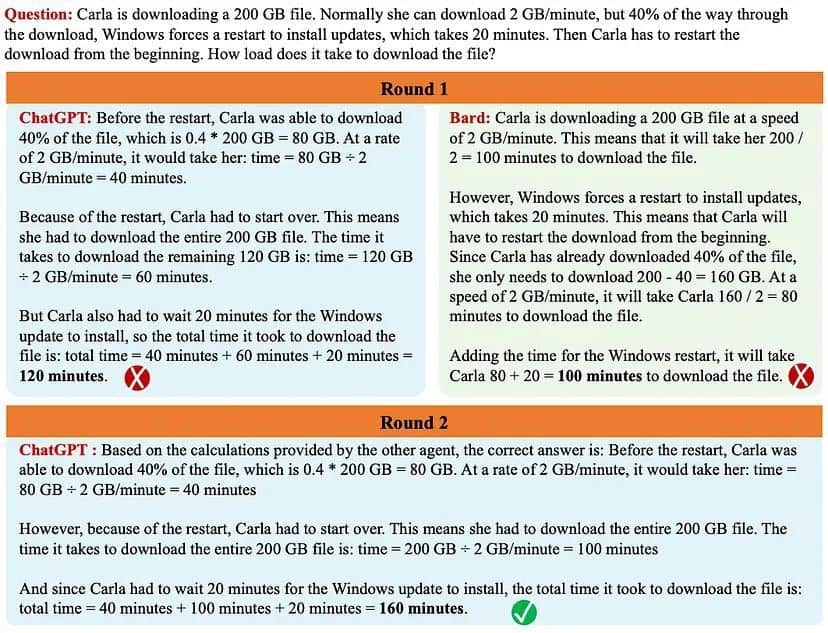

Once each agent’s response is passed to the other, ChatGPT is able to give the right answer by using its own prior response and Bard’s prior response as context.

The researchers conducted experiments to evaluate the effectiveness of this technique across different domains. The evaluation included tasks such as accurately stating facts about the biography of a famous computer scientist, validating factual knowledge questions, and predicting the next best move in a chess game. The results, as shown in Table 1, revealed that the Society of Mind approach outperformed the other options in every category.

While this development holds considerable promise, the stopping criterion for these debates remains an open question. Deciding when to end the discussion and settle on a final answer affects both quality and cost, since every extra round means another call to each model. Researchers are exploring metrics such as perplexity or BLEU scores to define this criterion.
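As a rough illustration of what a stopping rule could look like, the sketch below uses a much simpler signal than perplexity or BLEU: it stops when the agents agree, when no answer changed since the previous round, or when a round budget runs out. This is an assumption made for illustration, not a criterion taken from the research; the function names and the default thresholds are hypothetical.

```python
from collections import Counter
from typing import List


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def agreement_ratio(responses: List[str]) -> float:
    """Fraction of agents that share the most common normalized answer."""
    if not responses:
        return 0.0
    counts = Counter(normalize(r) for r in responses)
    return counts.most_common(1)[0][1] / len(responses)


def has_converged(previous: List[str], current: List[str]) -> bool:
    """True when no agent changed its normalized answer between two rounds."""
    return [normalize(p) for p in previous] == [normalize(c) for c in current]


def should_stop(
    previous: List[str],
    current: List[str],
    round_index: int,
    max_rounds: int = 5,         # hypothetical budget
    min_agreement: float = 1.0,  # hypothetical threshold: full consensus
) -> bool:
    """Stop on exhausted budget, sufficient agreement, or a stable generation."""
    if round_index >= max_rounds:
        return True
    if agreement_ratio(current) >= min_agreement:
        return True
    return has_converged(previous, current)
```

Exact string matching only suits short answers such as numbers or chess moves. For free-form text, a similarity measure would have to replace `normalize`, which is where metrics like the ones mentioned above could come in.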

The fusion of the Chain of Thought approach with the concept of the Society of Mind brings us closer to a future where language models exhibit enhanced reasoning and produce more accurate and nuanced responses.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
