Microsoft President Highlights Deepfakes as Top Concern in AI

In Brief

Microsoft’s president expresses deep concern over the rise of deepfakes.

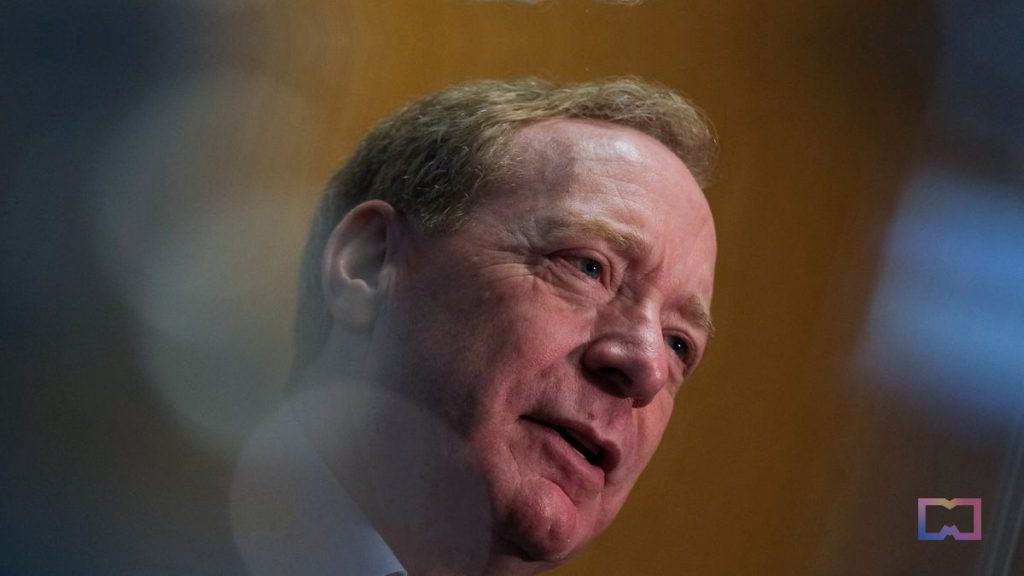

During a speech, Brad Smith emphasized the urgent need for mechanisms that help people distinguish authentic content from AI-generated material.

Microsoft President Brad Smith has raised concerns about artificial intelligence, emphasizing the potential harm caused by deepfakes: deceptive content that appears genuine but is actually fabricated. In a speech in Washington on AI regulation, Smith stressed the need for mechanisms that help people tell genuine content from AI-generated material, especially where malicious intent may be involved.

“We’re going to have to address the issues around deep fakes. We’re going to have to address in particular what we worry about most foreign cyber influence operations, the kinds of activities that are already taking place by the Russian government, the Chinese, the Iranians,” he said.

According to Reuters, Smith called for licensing of the most critical forms of AI “with obligations to protect the security, physical security, cybersecurity, national security.” He also said that a new or updated generation of export controls is needed to prevent these models from being stolen or used in ways that would violate the country’s export control regulations.

During his speech, Smith argued that people should be held accountable for any problems caused by AI. He called on lawmakers to require safety measures that keep humans in control of AI systems governing critical infrastructure such as the electric grid and water supply. Smith also advocated a system similar to “Know Your Customer,” under which developers of powerful AI models would be required to monitor how their technology is used and to tell the public which content is AI-generated, so that people can identify manipulated videos.

AI regulation is currently the subject of global debate. Recently, OpenAI CEO Sam Altman testified before the U.S. Senate, emphasizing the importance of AI regulation. Altman expressed his company’s willingness to help policymakers balance promoting safety with ensuring that people can benefit from AI technology. However, Altman does not support the EU AI Act and has stated that OpenAI may leave Europe if the rule requiring the disclosure of copyrighted material used in AI system development is enforced.


About The Author

Agne is a journalist who covers the latest trends and developments in the metaverse, AI, and Web3 industries for the Metaverse Post. Her passion for storytelling has led her to conduct numerous interviews with experts in these fields, always seeking to uncover exciting and engaging stories. Agne holds a Bachelor’s degree in literature and has an extensive background in writing about a wide range of topics including travel, art, and culture. She has also volunteered as an editor for an animal rights organization, where she helped raise awareness about animal welfare issues.
