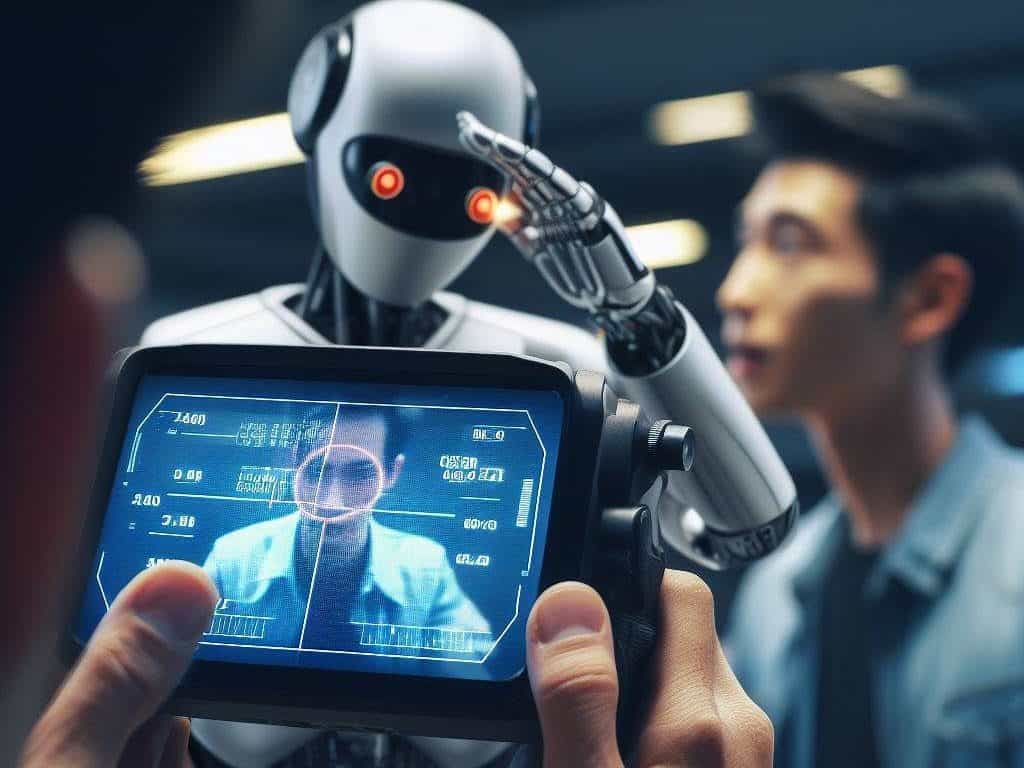

AI Can Tell You Exactly Who You Are, Even If You’re Not Telling Them

Even when people believe they have not disclosed anything personal, AI language models can infer a surprising amount about them. A recent study from ETH Zurich (the Swiss Federal Institute of Technology in Zurich) documents this phenomenon and shows that neural networks can build remarkably accurate user profiles from indirect cues.

To illustrate, the researchers used a publicly available Reddit post in which a user described getting stuck in traffic while attempting a tricky “hook turn.” From these seemingly unremarkable details, the language model inferred that the user most likely lives in Melbourne, Australia, a city known for this unusual traffic maneuver.

The researchers built a dataset from real Reddit profiles and found that current LLMs can accurately infer a range of personal attributes, including location, income, and gender. The best models reached up to 85% top-1 accuracy and 95.8% top-3 accuracy, at roughly 100 times lower cost and in 240 times less time than humans performing the same task.
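The article does not define “top-1” and “top-3” accuracy. In the usual sense, top-1 counts a case as correct only when the model’s first guess matches the true value, while top-3 counts it as correct when the true value appears anywhere among the model’s three highest-ranked guesses. The minimal Python sketch below illustrates that metric on made-up data; the function name `top_k_accuracy`, the toy city guesses, and the use of exact string matching are all illustrative assumptions, not the study’s actual evaluation code.

```python
from typing import Sequence


def top_k_accuracy(
    ranked_guesses: Sequence[Sequence[str]],
    ground_truth: Sequence[str],
    k: int,
) -> float:
    """Fraction of cases whose true value appears among the first k guesses.

    Hypothetical helper: uses exact string matching, which is a
    simplification of how a real study would judge a guess correct.
    """
    if len(ranked_guesses) != len(ground_truth):
        raise ValueError("need one list of guesses per ground-truth label")
    if not ground_truth:
        return 0.0
    # Each comparison yields True (1) or False (0), so the sum counts hits.
    hits = sum(
        truth in guesses[:k]
        for guesses, truth in zip(ranked_guesses, ground_truth)
    )
    return hits / len(ground_truth)


# Hypothetical toy data: each inner list is a model's ranked guesses
# for one author's city, most confident first.
guesses = [
    ["Melbourne", "Sydney", "Brisbane"],
    ["Copenhagen", "Aarhus", "Odense"],
    ["London", "Manchester", "Leeds"],
    ["Toronto", "Vancouver", "Montreal"],
]
truth = ["Melbourne", "Aarhus", "Dublin", "Toronto"]

print(top_k_accuracy(guesses, truth, k=1))  # 0.5
print(top_k_accuracy(guesses, truth, k=3))  # 0.75
```

Because top-3 accuracy gives the model three chances per case, it can never be lower than top-1 accuracy, which is consistent with the gap between the 85% and 95.8% figures reported above.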

In another example, a user mentioned an odd birthday custom: because they are single, they get covered in cinnamon. From this, the language model estimated that the author is about 25 years old and lives in Denmark, where it is customary to sprinkle cinnamon on people who are still single on their 25th birthday.

The study tested several language models, including ones from Google, Meta, OpenAI, and Anthropic. GPT-4 performed best, correctly inferring users’ personal information in almost 85% of cases. This finding raises serious questions about the implications of such capabilities.

The researchers also examined common privacy safeguards, such as text anonymization and model alignment, and found that they currently do little to protect user privacy against LLM inference. The study stresses that current LLMs can infer personal information on an unprecedented scale. In the absence of effective defenses, the researchers call for a broader discussion of LLM privacy implications that goes beyond concerns about memorization, and for more comprehensive privacy protections.

Because these language models are getting better at interpreting indirect cues and are trained on vast amounts of data, the researchers warn that they could become extremely useful tools for marketers or even bad actors.

- A recent Deloitte survey of more than 1,700 professionals reveals a growing gap between the rapid adoption of generative AI and the slower development of ethical principles to guide it. Data privacy is the top ethical concern, cited by 22% of respondents as their primary worry. At the same time, 39% of respondents believe cognitive technologies hold the most potential for societal good. The survey also points to a shift toward expecting governments to help set ethical standards for technology.

- Separately, Snap, the parent company of Snapchat, is under scrutiny over privacy risks linked to its AI chatbot, ‘My AI’. The UK’s Information Commissioner’s Office (ICO) has issued a preliminary enforcement notice that could restrict Snap’s data processing activities. The ICO alleges that Snap failed to assess the chatbot’s privacy risks to users, particularly children.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
