ChatGPT API Is Now Available, Opens the Floodgates for Developers

In Brief

OpenAI has announced that ChatGPT is now available via API, allowing developers to quickly and easily integrate it into their applications.

The price is 10 times lower than that of the most powerful existing GPT-3.5 model, possibly because the model has been shrunk, roughly speaking, to a 6.7B-parameter model.

By default, data submitted through the API is no longer used for service upgrades (including model training).

OpenAI has just announced that ChatGPT and Whisper are available through the company's API, meaning any developer can add them to an application in literally one evening. In a blog post, the company explains that a series of optimizations has cut ChatGPT's costs by 90% since December. The new gpt-3.5-turbo model costs ten times less than the existing GPT-3.5 models, most likely because it is smaller: roughly speaking, perhaps a 6.7B-parameter model rather than a 175B one.
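To put "ten times cheaper" into numbers, here is a small Python sketch. The per-token prices are OpenAI's launch-era list prices ($0.002 per 1,000 tokens for gpt-3.5-turbo and $0.02 per 1,000 tokens for text-davinci-003); they are not quoted in this article, so check the current pricing page before relying on them. The 5-million-token monthly volume is a made-up example.

```python
# Launch-era list prices in USD per 1,000 tokens.
# Assumption: not quoted in this article; check OpenAI's current pricing page.
PRICE_PER_1K_TOKENS = {
    "text-davinci-003": 0.02,
    "gpt-3.5-turbo": 0.002,
}


def monthly_cost_usd(model: str, tokens_per_month: int) -> float:
    """Cost of a given monthly token volume at the list price above."""
    return PRICE_PER_1K_TOKENS[model] * tokens_per_month / 1000


if __name__ == "__main__":
    volume = 5_000_000  # hypothetical app: 5M tokens (prompt + completion) per month
    for name in PRICE_PER_1K_TOKENS:
        print(f"{name}: ${monthly_cost_usd(name, volume):,.2f}")
```

For this hypothetical volume the script prints $100.00 for text-davinci-003 and $10.00 for gpt-3.5-turbo.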

To be completely honest, I am personally very pleased about this news. It was painful to see how many programs and bots claimed to have ChatGPT inside when they were actually using the text-davinci-003 model.

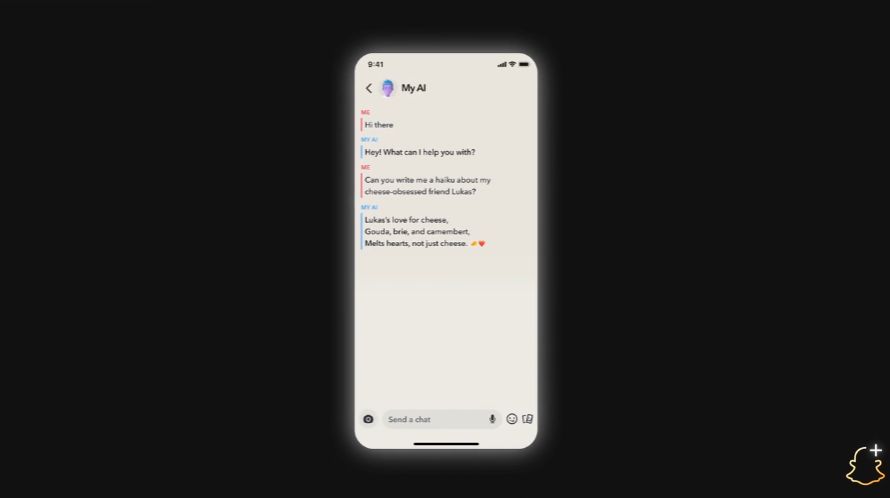

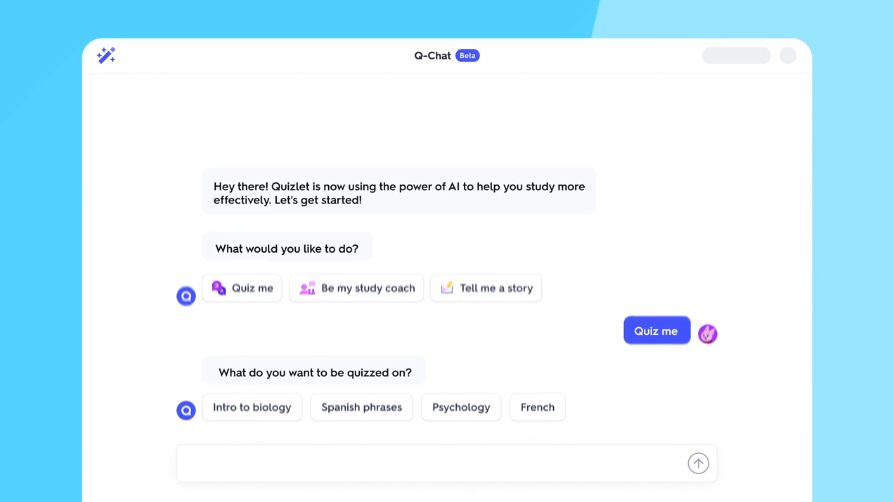

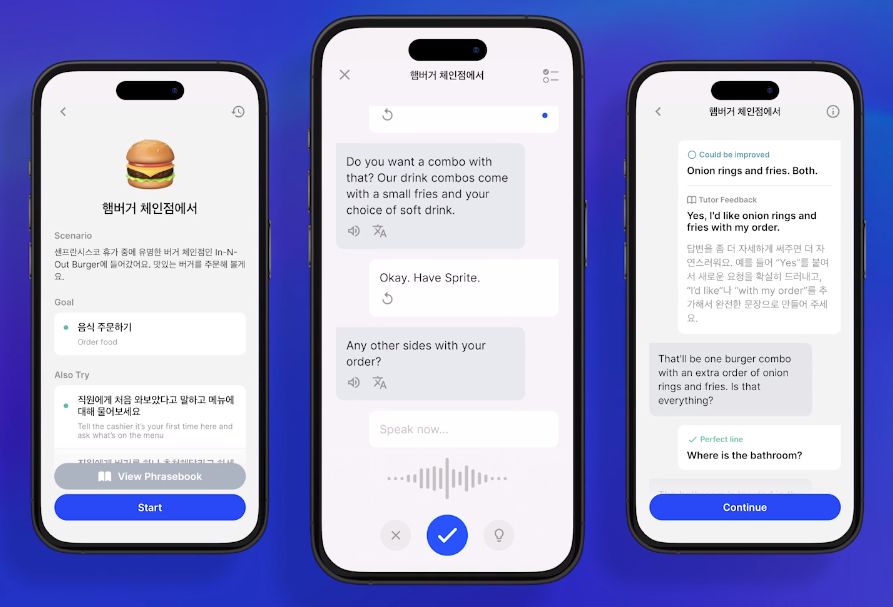

They also announced several integrations. One example is Quizlet, a platform for studying new topics: it will now offer a personal AI tutor that gives you prompts and helps you learn.

Instacart lets shoppers ask questions about food ("How do I make fish tacos?" or "Suggest a healthy lunch for my kids") and get inspiring answers with links to products that can be bought in one click. From what I've seen so far, this is the first such integration to offer a kind of "product placement." The same idea shows up in search chatbots, where links someone paid for are mixed into the results.

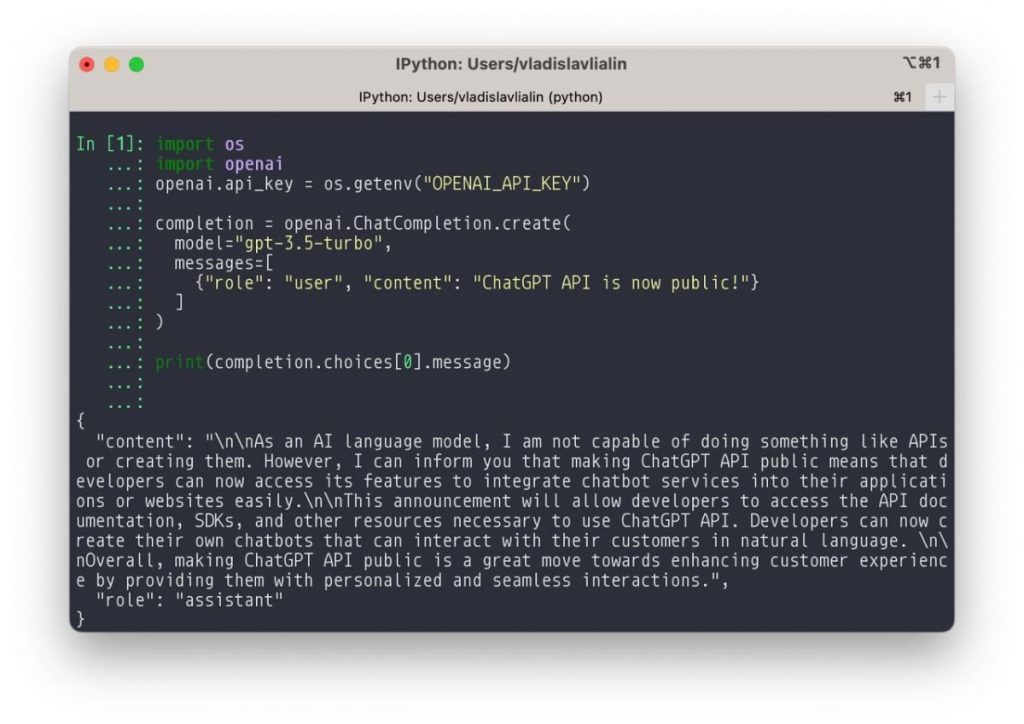

Querying the model now works differently: instead of a single block of text, you send a list of messages, each tagged with a role, in a format described as Chat Markup Language ("ChatML"). The dialogue model was fine-tuned with these roles in mind: an AI assistant writes the responses, and a user writes the messages from your side.
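To make the role structure concrete, here is a minimal Python sketch using only the standard library. It builds, but does not send, a request to the Chat Completions endpoint (https://api.openai.com/v1/chat/completions) containing a `system` message (an optional instruction that sets the assistant's behavior) and a `user` message. It also renders the same messages in the ChatML text layout OpenAI documented at launch (`<|im_start|>role ... <|im_end|>`). The helper names and the default system text are illustrative choices, not part of any SDK; the API itself accepts the structured list, so the ChatML rendering is only there to show what the model sees.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, user_text: str,
                       system_text: str = "You are a helpful assistant.") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for gpt-3.5-turbo."""
    payload = {
        "model": "gpt-3.5-turbo",
        # Each message carries a role; the model was fine-tuned on this structure.
        "messages": [
            {"role": "system", "content": system_text},
            {"role": "user", "content": user_text},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def to_chatml(messages) -> str:
    """Render messages in the ChatML text layout (illustration only)."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>" for m in messages]
    # An open assistant turn at the end signals that the model should answer next.
    parts.append("<|im_start|>assistant\n")
    return "\n".join(parts)
```

Passing the result of `build_chat_request` to `urllib.request.urlopen` would actually send it, which requires a real API key.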

And last but not least: unless an organization opts in, data submitted through the API is no longer used for service upgrades (including model training). In other words, customer data stays out of future training by default!

The GPT models will be periodically updated and retrained on fresh data. On the one hand, this keeps them current and, in theory, each update should work a little better. On the other hand, if quality suddenly drops, you have already shot yourself in the foot: your carefully tuned prompts may stop working, and companies built on them may have to shut down. Businesses that rely on these models therefore need to understand the risks and have contingency plans in place in case something goes wrong. To offer some stability, OpenAI provides dated model snapshots: gpt-3.5-turbo-0301 became available on March 1 and is stated to be supported for about three months, through at least June 1, 2023.
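One possible contingency plan, sketched in Python: pin the dated snapshot while it is supported, and before moving to a newer model, re-run a set of saved prompts and flag the ones whose answers no longer contain what you expect. `choose_model`, `failing_prompts`, and the `call_model(model, prompt)` hook are hypothetical names; plug in your real API client. The June 1, 2023 date comes from the announcement and is not a guarantee.

```python
from datetime import date
from typing import Callable, Dict, List

# Hypothetical config: a dated snapshot plus the moving alias that OpenAI updates.
PINNED_MODEL = "gpt-3.5-turbo-0301"
PINNED_SUPPORTED_UNTIL = date(2023, 6, 1)  # per the announcement; verify in current docs
FLOATING_MODEL = "gpt-3.5-turbo"


def choose_model(today: date) -> str:
    """Use the dated snapshot while it is supported, then fall back to the alias."""
    return PINNED_MODEL if today <= PINNED_SUPPORTED_UNTIL else FLOATING_MODEL


def failing_prompts(call_model: Callable[[str, str], str], model: str,
                    cases: Dict[str, str]) -> List[str]:
    """Return prompts whose answer no longer contains the expected text.

    call_model(model, prompt) is a hypothetical hook around your real API client.
    The comparison is a case-insensitive substring check.
    """
    return [prompt for prompt, expected in cases.items()
            if expected.lower() not in call_model(model, prompt).lower()]
```

A substring check is deliberately crude, but even this catches "magical prompts" that silently broke after a model update, before your users notice.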

The API is now open to the public; we tested it again, and it does indeed work. You can now build your own ChatGPT in a single evening without paying for a ChatGPT subscription, paying only for API usage.
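The "ChatGPT in an evening" idea mostly comes down to one detail: the Chat Completions API does not remember earlier turns, so your app keeps the conversation history and resends it with every request. Below is a minimal sketch using only the Python standard library. The `Chat` class, the `send_to_api` helper, and the `OPENAI_API_KEY` environment variable name are illustrative choices; the request and response fields (`model`, `messages`, `choices[0].message.content`) follow the public API reference, which is worth checking before relying on them.

```python
import json
import os
import urllib.request
from typing import Callable, Dict, List

Message = Dict[str, str]
API_URL = "https://api.openai.com/v1/chat/completions"


def send_to_api(messages: List[Message]) -> str:
    """POST the whole history to the Chat Completions endpoint; return the reply text."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps({"model": "gpt-3.5-turbo", "messages": messages}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the key is stored in this environment variable.
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


class Chat:
    """Keeps the running conversation, because the API itself is stateless."""

    def __init__(self, send: Callable[[List[Message]], str] = send_to_api,
                 system: str = "You are a helpful assistant."):
        self.send = send
        self.history: List[Message] = [{"role": "system", "content": system}]

    def ask(self, text: str) -> str:
        # Record the user's turn, send everything so far, then record the reply.
        self.history.append({"role": "user", "content": text})
        reply = self.send(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

With a valid key set, `Chat().ask("How do I make fish tacos?")` returns the assistant's reply and appends both turns to `history`; passing a stub `send` function lets you exercise the loop offline. Long conversations will eventually exceed the model's context window, so a real app would need to trim or summarize older turns.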

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, the Metaverse, and Web3-related fields. His articles attract an audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to succeed in the ever-changing landscape of the internet.