VideoDirectorGPT: An AI-Powered Director Reshaping Text-to-Video Production

Turning written prompts into coherent visual narratives is a central challenge in text-to-video generation, a field where new models keep emerging. Unlike traditional filmmaking, the task calls for its own set of skills, closer to directing than to shooting, and reliably generating the objects that belong in each scene is hard in its own right.

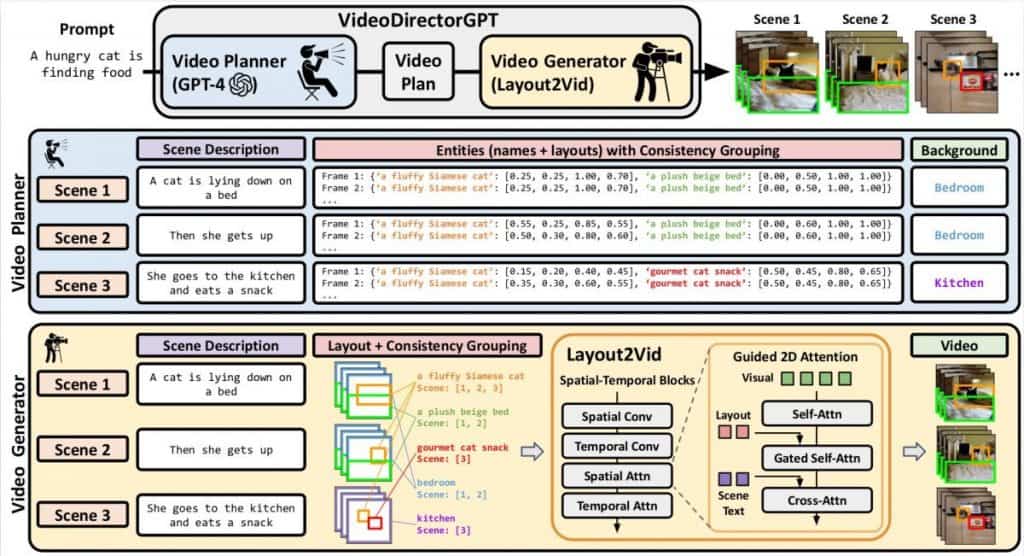

To address this, VideoDirectorGPT offers an approach for generating precise and consistent multi-scene videos. At its core is a two-stage method that combines the capabilities of Large Language Models (LLMs) with video planning.

LLM-Guided Planning

In the first stage, VideoDirectorGPT uses an LLM as a video planner. The LLM drafts the overall plan for the multi-scene video. This plan consists of scene-level text descriptions, lists of the objects and backgrounds in each scene, frame-by-frame object layouts given as bounding boxes, and consistency groupings that mark which objects and backgrounds should look the same across scenes.
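The four parts of the plan listed above can be pictured as a small data structure. The sketch below is only an illustration: the class names, field names, and the normalized `(x0, y0, x1, y1)` box convention are assumptions made for this example, not the format VideoDirectorGPT actually uses.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# (x0, y0, x1, y1), normalized to [0, 1]. This convention is an assumption.
BBox = Tuple[float, float, float, float]


@dataclass
class Scene:
    description: str        # scene-level text description
    entities: List[str]     # objects that appear in the scene
    background: str         # background of the scene
    # One dict per frame, mapping an entity name to its bounding box.
    layouts: List[Dict[str, BBox]] = field(default_factory=list)


@dataclass
class VideoPlan:
    scenes: List[Scene]
    # Entity or background name -> indices of scenes where it should look the same.
    consistency_groups: Dict[str, List[int]] = field(default_factory=dict)


def validate(plan: VideoPlan) -> List[str]:
    """Return a list of problems found in the plan; an empty list means it is well formed."""
    problems = []
    for i, scene in enumerate(plan.scenes):
        for f, frame in enumerate(scene.layouts):
            for name, (x0, y0, x1, y1) in frame.items():
                if name not in scene.entities:
                    problems.append(f"scene {i} frame {f}: unknown entity {name!r}")
                if not (0 <= x0 < x1 <= 1 and 0 <= y0 < y1 <= 1):
                    problems.append(f"scene {i} frame {f}: bad box for {name!r}")
    for name, indices in plan.consistency_groups.items():
        for i in indices:
            if not 0 <= i < len(plan.scenes):
                problems.append(f"group {name!r}: scene index {i} out of range")
    return problems


# A hypothetical two-scene plan with one frame of layout per scene.
plan = VideoPlan(
    scenes=[
        Scene(
            description="A cat sleeps on a sofa",
            entities=["cat", "sofa"],
            background="living room",
            layouts=[{"cat": (0.1, 0.5, 0.4, 0.9), "sofa": (0.0, 0.4, 1.0, 1.0)}],
        ),
        Scene(
            description="The cat walks to the window",
            entities=["cat"],
            background="living room",
            layouts=[{"cat": (0.5, 0.4, 0.8, 0.9)}],
        ),
    ],
    consistency_groups={"cat": [0, 1], "living room": [0, 1]},
)
```

Calling `validate(plan)` on the example returns an empty list. A check of this kind is one plausible way to catch malformed LLM output before it reaches the video generator.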

Layout2Vid Video Generation

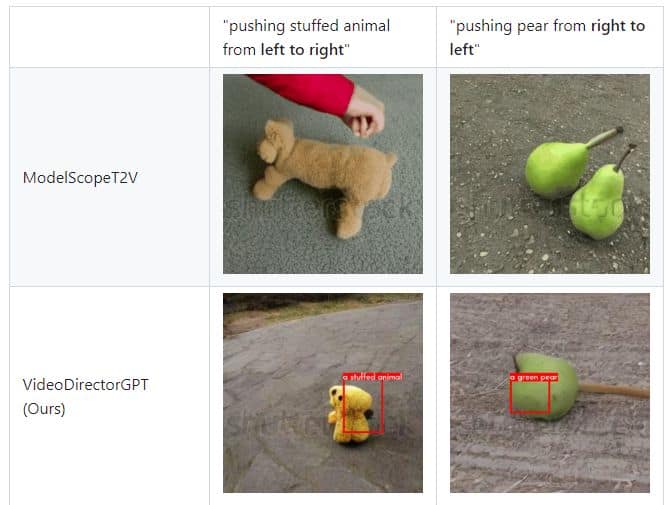

Once the LLM has produced the video plan, Layout2Vid, the video generation module, turns it into video. Building on the plan from the first stage, Layout2Vid uses shared image and text embeddings to represent the objects and backgrounds in the plan, so that the same entity is depicted consistently.

Notably, it also provides spatial control over object layouts through a 2D attention mechanism integrated into its spatial attention unit.
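To give a feel for what spatial control from a layout involves, the sketch below maps a normalized bounding box onto a grid of spatial positions, the kind of grid a 2D attention layer operates over. It is a deliberately simplified stand-in and not Layout2Vid's attention mechanism; the function name `box_to_mask` and the cell-center rule are assumptions made for this example.

```python
from typing import List, Tuple

# (x0, y0, x1, y1), normalized to [0, 1]. This convention is an assumption.
BBox = Tuple[float, float, float, float]


def box_to_mask(box: BBox, height: int, width: int) -> List[List[int]]:
    """Mark each grid cell whose center lies inside the box with 1, all others with 0."""
    x0, y0, x1, y1 = box
    mask = []
    for row in range(height):
        cy = (row + 0.5) / height  # vertical center of this row of cells
        mask.append([
            1 if (x0 <= (col + 0.5) / width <= x1 and y0 <= cy <= y1) else 0
            for col in range(width)
        ])
    return mask


# A box covering the top-left quarter of the frame, on a 4x4 grid.
mask = box_to_mask((0.0, 0.0, 0.5, 0.5), height=4, width=4)
for row in mask:
    print(row)
```

The example marks the four top-left cells of the grid. A layout-conditioned model could use a signal like this to tie an object's embedding to a region of the frame, though how Layout2Vid does so internally is not described in this article.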

The result is a video that follows the initial text descriptions and translates them into dynamic visual sequences. Combining LLM-driven planning with layout-controlled rendering helps keep the generated content aligned with the creator's vision.

In August, Yandex introduced a feature called Masterpiece, which lets users create short videos of up to 4 seconds at 24 frames per second, or at most 96 frames per clip. The technology uses a cascaded diffusion method to generate successive video frames that match the user's description. Masterpiece is designed to be simple and accessible to users at any skill level, including novices. Beyond creative expression, the technology could change how digital content is created and consumed.

Earlier this year, Runway also released Gen-2, a text-to-video model that can generate new videos from scratch using only a text prompt, a significant improvement over the previous version. Because it requires no advanced editing skills, it saves time and effort. Gen-2 can also convert an uploaded image into a short video clip, reportedly at higher quality than competing tools. The technology is expected to make creating and sharing content easier on social media platforms such as Facebook and TikTok.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
