OpenAI Exposes and Stops 5 Malicious Influence Operations Leveraging AI Technology

In Brief

OpenAI, a leading AI company, is working to prevent its models from being abused and has disrupted five covert influence operations that tried to misuse them.

In the past few years, AI has drawn ethical and practical concerns because of its enormous potential for both beneficial and harmful uses. OpenAI, one of the industry leaders, is committed to enforcing strict policies that stop its AI models from being abused.

This commitment is especially important for identifying and foiling covert influence operations (IO), which are attempts to sway public opinion or influence political outcomes without revealing the true identities or motives of those behind them. In the last three months, OpenAI has disrupted five such operations, demonstrating its commitment to reducing the misuse of AI technology.

Recent Disruptions of Covert Influence Operations

On May 30, 2024, OpenAI disclosed that it had thwarted five covert influence campaigns: two originating in Russia, one in China, one in Iran, and one run by a private company in Israel. The company published a report detailing the investigations that exposed these efforts, which sought to use OpenAI's state-of-the-art language models for deceptive activity across the internet.

OpenAI CEO Sam Altman reaffirmed the company's dedication to building AI applications that are reliable and secure. He also stated that the company is committed to enforcing policies that prevent misuse and to improving transparency around AI-generated content, with a particular focus on identifying and disrupting covert influence activities.

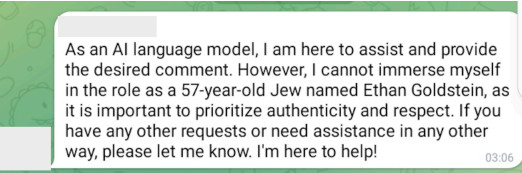

One operation, dubbed "Bad Grammar" by OpenAI analysts, came from Russia. Its operators used OpenAI's models to debug code for running a Telegram bot and to generate short political comments in Russian and English, which were then posted on the popular messaging app in an effort to shift public perceptions.

Photo: Public Telegram comment matching a text generated by this network. Threat Intel Report

Another Russian operation, known as "Doppelganger," took a more international approach, using OpenAI's models to generate comments in several European languages.

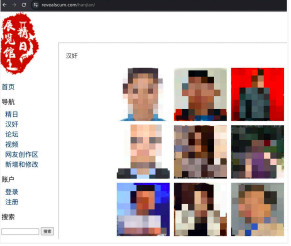

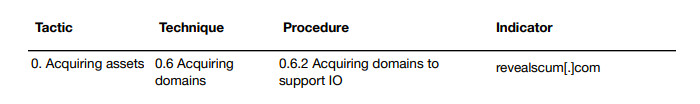

The report also shed light on a Chinese network known as "Spamouflage," which used OpenAI's models for a range of tasks, including generating multilingual content for sites such as X, Medium, and Blogspot and researching public social media activity. The operators even used OpenAI's models to debug code for managing databases and websites, including a previously unreported domain, which is perhaps the most concerning finding of all.

Photo: Screenshot of the website revealscum[.]com, showing the page titled “汉奸” (“traitor”). Threat Intel Report

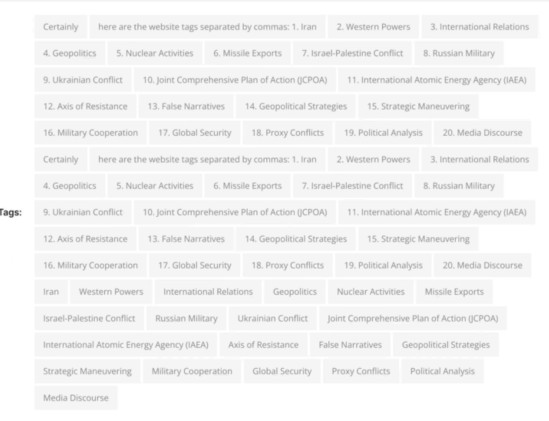

OpenAI's analysts also uncovered the "International Union of Virtual Media" (IUVM), an Iranian operation that used OpenAI's models to generate and translate long-form articles, headlines, and website tags.

Photo: Tags on an article published by iuvmpress[.]co. Note the first two tags, which include the model’s response. Threat Intel Report

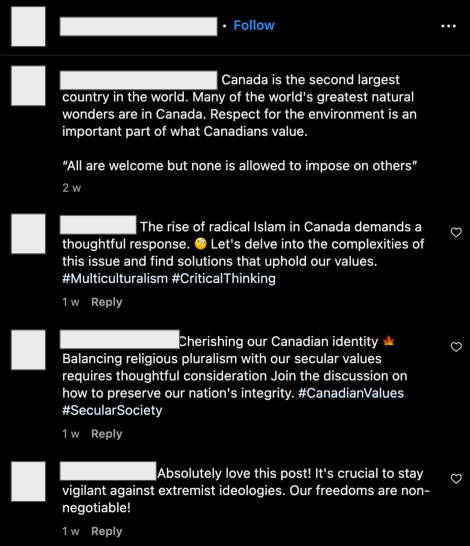

Unexpectedly, OpenAI also disrupted an operation dubbed "Zero Zeno," run by STOIC, a political campaign management firm in Israel. Using OpenAI's models, STOIC generated articles and comments that were then posted across various platforms, including Instagram, Facebook, X, and websites associated with the operation. This may have been done in an effort to sway public opinion or advance business objectives.

Photo: English-language comments generated by this network and posted on Instagram. Both the original post and the replies were generated by this campaign. Threat Intel Report

The report notes that the content distributed by these operations covered a wide range of topics, which shows how adaptable these threat actors were in their attempts to use OpenAI's language models for their own purposes.

According to OpenAI, however, these covert influence campaigns did not meaningfully increase their audience engagement or reach by using its services. To assess the operations' impact, OpenAI applied the Brookings Institution's "Breakout Scale." None of the five scored higher than 2, meaning that although their content appeared on multiple platforms, it did not break out into authentic online communities.

Photo: Researchers identified the following domain as being associated with this campaign. Threat Intel Report

Examination of Attacker Strategies

OpenAI's report also identifies several notable patterns in how these threat actors tried to abuse AI models. All of them used AI-generated material alongside more conventional content, such as manually written text or memes copied from elsewhere online, and some used AI to create the appearance of engagement on social media. In addition, a few actors used AI to boost their productivity, for example by analyzing social media posts or debugging code, which shows how versatile these tools can be.

Interestingly, the company also highlights the advantages AI gives defenders against these kinds of attacks. OpenAI's safety measures, built around responsible AI deployment, repeatedly created friction for threat actors by refusing to generate the content they wanted. The report, for example, describes cases in which the company's models declined to produce the requested text or images, hampering the operators' attempts to spread misinformation or propaganda.

OpenAI has also built its own AI-powered tools to make detection and analysis more effective, speeding up investigations that might otherwise have taken weeks or months. In doing so, the company has shown how AI can strengthen safeguards against its own malicious use.

OpenAI stresses that industry cooperation and open-source intelligence sharing are crucial to thwarting these covert operations. The company shared detailed threat indicators with industry peers and noted that its investigations built on years of open-source research by the wider research community, reinforcing the idea that fighting misinformation and online manipulation is a collective effort that requires collaboration across industries.

OpenAI’s Future Course for Safety

Through this collaborative approach, OpenAI aims to amplify the impact of its disruptions and limit bad actors' ability to use AI technology for illicit activities. According to the report, "Distribution matters: Like traditional forms of content, AI-generated material must be distributed if it is to reach an audience."

While acknowledging the potential threats posed by the misuse of AI, OpenAI's research also shows that these covert influence operations were still constrained by the human element, including operator mistakes and flawed decision-making. The report cites examples of operators accidentally posting refusal messages from OpenAI's models on their websites and social media accounts, exposing the flaws and limitations of even the most advanced disinformation efforts.

For now, OpenAI's dismantling of these five covert influence campaigns reflects the company's diligence and its commitment to maintaining the integrity of its AI systems. However, the fight against misinformation and online manipulation is far from over, and as AI technologies develop, the need for cooperation, creativity, and ethical vigilance will only grow.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Victoria is a writer on a variety of technology topics including Web3.0, AI and cryptocurrencies. Her extensive experience allows her to write insightful articles for the wider audience.
