MusicLM: a new text-to-music and image-to-music AI model from Google

In Brief

Google introduces MusicLM, a model for generating high-fidelity music from text descriptions.

MusicLM can be conditioned on both text and a melody: it can transform whistled or hummed melodies to match the style described in a text caption.

The model can generate music in a variety of genres, including classical, jazz, and rock.

Google introduces MusicLM, a model for generating high-fidelity music from text descriptions such as “a calming violin melody backed by a distorted guitar riff.” MusicLM casts the process of conditional music generation as a hierarchical sequence-to-sequence modeling task, and it generates music at 24 kHz that remains consistent over several minutes.

Google's experiments show that MusicLM outperforms previous systems in both audio quality and adherence to the text description. They also demonstrate that MusicLM can be conditioned on both text and a melody: it can transform whistled or hummed melodies to match the style described in a text caption. To support future research, Google has publicly released MusicCaps, a dataset of 5.5k music-text pairs with rich text descriptions provided by human experts.


MusicLM was trained on a large corpus of music audio rather than written scores, which allowed it to learn musical structure directly from recordings. The model can generate music in a variety of genres, including classical, jazz, and rock, and it can create new, original compositions.

MusicLM is an important development in AI-generated music. It represents a significant advance over previous models, which were limited to shorter pieces or could only generate simple melodies. The new model opens up the possibility of using AI to generate long, complex pieces of music for movies, video games, or other media.

The new AI model can generate continuous pieces of music up to five minutes long.
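To give a sense of scale, here is a rough back-of-the-envelope sketch of how many audio samples a clip contains at the 24 kHz output rate mentioned above. It assumes single-channel (mono) audio, and the function name `num_samples` is purely illustrative; it is not part of MusicLM or any Google API.

```python
# Output sample rate stated in the article (24 kHz).
SAMPLE_RATE_HZ = 24_000


def num_samples(duration_seconds: float, sample_rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Return the number of samples in a mono clip of the given duration.

    Assumption: one channel; multiply by the channel count for stereo.
    """
    return int(round(duration_seconds * sample_rate_hz))


thirty_seconds = num_samples(30)
five_minutes = num_samples(5 * 60)

print(thirty_seconds)  # 720000
print(five_minutes)    # 7200000
```

A five-minute piece therefore corresponds to about 7.2 million individual samples, which helps explain why keeping the output coherent over that length is treated as a notable result.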

The AI model can create music using captions from games and movies.

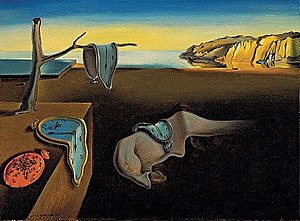

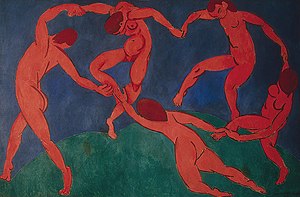

In addition, the AI model can generate music from images by conditioning on their text descriptions, such as captions of paintings.



About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
