Elon Musk Shockingly Withdraws Bombshell Lawsuit Against OpenAI Amid Intensifying Legal Battles Over Generative AI Copyright Concerns

In Brief

Elon Musk dropped his legal action against OpenAI and CEO Sam Altman one day before a California judge was set to hear OpenAI’s request to dismiss the lawsuit.

Elon Musk has withdrawn his high-profile lawsuit against the artificial intelligence company OpenAI and its CEO, Sam Altman. The move came just one day before a California judge was scheduled to consider OpenAI’s request to dismiss the contentious lawsuit, which Musk filed in February 2024.

Musk’s legal team did not give a reason for ending the lawsuit, which accused OpenAI of betraying its founding mission of developing AI for the good of humanity rather than for financial gain. Because the case was dismissed “without prejudice,” however, the Tesla CEO may still file a new case at a later time.

The withdrawal marks an anticlimactic finale, at least for the time being, to a months-long court fight that has heightened long-simmering tensions between Musk and the AI research company he co-founded in 2015 and left several years later. It also brings a pause in one of the rapidly multiplying legal battles around generative AI as businesses race to gain a foothold in this game-changing technology.

Legal experts had highly anticipated the Musk v. OpenAI case because it was expected to explore important questions about the ownership, ethics, and commercialization of artificial intelligence. However, those disclosures will have to wait while the rival parties meet in private.

The case centered on Musk’s allegation that OpenAI “set the founding agreement aflame” by putting financial gain ahead of its original goal of conducting open, honest, and charitable AI research. He sought to make OpenAI release its technology to the public and to prevent the company from using it for the financial benefit of Microsoft and other investors.

OpenAI strongly denied Musk’s claims, describing them as irrational and as an attempt to further his own agenda after he had watched OpenAI’s technological advances, such as ChatGPT.

Legal Turbulence Surrounding Generative AI

The demise of Musk’s lawsuit does little to calm the growing legal unrest engulfing generative AI businesses such as OpenAI, Google, Meta, and Amazon. As the capabilities of large language models such as ChatGPT drew international attention, claims began to accumulate that the models were trained by improperly ingesting copyrighted content.

Various content producers began to push back. AI companies were sued for allegedly stealing text, pictures, code, and song lyrics to feed their ravenous machine-learning engines without permission or payment.

In just the first few months of 2024, high-profile figures and companies launched copyright complaints against AI giants like OpenAI, alleging their intellectual property was misused for commercial gain. They include:

- Comedian Sarah Silverman;

- Author Ta-Nehisi Coates;

- News outlets The Intercept, Raw Story, and AlterNet;

- Music publishers Universal, ABKCO, and Concord;

- The estate of legendary comic George Carlin.

Judges have been navigating complex matters such as fair-use defenses and claims of irreparable harm.

Amid the commotion caused by the lawsuits, some AI companies have tried to avoid the mess by negotiating license agreements with content creators. In exchange for payments, the Associated Press, Shutterstock, Axel Springer, and other parties agreed to let AI models be trained on their archives and libraries.

The lawsuits kept coming nonetheless. Reuters reported that three writers sued Nvidia in March, alleging that it used copies of their works without their consent to train AI. The New York Times had already filed a sweeping lawsuit against Microsoft and OpenAI in December 2023, alleging that they were diverting visitors and revenue from its website.

Still, some claims have struggled to gain traction in court. In February, a federal judge dismissed the majority of a multiparty complaint filed against OpenAI by content creators including Silverman and Coates.

Increased Regulatory Scrutiny over Generative AI

Beyond the private lawsuits, government authorities around the globe are keeping a close eye on generative AI as they grapple with an invention that upends established intellectual property frameworks.

In January 2024, the U.S. Federal Trade Commission launched an investigation into the artificial intelligence sector, requesting information from OpenAI, Microsoft, Google, and other companies about their relationships, acquisitions, investments, and data usage. According to reports, the Securities and Exchange Commission has also begun looking into claims that OpenAI deceived investors about its operations.

Europe has also moved decisively to rein in the rapid growth of AI. The AI Act, the first comprehensive legal framework governing the development and use of artificial intelligence, was provisionally agreed by the European Union in late 2023. It classifies AI systems according to their level of risk, expressly prohibiting the most problematic uses and imposing transparency requirements on systems like ChatGPT.

Thus far, the Biden administration has refrained from enacting any comparable binding laws. However, with the technology growing ever more pervasive across society, the President issued an executive order in 2023 directing government agencies to set strict guidelines that give AI safety and ethics top priority.

Demands for an International AI Governance Structure

As lawsuits mount and authorities scramble, calls are growing for a comprehensive global governance framework that would set clear criteria for AI development and for appropriate applications of the technology.

The World Health Organization has recommended a brief hold on the release of more powerful AI models, stating that unrestrained deployment may endanger public health or perhaps even cause human extinction. More than 1,000 technology executives made a similar appeal, calling for a six-month global pause to allow time to create effective risk mitigation procedures.

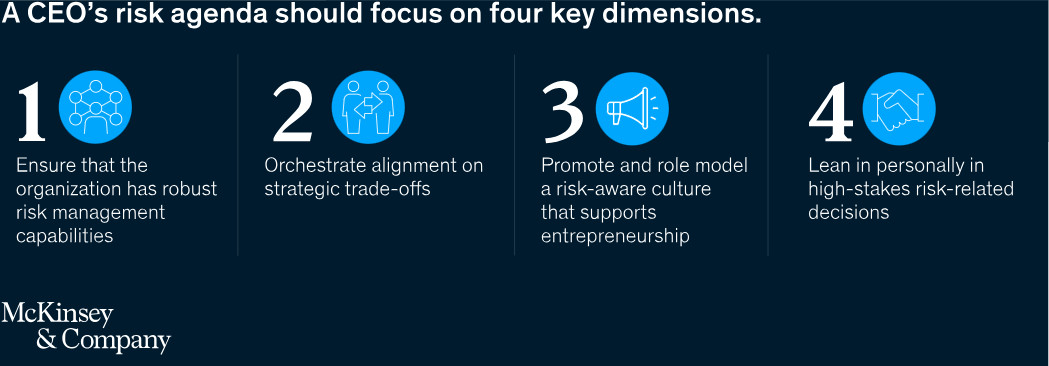

Photo: McKinsey&Company

Sam Altman, the CEO of OpenAI, has become a prominent voice in favor of AI oversight. In testimony before Congress in April 2024, Altman stated that OpenAI and academics from Stanford University were working together to create an AI constitution that would uphold social norms and safeguard society.

Altman said it was essential that revolutionary AI systems, including ones far more powerful than ChatGPT, be developed correctly, and warned of unfavorable consequences if they were not.

It remains to be seen if such audacious goals translate into workable global policy. However, the generative AI genie is now out of the bottle, and the ongoing legal disputes highlight how important it is to find a long-term solution that strikes a balance between innovation and moral restraints.

The future is uncertain for Musk as well. After criticizing OpenAI’s commercial motives, the eccentric billionaire drew international controversy in July 2023 when he founded his own AI company, xAI.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Victoria is a writer on a variety of technology topics including Web3.0, AI and cryptocurrencies. Her extensive experience allows her to write insightful articles for the wider audience.
