Meta AI Develops an Algorithm That Enables Robots to Learn Tasks from YouTube Videos

In Brief

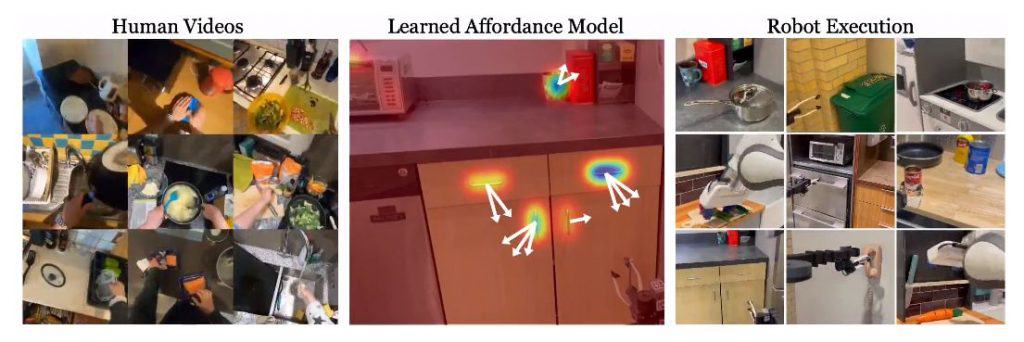

Researchers have developed a visual affordance model using internet videos of human behavior to train robots to perform complex tasks.

This approach bridges the gap between static datasets and real-world robot applications.

The researchers use large-scale human video datasets like Ego4D and Epic Kitchens to extract affordances, integrating computer vision techniques with robotic manipulation.

The Vision-Robotics Bridge (VRB) concept showcases the potential of this approach, enabling robots to learn from human videos and acquire the skills necessary for complex tasks.

Meta AI unveiled a new algorithm that enables robots to learn and replicate human actions by watching YouTube videos. In a recent paper entitled “Affordances from Human Videos as a Versatile Representation for Robotics,” the authors explore how videos of human interactions can be leveraged to train robots to perform complex tasks.

This research aims to bridge the gap between static datasets and real-world robot applications. While previous models have shown success on static datasets, applying them directly to robots has remained a challenge. The researchers propose that training a visual affordance model on internet videos of human behavior could be a solution. The model estimates where and how a human is likely to interact with a scene, which gives a robot useful cues about where to make contact and how to move afterward.
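To make the "where" part concrete, here is a minimal sketch of reading contact candidates out of a model's output. It assumes the model produces a 2D heatmap of contact likelihood per pixel. The heatmap layout and the `top_k_contacts` helper are illustrative assumptions, not the paper's actual interface.

```python
from typing import List, Tuple

Point = Tuple[int, int]  # (row, col) pixel coordinates


def top_k_contacts(heatmap: List[List[float]], k: int) -> List[Point]:
    """Return the k highest-scoring pixels of a contact heatmap, best first.

    `heatmap` is a hypothetical model output: heatmap[r][c] is the
    estimated likelihood that a human would touch pixel (r, c).
    """
    scored = [
        (score, (r, c))
        for r, row in enumerate(heatmap)
        for c, score in enumerate(row)
    ]
    # Sort by score only; ties keep their original scan order.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [point for _, point in scored[:k]]


if __name__ == "__main__":
    toy_heatmap = [
        [0.1, 0.7],
        [0.9, 0.2],
    ]
    print(top_k_contacts(toy_heatmap, 2))
```

A robot controller could then treat the returned pixels as candidate places to reach for, in order of preference.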

The concept of “affordances” is central to this approach. Affordances refer to the potential actions or interactions an object or environment offers. By understanding affordances through human videos, the robot gains a versatile representation that enables it to perform various complex tasks. The researchers integrate their affordance model with four different robot learning paradigms: offline imitation learning, exploration, goal-conditioned learning, and action parameterization for reinforcement learning.
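As a rough illustration of the action-parameterization idea, the sketch below turns one predicted affordance (a contact pixel plus a post-contact direction) into a short list of waypoints a controller could follow. The function name, the pixel-space waypoints, and the `step` and `n_steps` parameters are all assumptions made for this example. The article does not describe how the authors encode actions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels


def affordance_to_waypoints(
    contact: Point,
    direction: Tuple[float, float],
    step: float = 10.0,
    n_steps: int = 3,
) -> List[Point]:
    """Build waypoints: the contact point, then n_steps points along `direction`.

    `direction` is normalized first, so `step` is the spacing in pixels.
    """
    norm = math.hypot(direction[0], direction[1])
    if norm == 0.0:
        raise ValueError("direction must be a non-zero vector")
    dx, dy = direction[0] / norm, direction[1] / norm
    x, y = contact
    return [(x + i * step * dx, y + i * step * dy) for i in range(n_steps + 1)]


if __name__ == "__main__":
    # Touch at pixel (100, 50), then move right in two 10-pixel steps.
    print(affordance_to_waypoints((100.0, 50.0), (1.0, 0.0), step=10.0, n_steps=2))
```

In a real system these pixel waypoints would still need to be converted to 3D robot coordinates, which depends on camera calibration and is outside the scope of this sketch.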

To extract affordances, the researchers utilize large-scale human video datasets like Ego4D and Epic Kitchens. They employ off-the-shelf hand-object interaction detectors to identify the contact region and track the wrist’s trajectory after contact. However, an important challenge arises when the human is still present in the scene, causing a distribution shift. To address this, the researchers use available camera information to project the contact points and post-contact trajectory to a human-agnostic frame, which serves as input to their model.
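The projection step can be sketched as follows, under the assumption that the camera motion between the frame where contact is detected and a human-free reference frame is summarized by a 3x3 homography matrix. The article only says that "available camera information" is used, so the homography, the `project_points` helper, and the example matrix are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]          # (x, y) in image pixels
Matrix3 = Sequence[Sequence[float]]  # 3x3 homography, row-major


def project_points(points: List[Point], H: Matrix3) -> List[Point]:
    """Map pixel coordinates into another frame with a homography H.

    Each point is lifted to homogeneous coordinates (x, y, 1), multiplied
    by H, and divided by the resulting third component.
    """
    projected = []
    for x, y in points:
        u = H[0][0] * x + H[0][1] * y + H[0][2]
        v = H[1][0] * x + H[1][1] * y + H[1][2]
        w = H[2][0] * x + H[2][1] * y + H[2][2]
        if abs(w) < 1e-12:
            raise ValueError("point maps to infinity under this homography")
        projected.append((u / w, v / w))
    return projected


if __name__ == "__main__":
    # Hypothetical case: the camera view shifted by (+5, -2) pixels.
    H_shift = [
        [1.0, 0.0, 5.0],
        [0.0, 1.0, -2.0],
        [0.0, 0.0, 1.0],
    ]
    contact_and_trajectory = [(10.0, 20.0), (12.0, 24.0)]
    print(project_points(contact_and_trajectory, H_shift))
```

Applying the same mapping to both the contact points and the wrist trajectory keeps them consistent with each other in the reference frame.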

Previously, robots could mimic demonstrated actions, but only in the specific environments they had been trained in. With the latest algorithm, the researchers report significant progress in “generalizing” robot actions, so that robots can apply acquired knowledge in new and unfamiliar environments. This achievement aligns with the vision of achieving Artificial General Intelligence (AGI) as advocated by AI researcher Yann LeCun.

Meta AI is committed to advancing the field of computer vision and is planning to share its project’s code and dataset. This will enable other researchers and developers to further explore and build upon this technology. With increased access to the code and dataset, the development of self-learning robots capable of acquiring new skills from YouTube videos will continue to progress.

By leveraging the vast amount of online instructional videos, robots can become more versatile and adaptable in various environments.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
