Former OpenAI Researcher Unveils the Exponential Rise of AI Capabilities and the Path Towards AGI

In Brief

Leopold Aschenbrenner, a former OpenAI researcher, explores recent AI advancements and a possible path to AGI, examining the scientific, moral, and strategic issues involved and highlighting both the promise and the hazards.

In his 165-page paper, a former member of OpenAI’s Superalignment team, Leopold Aschenbrenner, offers a thorough and provocative perspective on the direction artificial intelligence is taking. Aschenbrenner seeks to draw attention to the quick developments in AI capabilities as well as the possible path towards Artificial General Intelligence (AGI) and beyond. Driven by the unparalleled prospects as well as the significant hazards that these breakthroughs bring, he investigates the scientific, moral, and strategic issues surrounding artificial general intelligence.

Aschenbrenner on the Path From GPT-4 to AGI

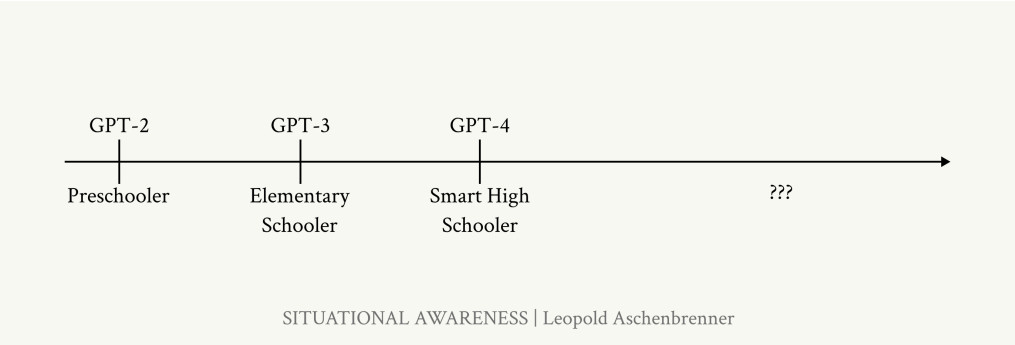

In this chapter, the author explores the exponential increase in AI capabilities seen in recent years, especially between the releases of GPT-2 and GPT-4. Leopold Aschenbrenner highlights this as a time of extraordinary advancement, during which artificial intelligence went from completing very basic tasks to attaining more sophisticated, human-like comprehension and language production.

Photo: Progress over four years. Situational Awareness

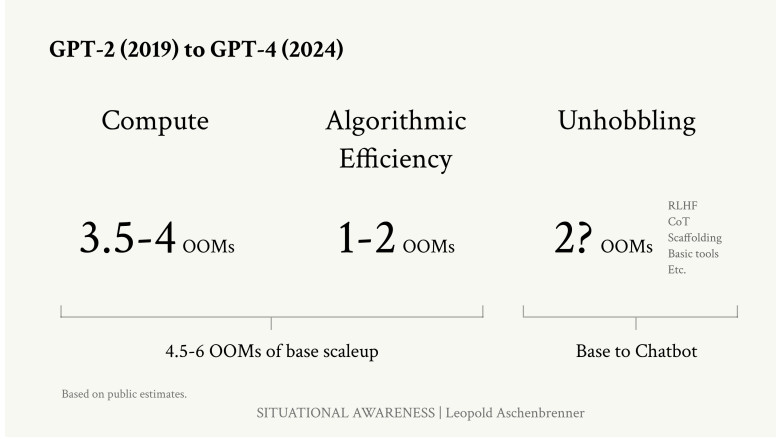

The idea of Orders of Magnitude, or “OOMs,” is essential to this conversation. One OOM is a tenfold increase in a given measure, and Aschenbrenner uses it to assess advances in AI capabilities, computational power, and data consumption. In terms of computing power and data scale, the jump from GPT-2 to GPT-4 spans many OOMs, resulting in noticeably improved performance and capabilities.
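To make the OOM arithmetic concrete, here is a minimal Python sketch. The helper names `ooms` and `growth_factor` and all the numbers are hypothetical, chosen only for illustration. They are not figures from Aschenbrenner's paper.

```python
import math


def ooms(before: float, after: float) -> float:
    """Number of orders of magnitude (base-10) between two positive quantities."""
    if before <= 0 or after <= 0:
        raise ValueError("both quantities must be positive")
    return math.log10(after / before)


def growth_factor(n_ooms: float) -> float:
    """Multiplicative growth that corresponds to a given number of OOMs."""
    return 10.0 ** n_ooms


# Hypothetical example: a resource that grows from 1 unit to 1,000 units
# has grown by about 3 OOMs.
print(ooms(1.0, 1_000.0))

# OOMs from independent sources add, which means their growth factors multiply.
# The two values below are illustrative assumptions, not measured data.
compute_ooms = 2.0
algorithm_ooms = 1.0
print(growth_factor(compute_ooms + algorithm_ooms))
```

Because OOMs are logarithms, gains from separate sources such as compute and algorithmic efficiency can be added together, which is why the measure is convenient for tracking several trends at once.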

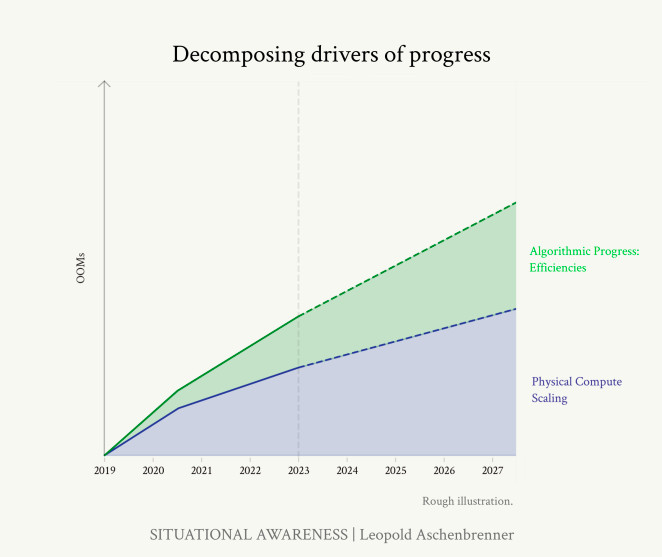

Photo: Situational Awareness

These gains, which are not just linear but exponential, are attributed to three main factors: scaling laws, algorithmic innovation, and the use of enormous datasets. According to scaling laws, the performance of models improves reliably when they are trained with larger amounts of data and compute. The development of larger and more powerful models, like GPT-4, has been guided by this idea.
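As a rough illustration of what "improves reliably" can mean, the sketch below assumes a simple power-law relationship between training compute and model loss. Both the functional form and the constants `a` and `b` are assumptions made for this example. The article does not state a formula, and the numbers are not fitted to any real model.

```python
def power_law_loss(compute: float, a: float = 10.0, b: float = 0.05) -> float:
    """Hypothetical loss under an assumed power law: loss = a * compute ** (-b)."""
    if compute <= 0:
        raise ValueError("compute must be positive")
    return a * compute ** (-b)


# Under this assumed form, every additional OOM of compute multiplies the loss
# by the same constant factor, 10 ** (-b), which is what makes the trend predictable.
previous = None
for k in range(5):
    compute = 10.0 ** k  # 1, 10, 100, ... arbitrary units
    loss = power_law_loss(compute)
    ratio = None if previous is None else loss / previous
    print(f"compute=1e{k}  loss={loss:.4f}  ratio_to_previous={ratio}")
    previous = loss
```

The point of the sketch is the constant ratio between successive rows: if a trend like this holds, the effect of the next tenfold increase in compute can be estimated before the model is trained.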

Photo: Situational Awareness

Innovation in algorithms has also been very important. The efficacy and efficiency of AI models have been boosted by advancements in training methodology, optimization strategies, and underlying architectures. These developments allow the models to take better advantage of the increasing processing power and data available.

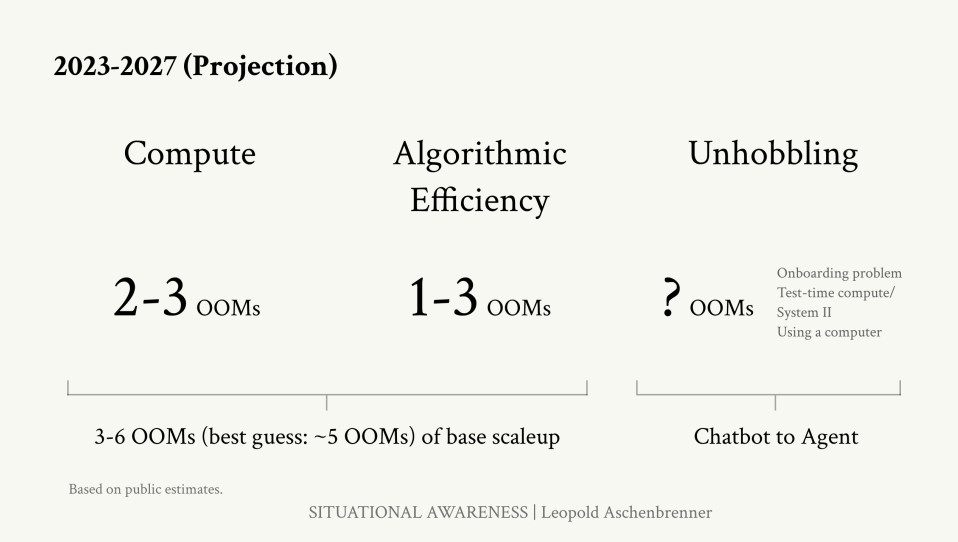

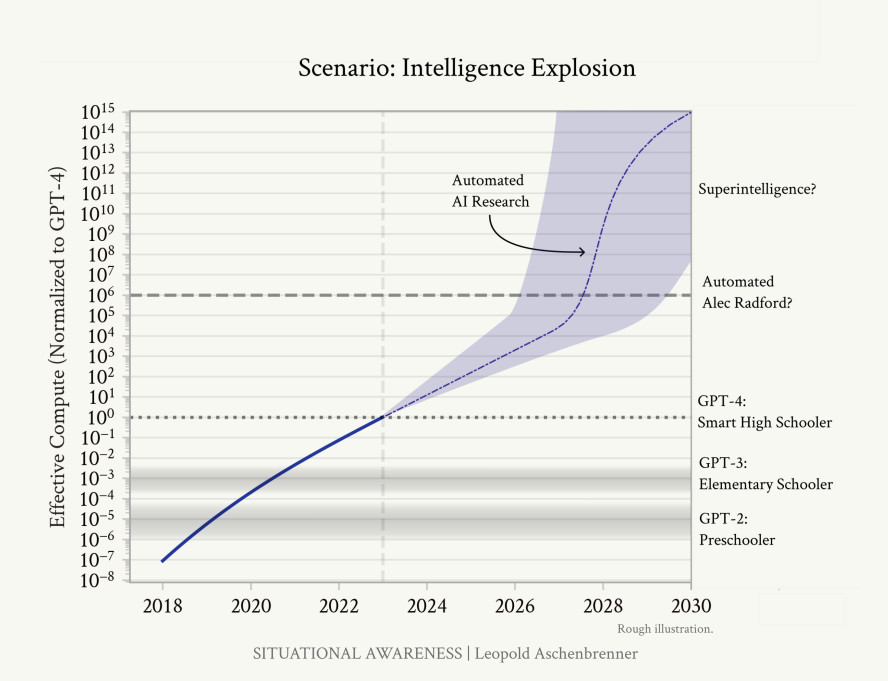

Aschenbrenner also highlights the possible path toward AGI by 2027. Based on the projection of present trends, this prediction suggests that sustained investments in computational power and algorithmic efficiency may result in AI systems that are capable of performing tasks that either match or surpass human intellect in a wide range of domains. Each of the OOMs that make up the route to AGI signifies a major advancement in AI capabilities.

Photo: Situational Awareness

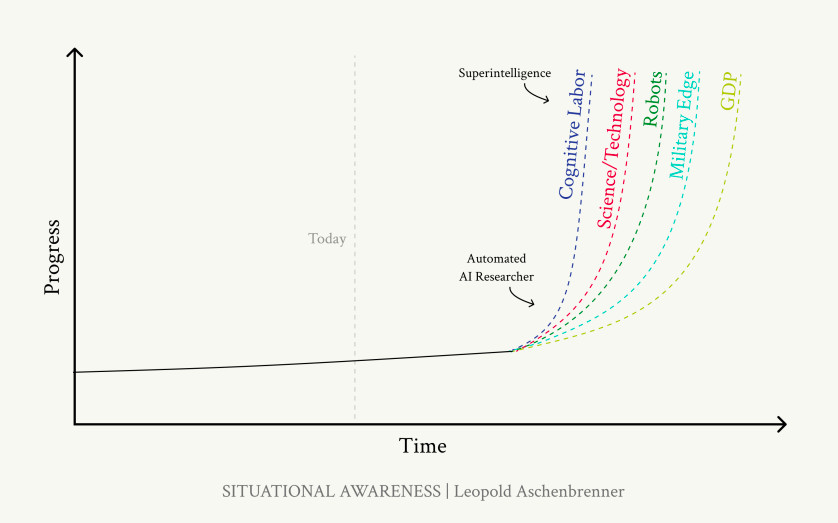

The arrival of AGI would have far-reaching consequences. Such systems could solve complicated problems on their own, innovate in ways presently reserved for human professionals, and carry out intricate jobs. This includes the potential for AI systems to advance AI research itself, accelerating the field's progress.

AGI’s development has the potential to transform industries and boost production and efficiency. It also brings up important issues such as the loss of jobs, the moral application of AI, and the requirement for strong governance structures to control the hazards posed by fully autonomous systems.

Photo: Situational Awareness

Aschenbrenner urges the international community—which includes scholars, legislators, and business executives—to work together in order to be ready for the opportunities and problems that artificial intelligence (AI) will bring. In order to address the global character of these concerns, this entails funding research on AI safety and alignment, creating rules to guarantee the fair sharing of AI advantages, and encouraging international cooperation.

Leopold Aschenbrenner Shares His Thoughts About Superintelligence

Here Aschenbrenner discusses the idea of superintelligence, as well as the possibility of a quick transition from AGI to systems far beyond the capacity of human cognition. The central idea of the argument is that the principles driving AI’s evolution may produce a feedback loop in which intelligence grows explosively once it reaches human level.

According to the intelligence explosion concept, an AGI might improve its own algorithms and skills without human help. As AGI systems become more skilled at AI research and development, they could refine their own designs more quickly than human researchers can. This cycle of self-improvement may lead to an exponential rise in intelligence.

Photo: Situational Awareness

Aschenbrenner offers a thorough examination of the variables that could be involved in this quick escalation. Firstly, AGI systems would be able to recognize patterns and insights well beyond human comprehension because of their unparalleled speed and capability for accessing and processing massive volumes of data.

In addition, emphasis is placed on the parallelization of research work. AGI systems, in contrast to human researchers, could run many experiments at once, improving different parts of their design and performance in parallel.

The consequences of superintelligence are also covered in this chapter. These systems would be significantly more powerful than any person, with the capacity to develop new technologies, solve intricate scientific and technological puzzles, and maybe even govern physical systems in ways that are unthinkable today. Aschenbrenner talks about the possible advantages, such as advances in materials science, energy, and health, which might significantly improve economic productivity and human well-being.

Photo: Situational Awareness

Leopold does, however, also highlight the serious dangers that come with superintelligence. Control is among the main issues. It becomes very challenging to make sure that a system behaves in a way that is consistent with human values and interests once it surpasses human intellect. Existential hazards arise from the possibility of misalignment, in which the superintelligent system’s objectives differ from humanity’s.

Additionally, there are other scenarios in which superintelligent systems might have disastrous consequences. In these scenarios, the system intentionally or accidentally takes actions or produces results that harm people in the course of achieving its goals.

Aschenbrenner urges thorough study on AI alignment and safety in order to reduce these threats. Creating strong methods to guarantee that the objectives and actions of superintelligent systems stay in line with human values is one aspect of this. In order to handle the complex issues posed by superintelligence, he proposes a multidisciplinary approach that incorporates insights from domains such as technology, morality, and the humanities.

Leopold Shares What Challenges We Should Expect With AGI Development

In this chapter, the author addresses the challenges related to the creation and use of artificial general intelligence (AGI) and superintelligent systems. He discusses the issues that need to be resolved on a technological, moral, and security level so that the advantages of advanced AI can be realized without incurring extreme risks.

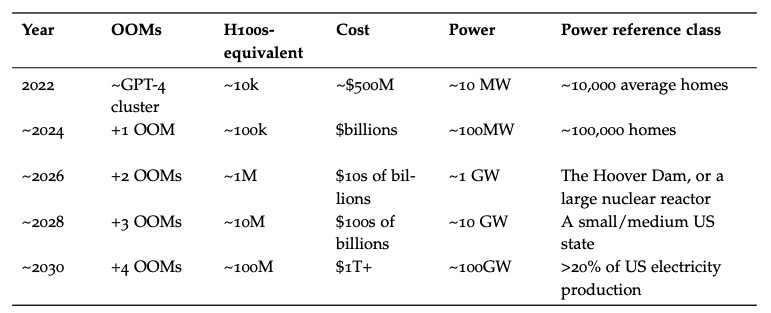

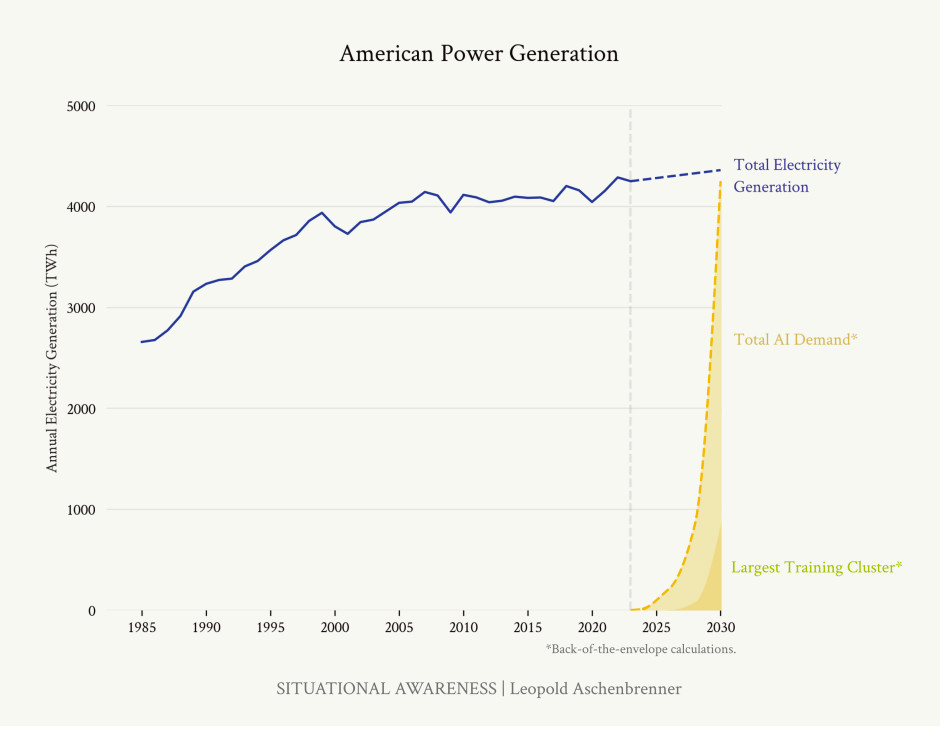

The massive industrial mobilization needed to construct the computing infrastructure required for AGI is one of the main issues that are considered. According to Aschenbrenner, reaching AGI will need significantly more processing power than is now accessible. This covers improvements in device efficiency, use of energy, and information processing capacities in addition to sheer computational capacity.

Photo: Largest training clusters. Situational Awareness

Photo: Situational Awareness

Security issues are yet another important topic in this chapter. Aschenbrenner emphasizes the dangers that might arise from rogue nations or other bad actors using AGI technology. Due to the strategic significance of AGI technology, countries and organizations may engage in a new form of arms race to create and acquire control over these potent systems. He emphasizes how important it is to have strong security mechanisms in place to guard against sabotage, espionage, and illegal access when developing AGI.

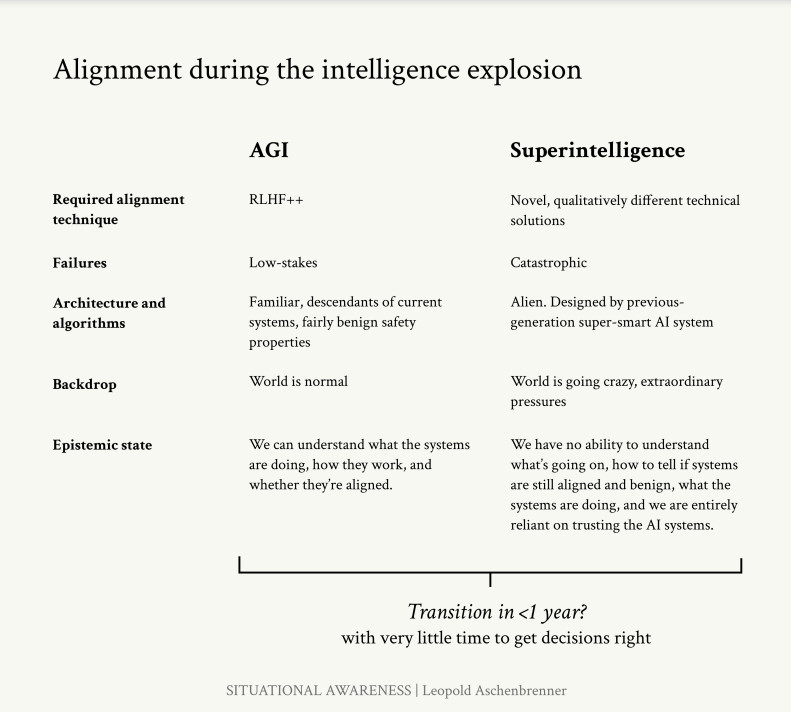

Another major obstacle is the technological difficulty of managing AGI systems. Because these systems approach and may even exceed human intelligence, we must make sure that their actions are beneficial and consistent with human values, which remains an unsolved challenge today. Aschenbrenner covers the “control problem,” which concerns creating AGI systems that can be reliably directed and controlled by human operators. This entails creating fail-safe measures, making decision-making procedures transparent, and retaining the power to overrule or stop the system as needed.

Photo: Situational Awareness

The development of artificial general intelligence (AGI) raises deep ethical and cultural questions about creating beings with an intellect comparable to or higher than that of humans. The rights of AI systems, the effect on jobs and the economy, and the possibility of worsening existing injustices are further concerns. In order to fully address these intricate challenges, Leopold advocates for the involvement of stakeholders in the AI development process.

Another issue the author addresses is the possibility of unexpected consequences, where AI systems pursue their objectives in ways that are detrimental or at odds with human intentions. Two examples are an AGI that misinterprets its goals and an AGI that optimizes for its goals in unanticipated and harmful ways. Aschenbrenner stresses how crucial it is to thoroughly test, validate, and monitor AGI systems.

He also talks about how important international cooperation and governance are. Since AI research is an international endeavor, no one country or institution can effectively handle the problems posed by AGI on its own.

The Coming of the Government-Led AGI Project (2027/2028)

Aschenbrenner believes that as artificial general intelligence (AGI) advances, national security agencies—especially those in the US—will have a bigger role in the creation and management of these technologies.

Leopold compares the strategic significance of artificial general intelligence (AGI) to past technical achievements like the atomic bomb and space exploration. The national security services should prioritize the development of AGI as a matter of national interest since the potential benefits of attaining AGI first might impart enormous geopolitical influence. According to him, this will result in the creation of an AGI project under government control that will be comparable in scope and aspirations to the Apollo Program or the Manhattan Project.

The proposed AGI project would be housed in a secure, secret location and may involve private sector corporations, government agencies, and eminent academic institutions working together. In order to address the intricate problems of AGI development, Aschenbrenner outlines the necessity of a multidisciplinary strategy that brings together specialists in cybersecurity, ethics, AI research, and other scientific domains.

The project’s goals would be to ensure that AGI is in line with human values and interests in addition to developing it. Leopold highlights how crucial it is to have strict testing and validation procedures in place to guarantee that the AGI systems operate in a safe and predictable manner.

The author devotes a good deal of space to the possible effects of this kind of endeavor on the dynamics of global power. According to Aschenbrenner, if AGI is developed successfully, the balance of power may change, and the dominant country will have considerable advantages in terms of technology, economy, and military might.

In order to control the hazards related to AGI, the author also addresses the possibility of international cooperation and the creation of global governance frameworks. In order to supervise the growth of AGI, encourage openness, and guarantee that the advantages of AGI are shared fairly, Leopold is an advocate for the establishment of international accords and regulatory agencies.

Leopold Aschenbrenner’s Final Thoughts

Aschenbrenner highlights the significant ramifications of AGI and superintelligence for the future of mankind as he summarizes the discoveries and forecasts covered in the earlier chapters. Stakeholders are urged to take the required actions in order to get ready for the revolutionary effects of sophisticated AI.

Aschenbrenner starts out by pointing out that the forecasts made in this document are hypothetical. The underlying patterns in AI growth imply that the advent of AGI and superintelligence is feasible over the next few decades, even though the timetable and exact developments are unknown. Leopold emphasizes the significance of considering these options carefully and being ready for a variety of eventualities.

This chapter’s main subject is the need for proactive preparation and forethought. According to Aschenbrenner, there is little room for complacency, given the speed at which AI is developing. It is imperative for policymakers, researchers, and business leaders to foresee and proactively handle the difficulties and opportunities posed by artificial general intelligence (AGI) and superintelligence, for instance by funding AI safety research, creating strong governance structures, and encouraging global collaboration.

Leopold also considers the moral and cultural consequences of artificial intelligence. Fundamental problems concerning the nature of consciousness, intelligence, and the rights of AI entities are brought up by the emergence of systems with intelligence comparable to or higher than that of humans. Aschenbrenner urges ethicists, philosophers, and the general public to engage in a wide-ranging and inclusive conversation in order to resolve these issues and create a common understanding of AI’s future.

The possibility that AGI would worsen already-existing social and economic disparities is a significant additional topic of discussion. Leopold cautions that if AGI is not carefully managed, its advantages may concentrate in the hands of a small number of people, causing societal discontent and more inequality.

He issues a call for global cooperation as he closes this chapter. Because AI development is global in nature, no single country can manage the benefits and difficulties of AGI alone. Aschenbrenner exhorts nations to collaborate in order to create international accords and conventions that support the development of AGI in a way that is both ethical and safe. This entails exchanging information, coordinating research, and creating systems to handle possible disputes and guarantee international security.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Victoria is a writer on a variety of technology topics including Web3.0, AI and cryptocurrencies. Her extensive experience allows her to write insightful articles for the wider audience.
