Deloitte Predicts Explosive Rise in Fraud Losses: Generative AI Could Cost US Financial Institutions $40 Billion by 2027

In Brief

In January 2024, a staff member at a Hong Kong company transferred $25 million to scammers, highlighting the growing threat that AI-driven fraud poses to the financial sector and its clients.

In January 2024, a staff member at a Hong Kong-based company transferred US$25 million to scammers after being deceived by a deepfake video that impersonated the company's head of finance and several colleagues. The incident illustrates the growing risk of sophisticated, hard-to-detect AI-driven fraud in the financial sector. Criminals are using generative AI to produce convincing deepfakes, synthetic voices, and forged documents, putting banks and their clients in serious danger.

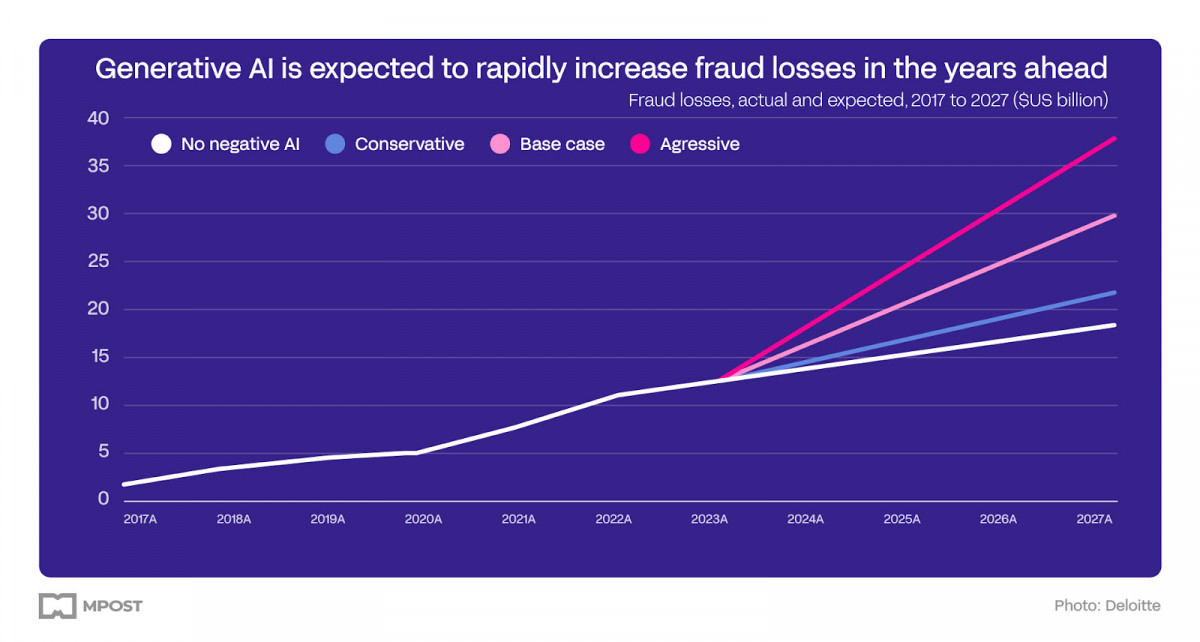

This episode offers a grim preview of the increasingly hostile environment that financial institutions now face. Deloitte's Center for Financial Services forecasts that fraud losses in the US could reach an astounding $40 billion by 2027, up from $12.3 billion in 2023, which Deloitte describes as a compound annual growth rate of 32%.

Photo: Deloitte
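The growth rate ties the 2023 and 2027 loss figures together through the standard compound annual growth rate (CAGR) formula. The Python sketch below checks that arithmetic, assuming annual compounding over the four years from 2023 to 2027. The function names `cagr` and `project` are illustrative, not part of any Deloitte model. Taken at face value, $12.3 billion and $40 billion imply a rate of roughly 34% a year, while compounding $12.3 billion at 32% gives about $37 billion; the gap may reflect rounding or a different baseline in Deloitte's underlying projection, which this sketch does not attempt to reproduce.

```python
# Sketch: checking the compound annual growth rate (CAGR) arithmetic for the
# loss figures quoted above. Assumes simple annual compounding from 2023 to 2027.

def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual rate that grows start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Compound start_value at a constant annual rate for the given number of years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    base_2023 = 12.3    # US$ billions, 2023 fraud losses as quoted in the article
    target_2027 = 40.0  # US$ billions, Deloitte's 2027 projection as quoted
    years = 2027 - 2023  # four compounding periods (assumption)

    implied_rate = cagr(base_2023, target_2027, years)
    print(f"Implied annual growth rate: {implied_rate:.1%}")

    projected = project(base_2023, 0.32, years)
    print(f"$12.3B compounded at 32% for {years} years: ${projected:.1f}B")
```

Separating the two functions makes it easy to run the calculation in either direction: solving for the rate given both endpoints, or projecting forward from a stated rate.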

Generative AI’s Disruptive Impact

Generative AI's disruptive potential stems from its ability to create highly convincing synthetic media, including deepfake videos, fictitious voices, and forged documents. Because these models keep improving, their output becomes steadily harder to distinguish from genuine material, outpacing traditional detection systems that flag fraud based on predefined rules and patterns.

Moreover, generative AI tools are readily available on the dark web, where a thriving underground marketplace sells scamming software for anywhere from $20 to thousands of dollars. This easy access has made many existing anti-fraud tools less effective, leaving financial institutions scrambling to adapt.

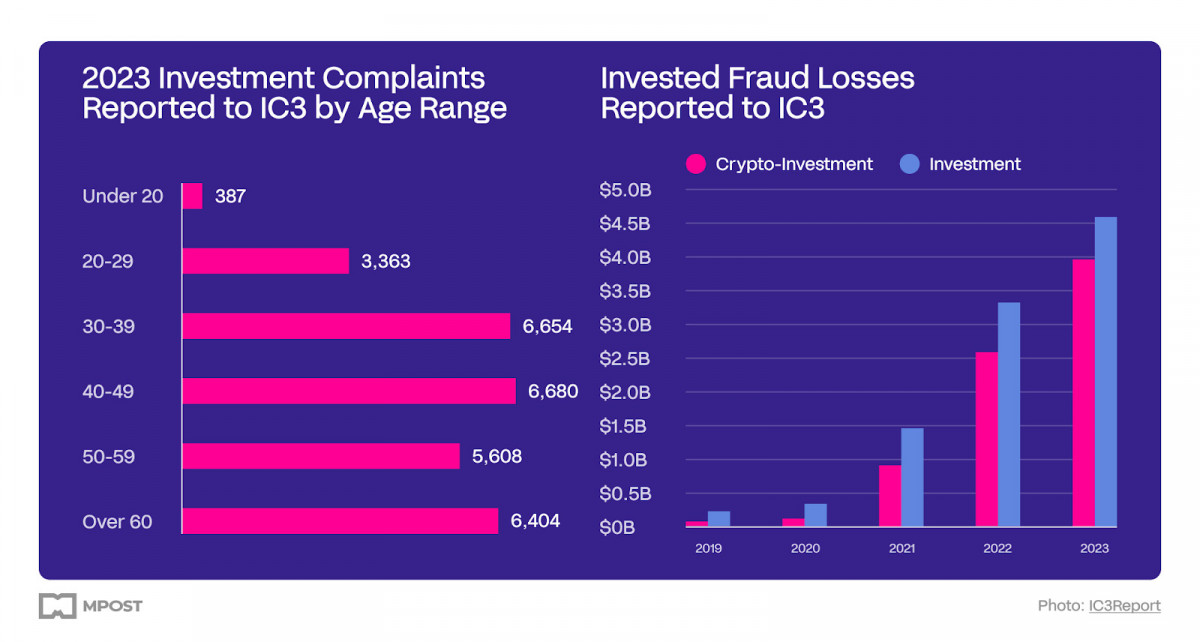

Photo: 2023 crime types, IC3Report

Business email compromise (BEC) attacks are one area particularly exposed to generative AI fraud. The FBI documented 21,832 BEC cases in 2022 alone, with losses estimated at over $2.7 billion. In an "aggressive adoption" scenario, Deloitte projects that generative AI could push email fraud losses to over $11.5 billion by 2027.

Deepfakes Threaten Identity Verification

Deepfake technology also threatens identity verification procedures, which have historically been regarded as a security stronghold. According to recent research, the finance industry saw a startling 700% spike in deepfake incidents in 2023 alone. Meanwhile, the tech industry has been worryingly slow to develop reliable tools for detecting fake audio, leaving a serious gap in defenses.

Photo: IC3Report

Banks have traditionally been early adopters of new technologies to fight fraud, but a U.S. Treasury report warned that "existing risk management frameworks may not be adequate to cover emerging AI technologies." Institutions are already racing to build machine learning and artificial intelligence into their fraud detection and response systems, automating processes so that suspicious activity can be identified and investigated more quickly.

To strengthen its defenses against credit card fraud, Mastercard's Decision Intelligence engine examines billions of data points to predict whether a transaction is genuine, while JPMorgan has integrated large language models to detect signs of email compromise.

Developing a Holistic Defense Plan

To stay competitive as the generative AI fraud landscape keeps shifting, financial institutions need a multipronged approach. Combining human intuition with modern technology is crucial to anticipating and thwarting scammers' new tactics. Because no single solution can fully address the problem and threats are always evolving, anti-fraud teams need to adopt a culture of continuous learning and adaptation.

Photo: IC3Report

Future-proofing institutions against fraud will require a comprehensive rethink of governance, resource allocation, and strategy. Collaboration both within and beyond the financial sector will be essential, because generative AI fraud threatens entire organizations. By working with trusted third-party technology providers, defining clear responsibilities, and resolving liability questions, banks can develop effective responses.

Strengthening customer education and awareness is also essential to building resilience against fraud. Regular touchpoints, such as push notifications in banking apps, can warn clients of potential threats and explain the precautions their financial institutions are taking to protect their money.

As regulators pay closer attention to the benefits and risks of generative AI, institutions are actively participating in shaping new rules. By involving compliance teams early in the technology development process, banks can maintain thorough records of their systems and procedures, which eases regulatory oversight and helps them keep pace with changing requirements.

Investing in Talent and Ongoing Innovation

Above all, banks need to prioritize investment in people, hiring and training staff to recognize, stop, and report AI-assisted fraud. Although these investments may strain budgets in the short term, they are necessary to stay ahead of a fast-evolving adversary.

Building a workforce that understands the subtleties of generative AI fraud takes a multidisciplinary strategy, drawing on fields such as data science, cybersecurity, and behavioral analytics. By encouraging information sharing across these disciplines, financial institutions can equip staff to identify new threats and mitigate them proactively.

Banks may also consider combining in-house engineering teams with contract staff and outside vendors to build proprietary fraud detection technology. This mix makes efficient use of resources and allows institutions to respond quickly to new challenges.

David Birch, Director of Consult Hyperion, has stressed that financial institutions need a solid plan for tackling AI-driven identity theft, describing identification as the first line of defense. He added that identification systems must be able to withstand and adapt to constantly evolving fraud schemes in order to protect both the service's reputation and its legitimate clients.

Financial institutions need to prepare for a protracted struggle as generative AI continues to reshape the fraud landscape. By adopting a proactive, multidimensional approach that combines state-of-the-art technology with human expertise, regulatory compliance, and industry collaboration, institutions can bolster their defenses against a growing number of highly sophisticated AI-driven fraud schemes.

With potential losses projected to reach $40 billion in the United States alone by 2027, the stakes are enormous. However, by prioritizing investment in people, technology, and collaboration, financial institutions can reduce these risks, protect the integrity of their operations, and help preserve their clients' trust in an increasingly complex digital environment.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Victoria is a writer on a variety of technology topics including Web3.0, AI and cryptocurrencies. Her extensive experience allows her to write insightful articles for the wider audience.
