AI Crypto’s Leading Builders Unite to Launch AI Benchmarking Alliance

In Brief

The Crypto AI Benchmark Alliance (CAIBA) aims to evaluate the effectiveness of AI agents in real-world tasks, preparing them for the next generation of wallets and researchers.

AI agents are easily the most notable tech newcomer of 2025. Everyone is building them, but no one is grading their homework. Now, that is starting to change. Fourteen top crypto projects – from Cyber to EigenLayer to Thirdweb – have teamed up to launch the Crypto AI Benchmark Alliance (CAIBA).

Their goal is to create a crypto-native benchmarking standard that evaluates how well AI models can think, plan, and act on-chain. They have just presented their first benchmark: CAIA. It goes beyond testing simple metrics and instead throws agents into real-world tasks like transaction workflows and tokenomics research. Because if these agents are going to power the next generation of wallets and research tools, they have to be able to reason like a crypto analyst.

Who’s Behind CAIBA?

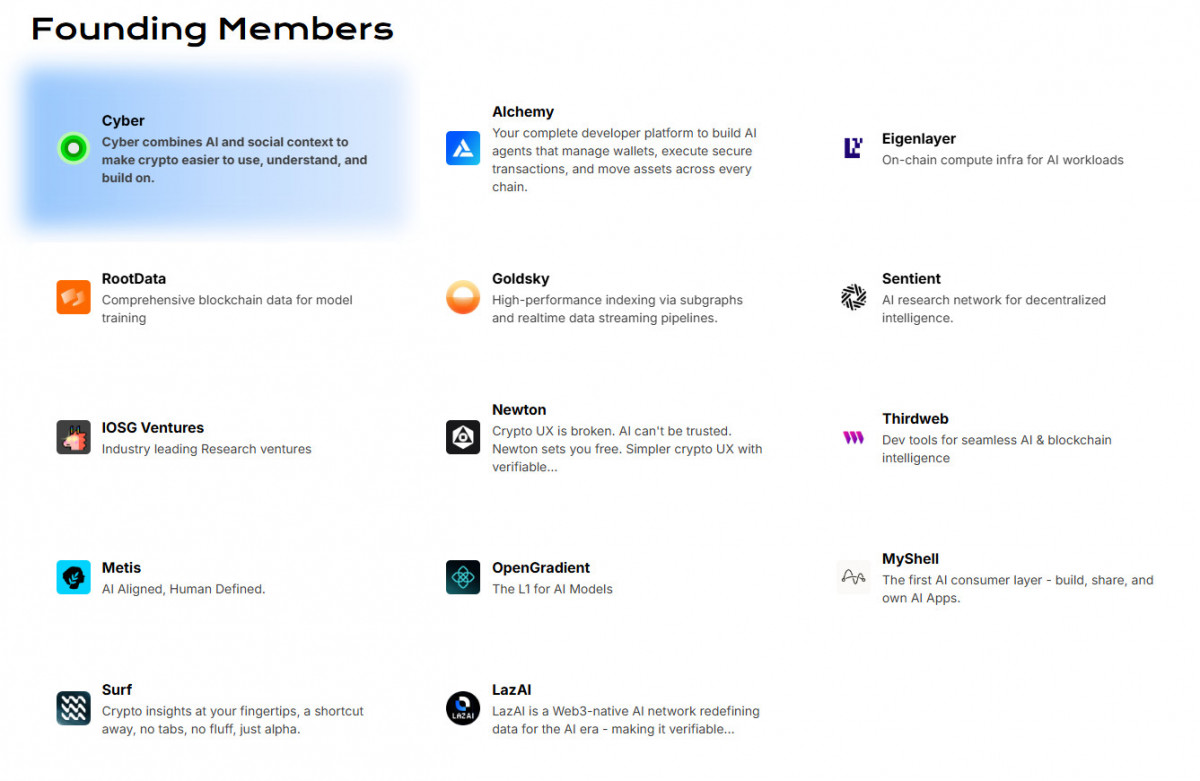

CAIBA is backed by a diverse coalition of crypto’s most forward-thinking builders in AI, data, and infrastructure. The founding members span the entire crypto AI stack: from protocol-level compute to real-time data streaming, dev tooling, and UX innovation. Together, these projects are building a transparent benchmarking framework grounded in real-world use.

Here’s who’s involved (and what they do):

- Cyber – Combines AI and social context to make crypto easier to use, understand, and build on.

- Alchemy – A full-stack dev platform for building AI agents that can execute multichain transactions.

- EigenLayer – Onchain compute infrastructure for AI workloads.

- RootData – Blockchain data tailored for AI model training.

- Goldsky – High-performance indexing via subgraphs and real-time data streaming pipelines.

- Sentient – Decentralized AI research network.

- IOSG Ventures – Research-driven crypto VC focusing on frontier innovation.

- Newton – Reimagines crypto UX with AI and verifiability at the core.

- Thirdweb – Dev tools enabling seamless integration between AI models and blockchain apps.

- Metis – AI-aligned, human-defined systems for Web3.

- OpenGradient – Layer-1 blockchain optimized for AI model deployment.

- MyShell – The first AI consumer layer.

- Surf – Frictionless interface for crypto insights, focused on speed and signal.

- LazAI – Web3-native AI network redefining data with verifiability baked in.

This alliance is designed to grow. All members contribute code, datasets, and domain expertise, with deliverables published on GitHub and Hugging Face under open licenses where possible.

CAIBA’s founding members (source)

CAIBA’s strength lies in its structure: open, composable, and led by the builders shaping the crypto AI ecosystem from the ground up.

Why Crypto Needs Its Own AI Benchmarks

AI models are getting better every month, but most benchmarks still assume we’re on GPT-3. That’s not today’s reality. In 2025, crypto AI agents analyze tokenomics, spot suspicious transactions, research protocols, and interact with tools like block explorers or wallet APIs. That’s not something even GPT-4 or Claude 4 was trained for, at least not natively.

And while mainstream AI benchmarks like MMLU or Arena test general reasoning or factual knowledge, they don’t reflect how AI is actually used in Web3, where tasks require onchain data, live context, multi-step planning, and the ability to act reliably.

That’s the gap CAIBA was formed to close. “Transparent, rigorous testing is essential,” said Ryan Li, co-founder of Cyber. “Models must not only answer correctly but also act reliably so users can make decisions with confidence.”

CAIBA’s benchmarks are designed around this real-world usage. They test models on crypto-native tasks, from verifying contract data to diagnosing governance mechanics. They judge the process, not just the output: Did the model plan correctly? Did it use the right tools? Did it follow through?

CAIBA’s First Framework: CAIA

CAIBA’s first release is the Crypto AI Agent Benchmark, or CAIA. It’s a test designed to answer the following question: can an AI agent actually do the job of a junior crypto analyst?

CAIA evaluates models across three core areas:

- Knowledge — Can the agent accurately answer questions about tokens, protocols, and governance structures?

- Planning — Can it map out multi-step tasks, like comparing staking yields or analyzing token vesting schedules?

- Action — Can it interact with real tools like block explorers or APIs to follow through?

Each benchmark task mirrors real analyst workflows. This includes researching, diagnosing tokenomics, and tracing wallet behavior. Agents are tested not just on what they know, but how they think and execute.
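To make the three areas concrete, here is a minimal sketch of how a single agent run could be scored on knowledge, planning, and action. It is not CAIA's actual grading code: the `Task` and `AgentRun` shapes, the field names, and the scoring rules are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A hypothetical benchmark task with its reference solution."""
    prompt: str
    reference_answer: str
    reference_plan: list[str]   # expected steps, in order
    required_tools: set[str]    # tools a correct run should call


@dataclass
class AgentRun:
    """What an agent produced for one task (hypothetical shape)."""
    answer: str
    plan: list[str] = field(default_factory=list)
    tools_called: set[str] = field(default_factory=set)


def grade(task: Task, run: AgentRun) -> dict[str, float]:
    """Score one run on knowledge, planning, and action, each in [0, 1]."""
    # Knowledge: exact match after normalization (a deliberately crude check).
    same = run.answer.strip().lower() == task.reference_answer.strip().lower()
    knowledge = 1.0 if same else 0.0

    # Planning: fraction of reference steps found in the agent's plan, in order.
    pos, matched = 0, 0
    for step in task.reference_plan:
        try:
            pos = run.plan.index(step, pos) + 1
            matched += 1
        except ValueError:
            pass  # step missing (or out of order); keep scanning from pos
    planning = matched / len(task.reference_plan) if task.reference_plan else 1.0

    # Action: fraction of required tools the agent actually called.
    if task.required_tools:
        used = task.required_tools & run.tools_called
        action = len(used) / len(task.required_tools)
    else:
        action = 1.0

    return {"knowledge": knowledge, "planning": planning, "action": action}


# Illustrative data only; the task, steps, and tool names are made up.
task = Task(
    prompt="What share of the token supply is unlocked today?",
    reference_answer="42%",
    reference_plan=["fetch_vesting_schedule", "compute_unlocked", "compare_to_total"],
    required_tools={"block_explorer", "price_api"},
)
run = AgentRun(
    answer=" 42% ",
    plan=["fetch_vesting_schedule", "compare_to_total"],
    tools_called={"block_explorer"},
)
scores = grade(task, run)
```

In this made-up run the agent gets the right answer but skips a planning step and one required tool, so it scores lower on planning and action than on knowledge. That is the kind of process-level gap an output-only benchmark would miss.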

CAIA includes:

- Predefined tasks;

- Reference answers;

- Grading scripts.

Again, these are all released under open licenses on platforms like GitHub and Hugging Face, so anyone can replicate, remix, or improve the benchmark. Models currently being evaluated include general-purpose LLMs like GPT-4o, Claude 4, Gemini 2.5, and DeepSeek-1, as well as crypto-native models fine-tuned for on-chain reasoning and workflows.

Because of its focus on transparency and reproducibility, CAIA is more of a toolkit than a test. Builders can see exactly where an agent succeeds or fails, compare results across models, and use that data to improve performance over time.
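As a rough illustration of comparing results across models, the sketch below averages graded scores per model and per area, then reports each model's weakest area. The result rows, model names, and helper functions are hypothetical and do not reflect CAIA's published output format.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical graded results: (model, area, score in [0, 1]).
results = [
    ("model-a", "knowledge", 1.0),
    ("model-a", "planning", 0.5),
    ("model-a", "action", 0.0),
    ("model-a", "action", 0.5),
    ("model-b", "knowledge", 0.5),
    ("model-b", "planning", 1.0),
    ("model-b", "action", 1.0),
]


def scoreboard(rows):
    """Return {model: {area: mean score}} from flat result rows."""
    buckets = defaultdict(list)
    for model, area, score in rows:
        buckets[(model, area)].append(score)
    board = defaultdict(dict)
    for (model, area), scores in buckets.items():
        board[model][area] = mean(scores)
    return {model: dict(areas) for model, areas in board.items()}


def weakest_area(board, model):
    """Return the area where the given model has its lowest mean score."""
    return min(board[model], key=board[model].get)


board = scoreboard(results)
for model in sorted(board):
    print(model, board[model], "weakest:", weakest_area(board, model))
```

Keeping per-area averages rather than a single number is a design choice here: it lets a builder see that one model is weak at tool use while another is weak at recall, even if their overall scores are similar.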

How CAIA Impacts the Ecosystem

For developers, CAIA provides a clear, open framework to test their agents before shipping. For protocols and platforms, CAIA is a way to evaluate the AI models they’re integrating into products, whether it’s for research assistants, trading copilots, or automated governance bots. A high CAIA score means more trust and less risk.

For investors and researchers, it creates a shared scoreboard, making it easier to compare models, track improvements, and identify truly innovative projects in the sea of “AI-powered” claims. And for the broader Web3 ecosystem, CAIBA sets a precedent: that openness, reproducibility, and collaboration should be the standard for AI, just like they are for code.

What’s Next For CAIBA?

CAIA is just the beginning. The alliance has already begun work on additional benchmarks, each targeting new capabilities and agent types. Future releases may cover areas like contract auditing, DAO proposal analysis, or DeFi risk modeling, as AI continues to take on more specialized roles in crypto.

CAIBA is actively inviting developers, researchers, and protocols to get involved. Anyone can contribute by submitting a model, proposing new tasks, or improving the evaluation framework. And because everything is published under open licenses, you don’t need permission to start building.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Victoria is a writer on a variety of technology topics including Web3.0, AI, and cryptocurrencies. Her extensive experience allows her to write insightful articles for a wider audience.
