Unmasking Big Brother: The Controversial UK Trials of Amazon’s Rekognition Software

In Brief

UK train passengers reportedly had their faces scanned and their emotions analyzed by Amazon's Rekognition software during artificial intelligence trials overseen by Network Rail.

As part of wide-ranging artificial intelligence surveillance trials, thousands of unsuspecting train passengers in the UK have reportedly had their faces scanned and their emotions analyzed by Amazon's controversial Rekognition software. The trials, run over the past two years at major stations, were overseen by Network Rail, which owns and manages Britain's rail infrastructure.

The AI system estimated attributes such as age, gender, and emotional state from CCTV images, and the documents suggest this data could eventually be used for targeted advertising. Researchers have repeatedly warned that emotion analysis tools are unreliable, and privacy campaigners have described the lack of transparency and public consultation around their use as "concerning."

The Spread of Facial Recognition Surveillance

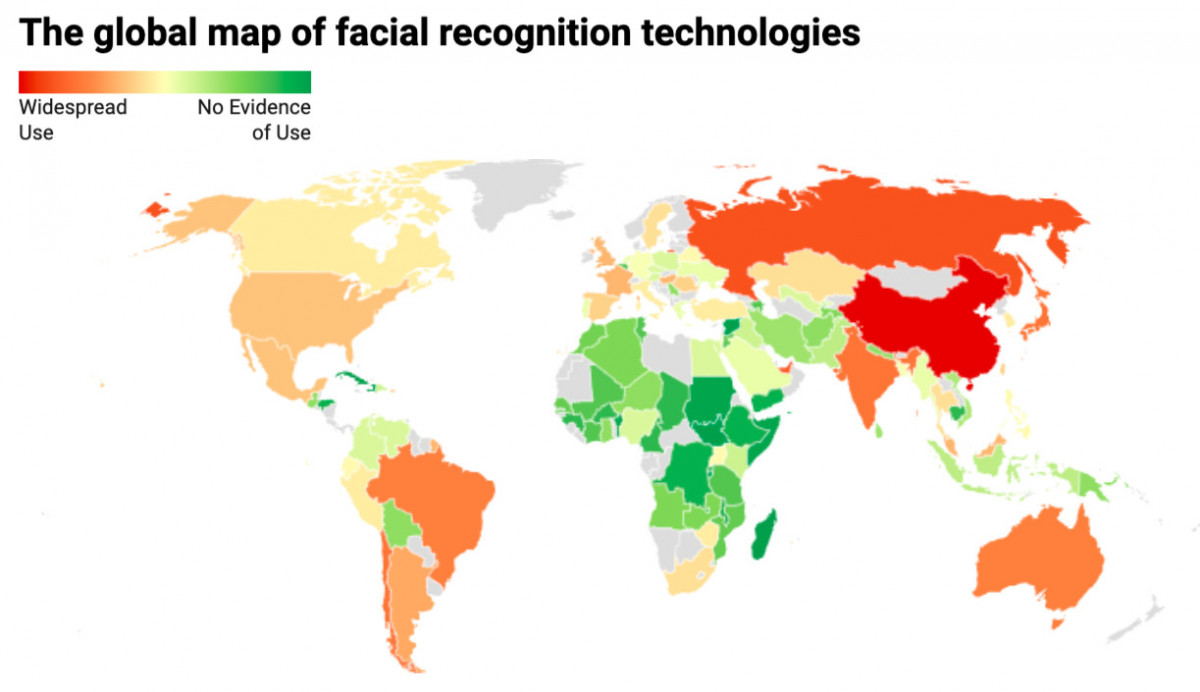

The UK trials are just one example of a fast-spreading global trend in which government agencies and businesses alike use AI video analytics and face recognition to monitor large populations. One analysis found that these contested technologies are in use, or approved for use, in more than 100 countries.

Photo: Comparitech

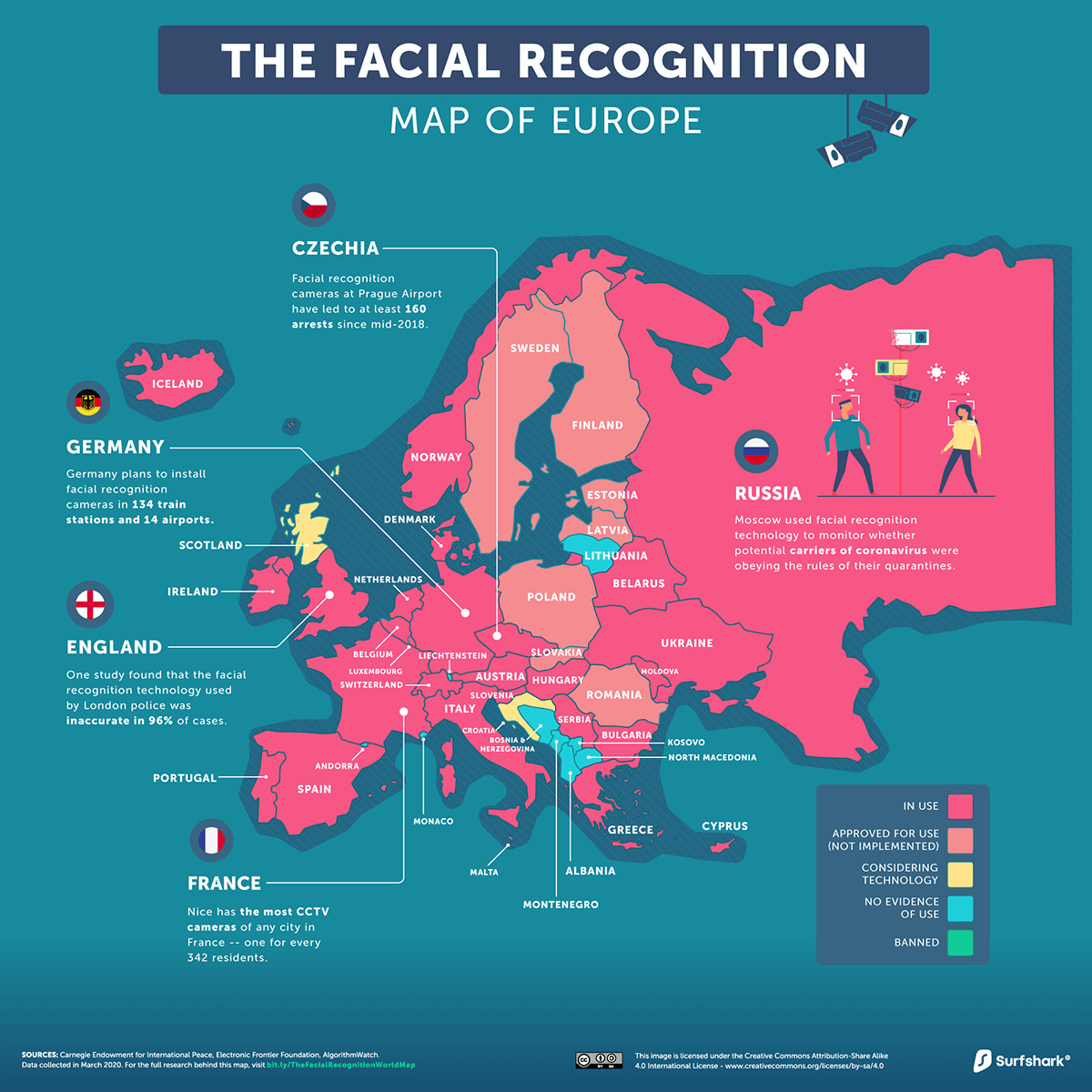

Europe

Thirty-two European countries have approved or are using face recognition surveillance. London police introduced face recognition CCTV cameras in 2020 and made their first arrest with the technology the same year. In Germany, face recognition is expected to be installed at twelve airports and more than 130 train stations. A backlash against the technology's spread has led to bans on its use in schools in Sweden and France.

Photo: Surfshark

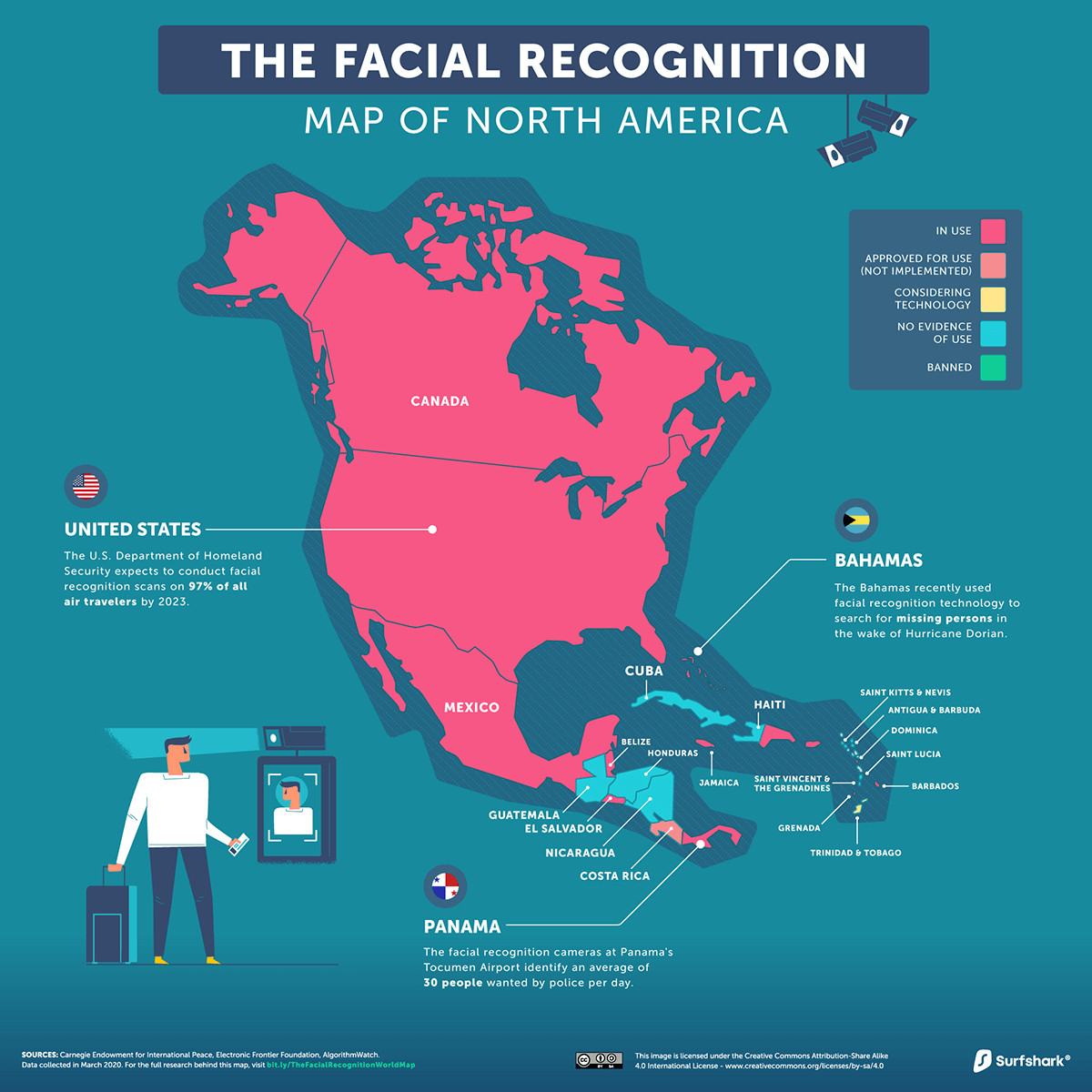

North America

Half of all North American countries, including the United States, currently use face recognition for surveillance. The Department of Homeland Security set a goal of using face recognition on 97% of departing air passengers by 2023, and police databases already hold the faces of more than half of Americans. Many police agencies and airports have adopted the technology, but a growing number of US jurisdictions, including San Francisco, have banned its use by government over privacy concerns.

Photo: Surfshark

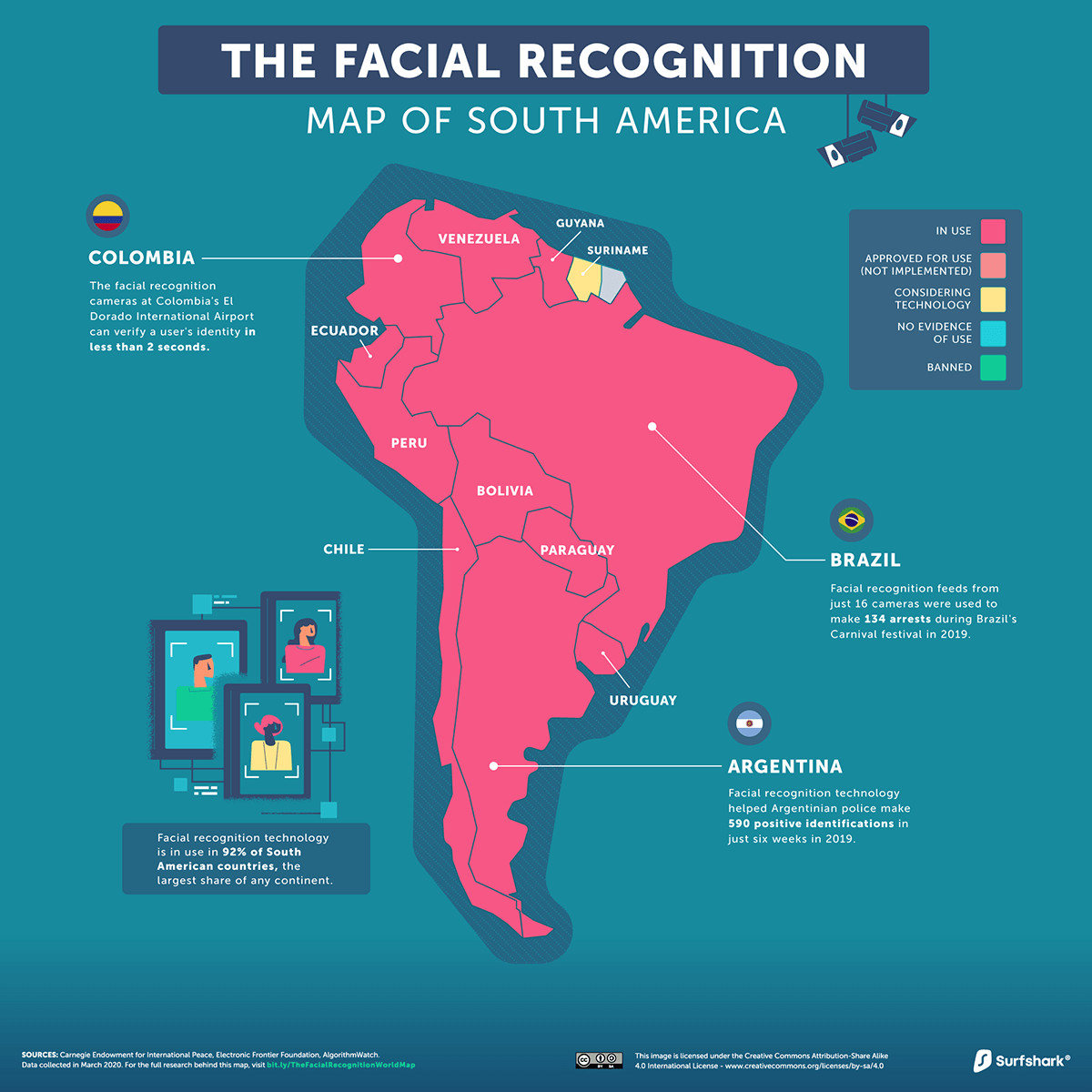

South America

In 92% of South American countries, including Brazil and Argentina, police forces have used face recognition. Using only a handful of camera feeds at large events, they have been able to identify hundreds of wanted people. Brazilian authorities used the technology to catch one of Interpol's most-wanted fugitives in the region.

Photo: Surfshark

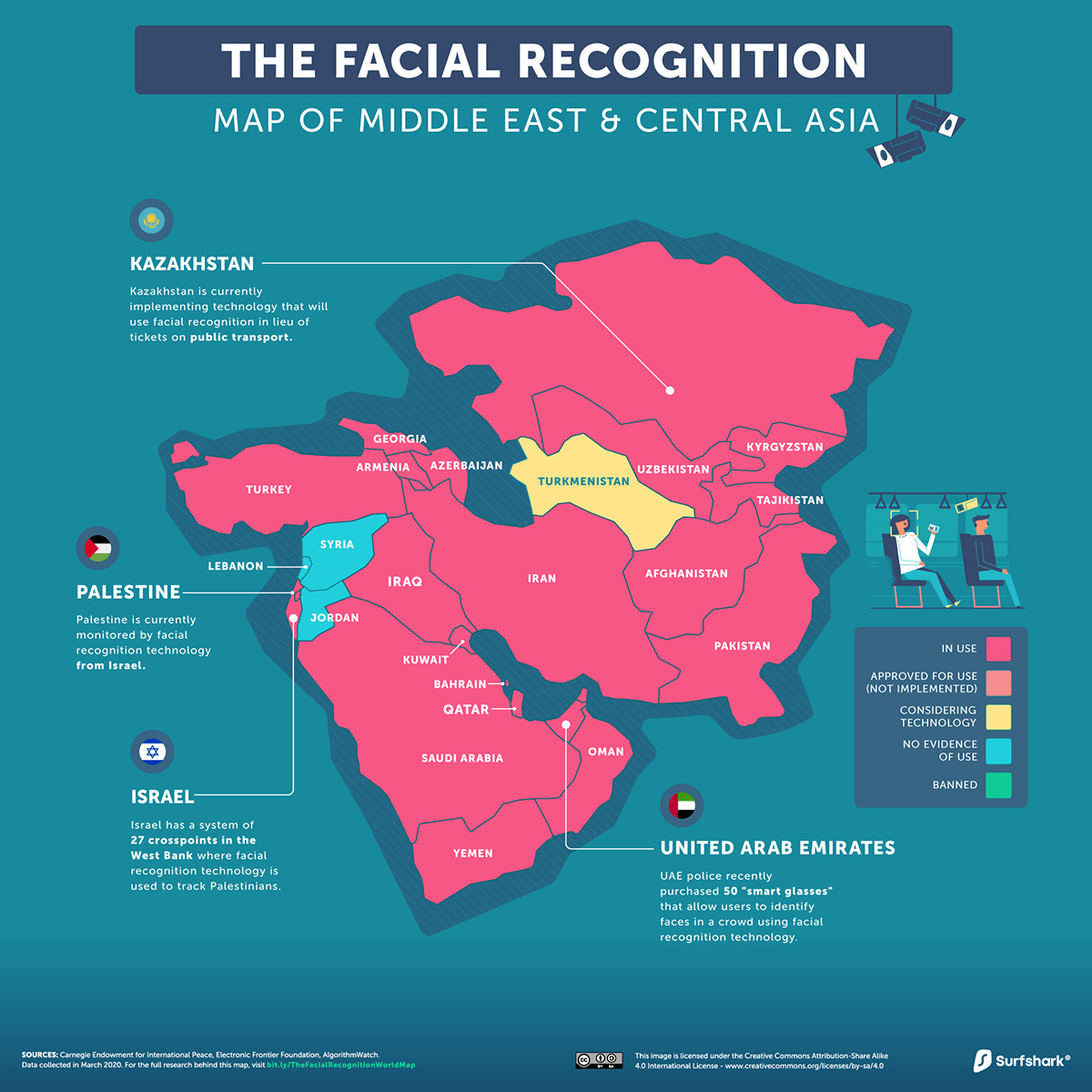

Middle East & Central Asia

The UAE recently bought smart glasses with built-in face recognition to identify people in crowds, while Kazakhstan is testing a system that scans passengers' faces in place of public transport tickets. In total, face recognition surveillance is currently in use in 76% of countries in the Middle East and Central Asia.

Photo: Surfshark

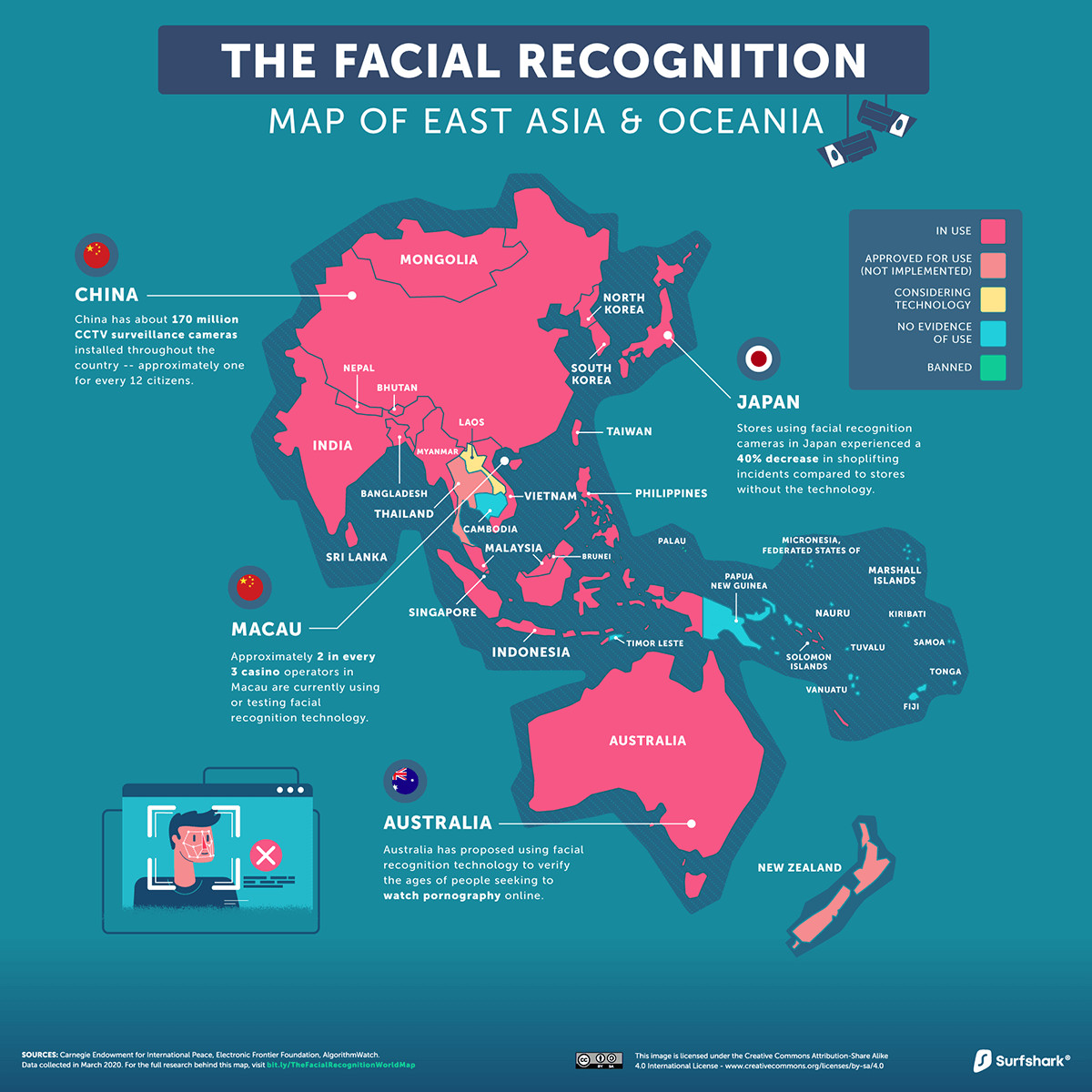

Oceania and Asia

With an estimated one camera for every 12 people in the country, China leads the world in the widespread use of face recognition. So far, the Chinese government has sold its AI surveillance technology to at least 16 other countries. Elsewhere, Australia wants to use face recognition to verify ages before granting access to online pornography, while Japan plans to use it in casinos to curb gambling addiction.

Photo: Surfshark

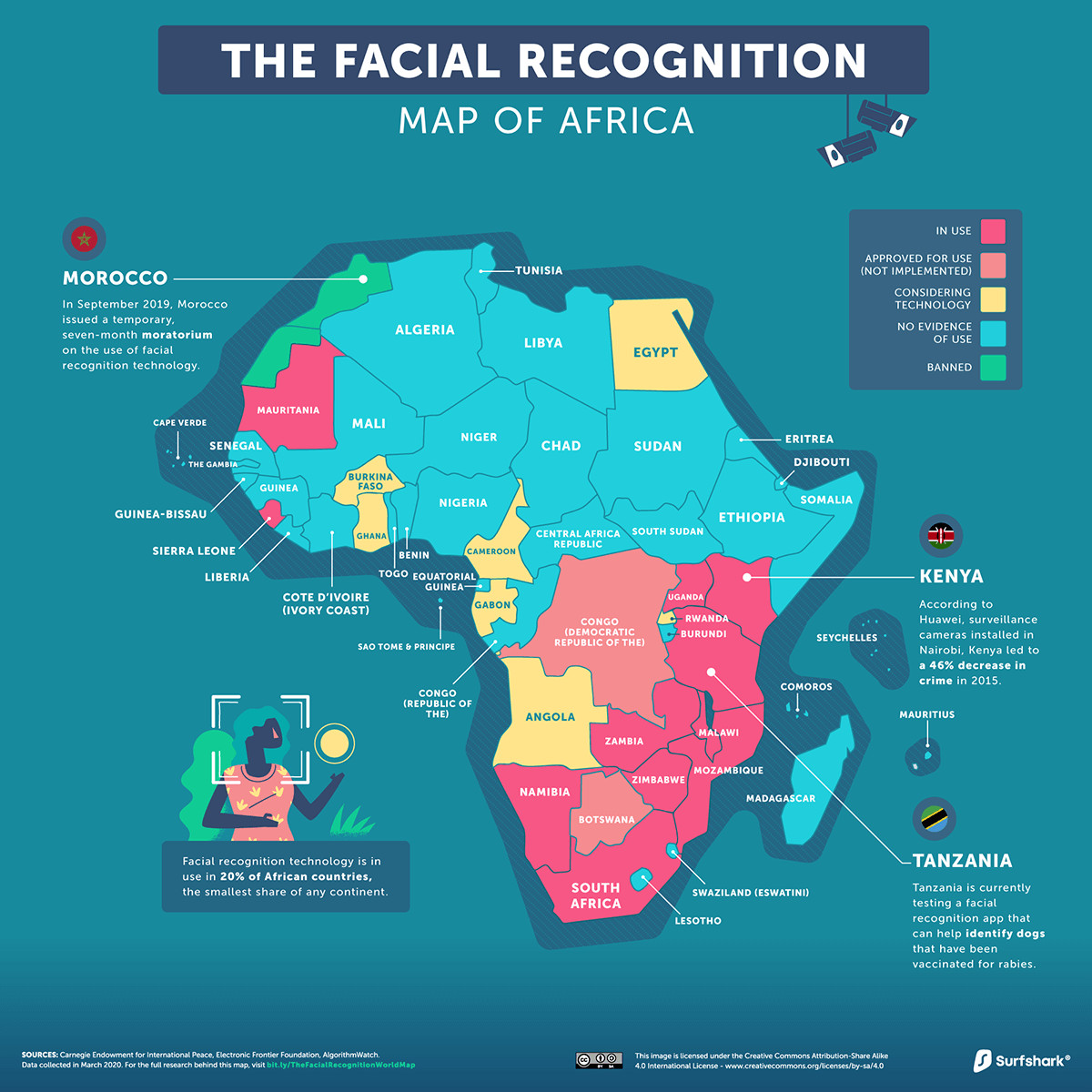

Africa

Africa has the lowest adoption of face recognition surveillance of any continent, with the technology in use in 20% of its countries. That share is expected to grow, however, as countries such as Zimbabwe have struck deals to receive the technology from China in exchange for biometric data intended to improve the system's accuracy across ethnic groups.

Photo: Surfshark

The Contested UK Trials of Rekognition

In the UK station trials, the controversial Rekognition system, which Amazon has continued to market for surveillance despite internal opposition, was used to analyze camera footage for facial data. According to documents obtained through a freedom of information request, images were captured as passengers crossed "virtual tripwires" at ticket barriers. The images were then sent to Rekognition, which estimated attributes such as age and gender and labeled emotions such as happiness, sadness, or anger.

The trials explored using this data to measure "passenger satisfaction" and possibly to increase "advertising and retail revenue" at stations. Yet researchers have long warned that inferring emotions from photos is scientifically questionable.
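To make the pipeline described above more concrete, here is a minimal Python sketch, assuming an application sends a captured frame to Rekognition's `DetectFaces` API (via boto3's `detect_faces` with `Attributes=["ALL"]`) and reduces the result to a per-face summary. It is not Network Rail's code. The helper `summarize_faces` and the sample response are hypothetical; only the response field names (`FaceDetails`, `AgeRange`, `Gender`, `Emotions`) follow Rekognition's documented output shape.

```python
from typing import Any


def summarize_faces(response: dict[str, Any]) -> list[dict[str, Any]]:
    """Hypothetical helper: reduce a DetectFaces-style response to an age
    range, a gender label and the top-scoring emotion label for each face."""
    summaries = []
    for face in response.get("FaceDetails", []):
        emotions = face.get("Emotions", [])
        # Keep only the emotion label the model scored highest. This describes
        # the appearance of a facial expression, not a verified inner state.
        top = max(emotions, key=lambda e: e["Confidence"], default=None)
        age = face.get("AgeRange", {})
        summaries.append({
            "age_range": (age.get("Low"), age.get("High")),
            "gender": face.get("Gender", {}).get("Value"),
            "top_emotion": top["Type"] if top else None,
            "emotion_confidence": top["Confidence"] if top else None,
        })
    return summaries


# In a live system the response would come from boto3, for example:
#   client = boto3.client("rekognition")
#   response = client.detect_faces(Image={"Bytes": jpeg_bytes}, Attributes=["ALL"])
# The dictionary below is a hypothetical, hand-written example in that shape.
sample_response = {
    "FaceDetails": [
        {
            "AgeRange": {"Low": 25, "High": 35},
            "Gender": {"Value": "Female", "Confidence": 97.1},
            "Emotions": [
                {"Type": "CALM", "Confidence": 61.0},
                {"Type": "HAPPY", "Confidence": 30.5},
                {"Type": "SAD", "Confidence": 2.2},
            ],
        }
    ]
}

for summary in summarize_faces(sample_response):
    print(summary)
```

Each emotion comes with a confidence score, so collapsing the list to a single top label hides how uncertain the model was. That is one reason researchers caution against treating such labels as evidence of how a passenger actually feels.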

Despite evident problems with validity and accuracy, the trials expanded to other use cases, such as detecting trespassing, overcrowding, smoking, screaming, and loitering. The aim was to alert staff automatically to perceived risks and "anti-social behavior" so they could respond more quickly.

Even where face recognition itself is not used, privacy organizations have criticized the general lack of transparency and public engagement around such invasive surveillance. One risk assessment document displayed what has been called an "ignorant attitude": it asked whether certain individuals were "likely to object or find it intrusive," then dismissed such worries with the answer, "In general, no, but there's no accounting for some people."

According to Jake Hurfurt, head of research at Big Brother Watch, which obtained the documents, the rollout and normalization of AI surveillance in these public spaces with little consultation or debate is a very worrying trend.

Network Rail has argued that the trials are an appropriate way to maintain security and that they comply with applicable laws. It has not responded to questions about further privacy concerns or the current status of emotion detection.

A Global Debate: Is It Appropriate?

The rapid spread of face recognition and AI video analytics for surveillance by public and private organizations has sparked a global debate over ethics, accuracy, and civil liberties. Proponents argue that the technologies improve security and efficiency, while critics warn that they could enable new levels of discrimination, intrusive surveillance, and erosion of privacy.

The use of Amazon's Rekognition software to covertly scan UK rail passengers and judge their emotional states raises deeper questions about oversight, consent, and where to draw the line on AI surveillance in public spaces.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Victoria is a writer on a variety of technology topics including Web3.0, AI and cryptocurrencies. Her extensive experience allows her to write insightful articles for the wider audience.
